I'm already pushing "double" to its limit of precision.

"float" has less than half the precision of "double" (24 bits of mantissa versus 53), so that would require more than 4x the work.

Actually, because of the way the algorithm works, using type "float" would require MUCH more than 4x the work. It would actually fail above a certain (small) size.
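To make the precision gap concrete, here is a small sketch (not taken from the program itself) that uses Python's `struct` module to round values to 32-bit "float". The helper name `to_float32` is made up for this illustration. It shows that "float" stops representing every integer exactly past 2^24, while "double" holds out until 2^53.

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (a 64-bit double) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

FLOAT_BITS = 24   # IEEE 754 binary32 significand, counting the implicit bit
DOUBLE_BITS = 53  # IEEE 754 binary64 significand, counting the implicit bit

# 2^24 - 1 still fits in a float exactly.
float_exact = to_float32(2.0**FLOAT_BITS - 1) == 2**FLOAT_BITS - 1

# 2^24 + 1 does not: the low bit is rounded away.
float_lost = to_float32(2.0**FLOAT_BITS + 1) == 2**FLOAT_BITS

# A double has the same problem, but only at 2^53 + 1.
double_lost = float(2**DOUBLE_BITS + 1) == float(2**DOUBLE_BITS)

print(float_exact, float_lost, double_lost)
```

Any intermediate value in the algorithm that needs more bits than this is silently rounded, which is where the wrong digits would come from.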

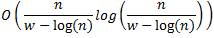

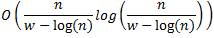

The run-time complexity is this:

where "n" is the # of digits

and "w" is the # of bits of precision in the floating-point type.

When the denominator goes to zero, the run-time (and memory) blows up to infinity - in other words, the algorithm fails.
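Here is a rough sketch of why that happens, using a simplified textbook model rather than the exact complexity from this post. The assumption is that multiplying with a length-N floating-point FFT on b-bit pieces needs about 2*b + log2(N) bits per output coefficient, and that has to fit in the w bits of mantissa. Round-off error is ignored, and the function names and the model itself are just for illustration.

```python
import math

def max_bits_per_point(w: int, transform_len: int) -> float:
    """Assumed bound: 2*b + log2(N) <= w, solved for b."""
    return (w - math.log2(transform_len)) / 2

def relative_work(w: int, n_bits: int):
    """Return N*log2(N) for the smallest power-of-two transform length N that
    can hold n_bits, or None if no length works with a w-bit mantissa."""
    N = 2
    while True:
        b = math.floor(max_bits_per_point(w, N))
        if b < 1:
            # Not even one bit per point fits any more: the method fails.
            return None
        if b * N >= n_bits:
            return N * math.log2(N)
        N *= 2

# Compare "float" (w = 24) against "double" (w = 53).
small = 2**20   # about a million bits
large = 2**30   # about a billion bits

float_small = relative_work(24, small)
double_small = relative_work(53, small)
float_large = relative_work(24, large)
double_large = relative_work(53, large)

print(float_small / double_small)   # ratio of work at the small size
print(float_large, double_large)    # None means the size is out of reach
```

In this model the usable bits per point shrink as the transform gets longer, so "float" runs out of room at a fairly small size while "double" keeps going for much longer. A wider type would push that wall out further still.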

This is the reason why I can't use a GPU.

If there were a 128-bit floating-point type that was supported by hardware, the program would actually be MUCH faster.

EDIT: That complexity is just a reasonable approximation to the true complexity.

The true complexity (ignoring round-off error) has special functions in it... so it's unreadable to normal people (even to me).
