What AGESA does the 9905 ASUS BIOS use?

Don't believe in miracles like a 50-100% boost from just flashing a new BIOS.

i5 2500K (L041C124) @ 5GHz + Scythe Mugen 2 rev. B | ASRock P67 Extreme4 B3 UEFI L3.19 | ADATA 2x4GB DDR3 1600 | MSI Radeon RX 470 4GB | 2x Crucial m4 64GB SSD RAID 0, Seagate 7200.12 500GB, Samsung F4 EG 2TB | 24" HP LP2475w | EVGA SuperNOVA G2 750W | Fractal Design Define R3 | Windows 10 64 bit

Testing games at such low resolution is complete fail and I'm not listening to the BS that it puts the emphasis on the cpu. Benchmarks are supposed to be indicative of real life performance and nobody uses fast graphics cards and cpu's at low resolution. Furthermore, by not benching at enthusiast-level resolutions you run the risk of failing to fully stress the cpu's in that role and will give a false impression as to how much better or worse a certain cpu is at gaming. There's no excuse for it.

so you think the way to test CPU performance in games is by limiting the overall performance with the GPU? like AMD did on their slides (HD6870, 1080p)?

I think there is value in both methods. No one is hiding anything; clearly the FX is more than good enough to play these games, as are a Phenom II X4 or an i3 2100,

but you need to consider that they probably had limited time for testing, and that maybe a dual gpu configuration can have the same performance difference as this on a higher res, or a new GPU next year and so on...

ideally we could have more test situations varying the resolution, and perhaps more well known CPU intensive games, but anyway, I think by now everybody knows that gaming is mostly limited by GPU if you have a good enough CPU,

but it's still a valid test, showing what the CPUs can achieve under these conditions.

With today's high-end video cards, people are more often CPU-limited than they think.

People say it's supposed to be a cpu benchmark but there are plenty of those in reviews. It's supposed to be a cpu benchmark in *gaming*. That means you don't negate the graphics card.

It is quite likely that SB will score 50% higher fps than Bulldozer in a game like Dirt 3 at low res, but lose by 5-10% at 1080p. What is the better gaming cpu?

.:. Obsidian 750D .:. i7 5960X .:. EVGA Titan .:. G.SKILL Ripjaws DDR4 32GB .:. CORSAIR HX850i .:. Asus X99-DELUXE .:. Crucial M4 SSD 512GB .:.

Last edited by jimbo75; 10-09-2011 at 09:43 AM.

Looks like the retail pricing turns out to be the best avg benchmark for cpu performance.

Would have been nice to see some 2nd pass x264 results.

I was going to build a BD rig but I'll probably wait and see what PD brings to the table at this point.

Both Intel and AMD are going to need to deliver something more compelling, I'm just not feeling the excitement to upgrade.

Work Rig: Asus x58 P6T Deluxe, i7 950 24x166 1.275v, BIX2/GTZ/D5

3x2048 GSkill pi Black DDR3 1600, Quadro 600

PCPower & Cooling Silencer 750, CM Stacker 810

Game Rig: Asus x58 P6T, i7 970 24x160 1.2v HT on, TRUE120

3x4096 GSkill DDR3 1600, PNY 660ti

PCPower & Cooling Silencer 750, CM Stacker 830

AMD Rig: Biostar TA790GX A2+, x4 940 16x200, stock hsf

2x2gb Patriot DDR2 800, PowerColor 4850

Corsair VX450

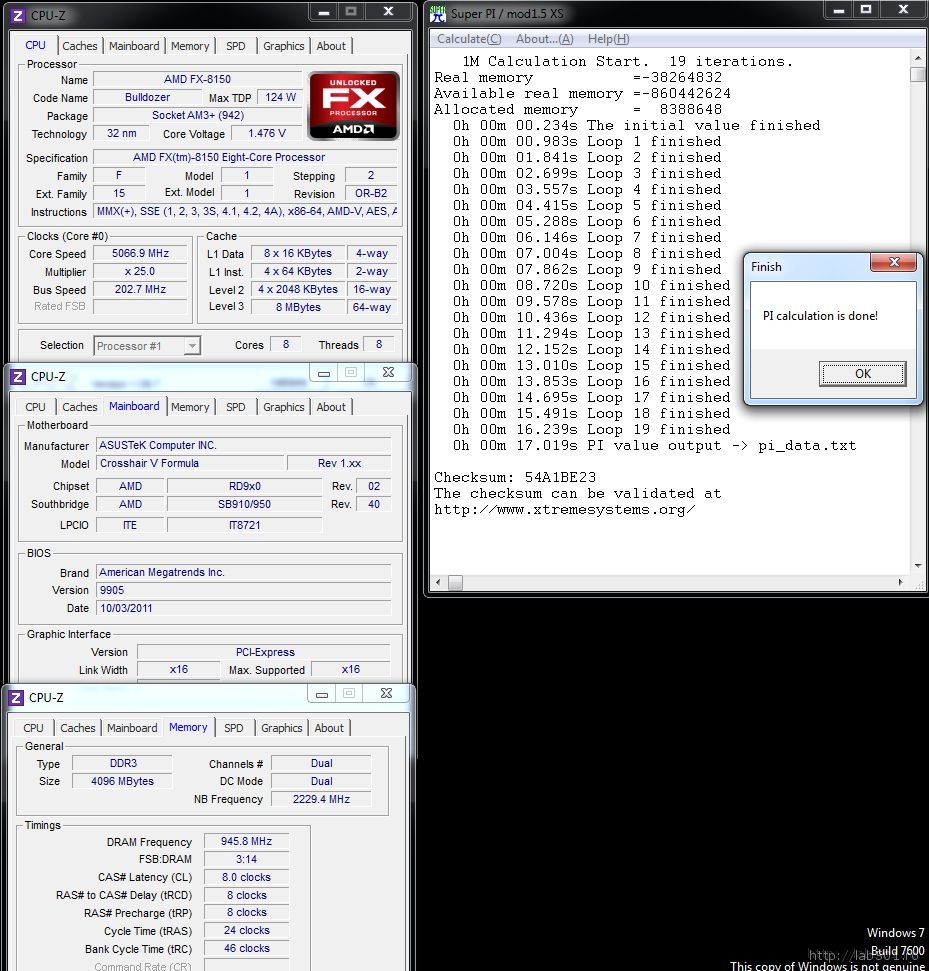

Finally legit preview of bulldozer! Can't say the same for windows 7.

:P

Can't wait, 3 more days to see more!

Last edited by redrumy3; 10-09-2011 at 09:55 AM.

STEAM

Corsair H80

i5 2500K 4.5Ghz 1.33v

8GB GSkill Sniper DDR3-1600 1.25v

Gigabyte Z68XP-UD3P F7 | XFX 6970 2GB

64GB Adata SSD | 2 x Seagate 7200.12 500GB Raid 0

Corsair 750TX | Asus Xonar Essence STX

Sennheiser HD-555 Mod | Asus VH242HL

Logitech G9x | Logitech G15v2

Corsair Vengeance C70 | Windows 8 Pro 64-Bit

If AMD are calling people tomorrow they are probably trying to ensure this low-res gaming skullduggery isn't happening, which imo is their right as they are simply ensuring that the gaming benchmarks are representative of the cpu's while gaming. Also, if BD is strong in minimum framerates then AMD would want that message to be getting across, which is also well within their rights.

Any site using low res gaming benchmarks has an axe to grind with AMD, or an intel shill. Why do you think the unnamed blogger benchmarked Unigine at low res to "prove" his point? I've never seen anyone else do that, like ever. But even at that BD was massively better in minimum framerates.

Last edited by Maxforces; 10-09-2011 at 10:42 AM.

have you even read any recent reviews?

Most bigger review sites state in their test methods why they use lower resolutions for games, and often also provide benches at full resolution and mention that this is not a real-life scenario..

And here you have a perfect example of why testing at real-world resolutions doesn't tell you anything about the CPU at all... you're only testing the GPU:

[Charts: fc2800.gif, fc21280.gif, fc21600.gif]

Granted, this test is older (March 2010, from when the X6 was released, tested with an HD5870). Looking at the "real world results" you would think all CPUs are pretty much even, but what happens when you plug in dual 580s instead of a 5870? The GPU limitation is suddenly lifted, the CPU becomes the bottleneck again, and your "even" CPU gets smacked hard.

Both tests have validity; it's just that recently people dismiss low-res tests for whatever reason...

Judging by the combined averages, the Intel system will have higher minimums on average. Without a FRAPS FPS history graph, those minimum and maximum numbers mean nothing: all it takes is one split-second dip and the benchmarking program will register it as the minimum, while for the rest of the benchmark Sandy Bridge will have higher fps than Bulldozer.
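The point about a single dip setting the "minimum" can be illustrated with a small sketch. It assumes a made-up frame-time trace (not data from any review) and compares the raw minimum with a "1% low" figure; the nearest-rank percentile used here is only one of several definitions in circulation.

```python
# Hypothetical frame-time trace in milliseconds (not from any review):
# a steady ~60 fps run with a single 100 ms hitch.
frame_times_ms = [16.7] * 99 + [100.0]

def fps_summary(frame_times_ms):
    """Return (average fps, minimum fps, 1%-low fps) for a frame-time trace."""
    n = len(frame_times_ms)
    # Average fps = frames rendered / total seconds elapsed.
    avg_fps = n / (sum(frame_times_ms) / 1000.0)
    # The minimum is decided entirely by the single slowest frame.
    min_fps = 1000.0 / max(frame_times_ms)
    # "1% low": fps at the 99th-percentile frame time (nearest-rank method).
    ordered = sorted(frame_times_ms)
    rank = -(-99 * n // 100)  # integer ceil(0.99 * n)
    one_pct_low = 1000.0 / ordered[rank - 1]
    return avg_fps, min_fps, one_pct_low

avg_fps, min_fps, one_pct_low = fps_summary(frame_times_ms)
print(f"avg {avg_fps:.1f} fps, min {min_fps:.1f} fps, 1% low {one_pct_low:.1f} fps")
```

One 100 ms hitch drags the reported minimum down to 10 fps, while the percentile figure stays near 60 fps, which is why a frame-time graph says more than a single min/max pair.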

What exactly is the point in benchmarking at such low resolutions? RE2 and HAWX are 50% faster on SB than BD in these tests. Is that representative of the other results? Is that representative of a gaming benchmark?

Or is it simply representative of a useless benchmark of a game at low resolution while giving no indication of how the chips perform during actual gaming?

Both tests have validity, it's just that recently people dismiss low-res tests for whatever reason...

Do you think that might be because nobody games at 800x600 on enthusiast-level hardware?

Last edited by jimbo75; 10-09-2011 at 10:33 AM.

It's not so much to directly assess real gaming performance as it is to showcase and isolate the CPU's ability to pump out fps, rather than showing how well the video card performs.

Turning up the resolution shifts the bottleneck to the GPU; if you want to bench the CPU you need to isolate it from GPU limitations.
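The bottleneck argument can be put into a toy model. This sketch assumes, purely for illustration, that CPU cost per frame is fixed, that GPU cost grows linearly with pixel count, and that CPU and GPU overlap perfectly so the slower of the two sets the frame rate. The CPU and GPU costs are hypothetical numbers, not measurements of Sandy Bridge, Bulldozer or any real card, and real pipelines (driver queues, frames in flight) are more complicated.

```python
# Toy frame-time model (an assumption for illustration, not measured data):
# CPU cost per frame is fixed; GPU cost grows linearly with pixel count.
# CPU and GPU are assumed to overlap fully, so the slower side sets the pace.

def model_fps(cpu_ms, gpu_ms_per_mpix, width, height):
    gpu_ms = gpu_ms_per_mpix * (width * height / 1e6)
    return 1000.0 / max(cpu_ms, gpu_ms)

# Hypothetical parts: "fast" CPU needs 8 ms/frame, "slow" CPU 12 ms/frame;
# a single GPU needs 10 ms per megapixel, a "dual" GPU setup half that.
CPUS = {"fast": 8.0, "slow": 12.0}
GPUS = {"single": 10.0, "dual": 5.0}

results = {}
for gpu_name, gpu_cost in GPUS.items():
    for res in [(800, 600), (1920, 1080)]:
        fps = {cpu: model_fps(ms, gpu_cost, *res) for cpu, ms in CPUS.items()}
        results[(gpu_name, res)] = fps
        print(f"{gpu_name:6} {res[0]}x{res[1]}: "
              f"fast {fps['fast']:.0f} fps, slow {fps['slow']:.0f} fps")
```

With the single GPU at 1920x1080 both CPUs land on the same ~48 fps because the GPU is the limit; at 800x600, or with the faster "dual" GPU at 1080p, the CPU gap reappears.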

Most review sites do a range of resolutions and settings nowadays, and when the NDA is over you won't have anything to complain about.

While we're at it, CB (ComputerBase) has a nice article on this..

http://www.computerbase.de/artikel/g...frameverlaeufe

If you jack up resolution and AA/AF, it's pretty much irrelevant what CPU you use. It all depends on the GPU... on an HD6850 even a PII X2 produces nearly the same framerates as a 2600K @ 4.5GHz...

So why not benchmark it to see if that's true or not?

If SB is 50% faster than BD, then why is it not 50% faster in anything else (except SuperPi :p)? Why only in very low resolution gaming? It is obvious to me that low-res gaming is not indicative of actual CPU performance. It's an aberration.

Again, Anandtech's CPU benches are s***ty.

I HAVE NEVER PLAYED CRYSIS on MEDIUM SETTINGS like they use in that s***ty review. THEY ARE ALSO USING A NOW ANCIENT GTX280 in that review. To top it all off, they don't even mention minimum framerates.

ANANDTECH'S CPU BENCHMARKS ARE USELESS.

They might as well just post a 3d mark bench.

It is indicative of the cpu's ability to generate game & frame data for the gpu. If you use high or low resolution the cpu still generates the same game and frame data but at high resolution the cpu is limited by the gpu's speed for rendering the frames which means all you see is more of a gpu limitation than a cpu limitation at higher resolutions.

In the end the cpu that can pump the highest fps period, raw cpu speed unhindered, has more processing power but that doesn't mean it translates into raw performance for other workloads.

Last edited by highoctane; 10-09-2011 at 10:51 AM.

Here is what I saw in actual gameplay, with the settings I actually use, not some s***ty canned bench like what Hornet just posted, as if that's supposed to mean something to me.

This is what I would expect from a "professional review". I should have left out the maximum framerate, though.

This is an i5 750 at 4ghz and an i5 2500k at 4.7ghz.

Last edited by BababooeyHTJ; 10-09-2011 at 10:53 AM.

Testing prefetching on the cpu.

Most games have one heavy render thread; only BF3 and a few others use multithreaded rendering (a DX11 feature). Most games use half or less of the total CPU power. For most games the data is rather static: maps etc. are loaded from disk and not modified during gameplay.

The main work is calculating the dots sent to the GPU. Long trains of data are calculated and sent to the GPU in chunks, together with commands telling the GPU how to work with those dots.

The amount of work the GPU needs to do for each frame is much smaller at low resolutions. The GPU can then be faster than the CPU, so it starts waiting for the CPU to process all the dots.

Prefetching increases performance a lot when working with long trains of data. The CPU guesses where the next data will be and fetches it while the current data is being processed.
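A toy simulation of this idea, assuming a hypothetical next-line prefetcher and a tiny 64-line LRU cache; neither models any real Intel or AMD design, and it says nothing about the L1/L2 details claimed for specific chips. It only shows why guessing "the next line comes next" pays off on a long sequential train of data and barely helps when the access order is shuffled.

```python
import random
from collections import OrderedDict

class LRUCache:
    """Tiny least-recently-used cache holding line numbers (toy model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()

    def contains(self, line):
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            return True
        return False

    def insert(self, line):
        self.lines[line] = True
        self.lines.move_to_end(line)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict least recently used

def count_misses(accesses, capacity=64, prefetch=True):
    cache = LRUCache(capacity)
    misses = 0
    for line in accesses:
        if not cache.contains(line):
            misses += 1  # demand miss: data had to be fetched now
            cache.insert(line)
        if prefetch:
            # Hypothetical next-line prefetcher: guess line+1 is needed next.
            cache.insert(line + 1)
    return misses

N = 10_000
sequential = list(range(N))
shuffled = sequential[:]
random.Random(42).shuffle(shuffled)

results = {
    "sequential": (count_misses(sequential, prefetch=False), count_misses(sequential)),
    "random": (count_misses(shuffled, prefetch=False), count_misses(shuffled)),
}
for pattern, (no_pf, with_pf) in results.items():
    print(f"{pattern:10} misses without prefetch {no_pf}, with prefetch {with_pf}")
```

On the sequential stream only the very first access misses once prefetching is on, while on the shuffled stream almost every access still misses, which is the same distinction drawn between predictable and unpredictable data.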

i5/i7 prefetches data to L1 and L2 cache, phenom is only using the L1 with some small prefetching functionality.

This is the reason why i5/i7 can produce much more fps on low resolutions. It's about prefetching and a fast cache.

If a game uses more threads and its maps are dynamic and complex, the data isn't nearly as predictable and prefetching isn't as important anymore.
