-=The Gamer=-

MSI Z68A-GD65 (G3) | i5 2500k @ 4.5Ghz | 1.3875V | 28C Idle / 65C Load (LinX)

8Gig G.Skill Ripjaw PC3-12800 9-9-9-24 @ 1600Mhz w/ 1.5V | TR Ultra eXtreme 120 w/ 2 Fans

Sapphire 7950 VaporX 1150/1500 w/ 1.2V/1.5V | 32C Idle / 64C Load | 2x 128Gig Crucial M4 SSD's

BitFenix Shinobi Window Case | SilverStone DA750 | Dell 2405FPW 24" Screen

-=The Server=-

Synology DS1511+ | Dual Core 1.8Ghz CPU | 30C Idle / 38C Load

3 Gig PC2-6400 | 3x Samsung F4 2TB Raid5 | 2x Samsung F4 2TB

Heat

Could you check back in this thread for a special build? I'm trying to find out why some systems fail on WMI.

Meanwhile, could you post your motherboard, OS, tweaks (if any) and connected drives (all sorts of media)?

Hi Tilt, long time no see.

Same goes for you: I need to find out why it fails on WMI on your system as well, so could you list just the basics?

It's fully legal to use cache, as long as it's mentioned.

Not very hard to tell anyway.

I'll display it as part of the info, shouldn't be that hard to detect.

So, what are the most known ones?

-

Hardware:

Yes, your result is largely cached. Run an 8GB test file and watch your score drop. The larger the test file, the smaller the part of the test that is cached.
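Here is a back-of-envelope model of why a larger test file helps. It rests on loudly simplified assumptions: reads are spread uniformly over the test file, the cache is warm and holds only test-file data, and a cache hit and a device read each take a fixed time. Under those assumptions the cached share is roughly min(1, cache size / test file size), and the reported result is a time-weighted (harmonic) mix of cache speed and device speed. The figures used (4GB of cache, 1000 MB/s from cache, 30 MB/s from the SSD) are hypothetical.

```python
def cached_fraction(cache_gb: float, testfile_gb: float) -> float:
    """Share of uniformly random reads a warm cache can serve (simplified model)."""
    if cache_gb < 0 or testfile_gb <= 0:
        raise ValueError("cache size must be >= 0 and test file size > 0")
    return min(1.0, cache_gb / testfile_gb)


def blended_mb_s(hit_fraction: float, cache_mb_s: float, device_mb_s: float) -> float:
    """Average throughput when a fraction of requests is served from cache.

    Combined via time per MB (a weighted harmonic mean), because every
    request costs either the cache's time or the device's time.
    """
    seconds_per_mb = hit_fraction / cache_mb_s + (1.0 - hit_fraction) / device_mb_s
    return 1.0 / seconds_per_mb


# Hypothetical figures: 4GB usable cache, 1000 MB/s from cache, 30 MB/s from the SSD.
for size_gb in (1, 4, 8, 32):
    hit = cached_fraction(4.0, size_gb)
    print(f"{size_gb:>2}GB test file: {hit:.0%} cached, ~{blended_mb_s(hit, 1000.0, 30.0):.0f} MB/s")
```

With these made-up numbers the 1GB and 4GB files report pure cache speed, an 8GB file falls to roughly 58 MB/s and a 32GB file to roughly 34 MB/s. Even a 50% hit rate nearly doubles the reported figure, which is why a partly cached score still looks inflated.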

Anvil - it shouldn't be too hard to detect cache. For example, results over 92ish MB/s in QD1 4KB reads must be cached, since nothing can do more than that. If it is not an ioDrive/ACARD, then over 40 MB/s is not possible unless it is a cached result. You could make the read results cache-resistant by creating the test file, then creating another 4GB test file, and then testing the reads on the original test file. This way the results should be real and represent the actual storage device instead of DRAM.

Or create the test file, ask for a reboot, then test the reads after the reboot, but that would be annoying as hell.
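The rule of thumb above could be coded as a simple flag. This is only a sketch: the function name and the `device_class` labels are hypothetical, and the thresholds are the rough 2011-era figures from this post (about 40 MB/s for ordinary SSDs, about 92 MB/s for ioDrive/ACARD-class devices), not hard physical limits.

```python
# Rough QD1 4KB random-read ceilings quoted in the thread (MB/s); rules of thumb, not limits.
QD1_4K_READ_CEILING_MB_S = {
    "ssd": 40.0,            # ordinary SATA SSDs
    "iodrive_acard": 92.0,  # ioDrive / ACARD-class devices
}


def looks_cached(qd1_4k_read_mb_s: float, device_class: str = "ssd") -> bool:
    """Return True if a QD1 4KB random-read result exceeds the plausible ceiling
    for the device class, suggesting the run was (partly) served from cache."""
    try:
        ceiling = QD1_4K_READ_CEILING_MB_S[device_class]
    except KeyError:
        raise ValueError(f"unknown device class: {device_class!r}") from None
    return qd1_4k_read_mb_s > ceiling


print(looks_cached(120.0))                  # True: far above any SSD at QD1
print(looks_cached(31.0))                   # False: plausible for a real SSD
print(looks_cached(80.0, "iodrive_acard"))  # False
```

A fixed ceiling like this is easy to trip over (a RAID setup may legitimately land above it), so it is better treated as a warning shown alongside the result than as a verdict.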

There isn't an easy way.

Last edited by One_Hertz; 08-05-2011 at 08:08 AM.

I was simply thinking of listing it *if* a supplementary cache was installed.

(It is detectable in the registry.)

I might do some extra level of testing for cache later though.

So, besides Fancy-Cache, what other popular supplementary caches are used?


Great work Anvil, looks like it has tons of potential!

But my results seem to jump around strangely in performance compared to something like IOMeter.

It could use a few usability tweaks, and it always rewrites the test file when I run the test.

Do you have any more info on the program settings?

Anyway here's a result from single SF-2500:

"Red Dwarf", SFF gaming PC

Winner of the ASUS Xtreme Design Competition

Sponsors...ASUS, Swiftech, Intel, Samsung, G.Skill, Antec, Razer

Hardware..[Maximus III GENE, Core i7-860 @ 4.1Ghz, 4GB DDR3-2200, HD5870, 256GB SSD]

Water.......[Apogee XT CPU, MCW60-R2 GPU, 2x 240mm radiators, MCP350 pump]

Here you go. I will get the make and model for HDD and DVDRW later.

ASRock P67 Extreme4 1.90

Core i5 2500K / no OC

G.Skill Ripjaws 1600 4x2GB / no OC

HD6950 2GB / no OC

Intel Gigabit CT PCIe

M-Audio Delta 2496

Intel X18-M 2x80GB

Hitachi 2TB SATA2 internal on Marvell

DVDRW SATA2 internal on Marvell

Fantom USB 2TB

Antec Quattro 850W

Antec 1200

Win7 64 bit

http://www.superspeed.com/ is another one

Last edited by F@32; 08-05-2011 at 10:23 AM.

Sony KDL40 // ASRock P67 Extreme4 1.40 // Core i5 2500K //

G.Skill Ripjaws 1600 4x2GB // HD6950 2GB // Intel Gigabit CT PCIe //

M-Audio Delta 2496 // Crucial-M4 128GB // Hitachi 2TB // TRUE-120 //

Antec Quattro 850W // Antec 1200 // Win7 64 bit

Quote: "If it is not an ioDrive/ACARD then over 40 MB/s is not possible unless it is a cached result."

The 9265 can do that at QD1 in RAID 5; actually 54 MB/s at QD1.

These results are with the C300s. I bet these 8 x 128GB Wildfires can beat this. There was only 512MB of cache on this card when the test was done, and it is direct I/O anyway (FP uses no cache).


When I mention caching coming into play in the header above that, I am referring to the sequential write speed.

@Anvil: I would like the option to retain a static test file as well. That would be helpful. Even with the *now* well-known uber longevity of these drives, there's no need to speed along degradation in RAID arrays.
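One way a benchmark could honour a "keep static test file" option is to reuse the file when it already exists at the expected size and only write it otherwise. This is a hypothetical sketch (the helper name and behaviour are assumptions, not how Anvil's utility works). It fills new files with random, incompressible data so compressing controllers, such as the SandForce drives in this thread, can't shortcut the writes.

```python
import os


def ensure_test_file(path: str, size_bytes: int, chunk: int = 1 << 20) -> bool:
    """Reuse an existing test file of the right size; create it only if needed.

    Returns True if the file was (re)written, False if it was reused as-is.
    """
    if os.path.isfile(path) and os.path.getsize(path) == size_bytes:
        return False  # static file kept: no extra writes hit the SSDs
    with open(path, "wb") as f:  # "wb" truncates a wrong-sized leftover file
        remaining = size_bytes
        while remaining > 0:
            n = min(chunk, remaining)
            f.write(os.urandom(n))  # random, incompressible data
            remaining -= n
    return True
```

Calling it once per run writes the file on the first run only; later runs read the same file, so repeated benchmarking does not add a full test file's worth of writes each time.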

Also, one last wish: 512B at QD128, so I can show 465,000 IOPS.

I'm doing some long-run tests this week for an article, but I'll put the toys up and do some playing soon and post some results.
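For context on that wish, here is the arithmetic as a quick sketch. At 512B per I/O, 465,000 IOPS is only about 238 MB/s, and by Little's law (average latency = outstanding I/Os / IOPS, assuming the queue is kept full at steady state) QD128 at that rate implies roughly 275 µs per I/O. The function names are illustrative.

```python
def iops_to_mb_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal MB/s (10**6 bytes) for a given IOPS and block size."""
    return iops * block_bytes / 1e6


def littles_law_latency_us(iops: float, queue_depth: int) -> float:
    """Average latency per I/O implied by Little's law: latency = QD / IOPS."""
    return queue_depth / iops * 1e6


iops = 465_000
print(f"{iops_to_mb_s(iops, 512):.1f} MB/s")             # 238.1 MB/s
print(f"{littles_law_latency_us(iops, 128):.0f} us avg")  # 275 us avg
```

Small-block IOPS records and MB/s records are different stories: the IOPS figure is huge while the bandwidth stays modest.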

Quote: "results over 92ish MB/s in QD1 4KB reads must be cached since nothing can do more than that."

The architecture of RAID controllers and how they fundamentally operate, and thus their low-QD performance, is about to be turned on its head... The 12Gb/s plugfest is going to bring about a change in RAID controllers that is going to be just unbelievable.

I will say this... the Fusions/I/O Extremes, etc. will soon see the playing field changed very dramatically.

Last edited by Computurd; 08-05-2011 at 06:25 PM.

"Lurking" Since 1977

Jesus Saves, God Backs-Up

*I come to the news section to ban people, not read complaints.* - [XC]Gomeler

Don't believe Squish, his hardware does control him!

That result is partially cached. Iometer with a very large test file will not show those numbers. You can see from the latency that the real QD1 random reads are around 32MB/s for that config of yours. Edit: you even show yourself later in the review that the real QD1 random read number is 31MB/s...

RAID controllers cannot increase 4K QD1 random read performance above what the SSDs themselves are capable of, because at QD1 we are just talking about raw latency, i.e. the time it takes your devices to respond to a small-block read command. The absolute best a controller can do is add no overhead on top of that. It cannot make any device respond faster than it is capable of, no matter what kind of voodoo magic you believe in.
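The latency argument works because at QD1 only one request is in flight, so throughput is simply block size divided by average latency. A small sketch, assuming decimal MB (10^6 bytes), where one byte per microsecond is exactly 1 MB/s; the function names are illustrative.

```python
def qd1_mb_s_from_latency(latency_us: float, block_bytes: int = 4096) -> float:
    """QD1 throughput (decimal MB/s) from average latency: one request at a time."""
    return block_bytes / latency_us  # bytes per microsecond == MB/s


def qd1_latency_us_from_mb_s(mb_s: float, block_bytes: int = 4096) -> float:
    """Average latency implied by a QD1 throughput figure."""
    return block_bytes / mb_s


print(qd1_latency_us_from_mb_s(32))            # 128.0
print(qd1_mb_s_from_latency(128))              # 32.0
print(round(qd1_latency_us_from_mb_s(92), 1))  # 44.5
```

So ~32 MB/s corresponds to about 128 µs per 4KB read, and a genuine 92 MB/s at QD1 would need every single read to complete in roughly 44.5 µs.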
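A toy discrete-event model makes the point concrete. Its assumptions are all simplifications: every drive has the same fixed 4KB read latency, requests are striped round-robin, there is no caching, and any controller overhead simply adds to each request. At QD1 the next request is only issued after the previous one completes, so the extra drives sit idle; they only help once more requests are in flight. The function and parameter names are hypothetical.

```python
import heapq


def simulate_mb_s(num_drives: int, queue_depth: int, drive_latency_us: float,
                  controller_overhead_us: float = 0.0, requests: int = 10_000,
                  block_bytes: int = 4096) -> float:
    """Toy model of random reads against a striped array at a fixed queue depth.

    Returns decimal MB/s (bytes per microsecond).
    """
    if controller_overhead_us < 0:
        raise ValueError("a controller cannot remove latency")
    if requests < 1 or queue_depth < 1 or num_drives < 1:
        raise ValueError("need at least one request, queue slot and drive")
    drive_free_at = [0.0] * num_drives  # when each drive finishes its current request
    in_flight = []                      # min-heap of completion times
    now = 0.0
    for i in range(requests):
        if len(in_flight) == queue_depth:
            now = heapq.heappop(in_flight)  # wait until a queue slot frees up
        drive = i % num_drives              # round-robin striping (simplification)
        start = max(now, drive_free_at[drive])
        done = start + controller_overhead_us + drive_latency_us
        drive_free_at[drive] = done
        heapq.heappush(in_flight, done)
    return requests * block_bytes / max(in_flight)


for drives, qd, overhead in [(1, 1, 0.0), (8, 1, 0.0), (8, 1, 10.0), (4, 4, 0.0)]:
    mb_s = simulate_mb_s(drives, qd, 100.0, overhead)
    print(f"{drives} drive(s), QD{qd}, {overhead:.0f} us overhead: {mb_s:.1f} MB/s")
```

With a 100 µs drive latency, one drive and eight drives both come out at about 41 MB/s at QD1, 10 µs of controller overhead pulls that down to about 37 MB/s, and four drives at QD4 reach about 164 MB/s.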

Last edited by One_Hertz; 08-05-2011 at 08:04 PM.

Quote: "It can not make any device respond faster than they are capable of no matter the kinds of voodoo magic you believe in"

Even if I kill chickens?

Last edited by Computurd; 08-06-2011 at 05:30 AM.

Use a heavier beater and you might get better results.

TRU/i7920@4.0...AsusP6X58D...6G/Gskill(1603)...EvgaGtxTITAN...80GIntelG2...X-FI/680z...CorsairHX1000...thermaltakeXIII...HP LP-3065...8.1pro x64

TRU/i7920@3.6...AsusP6TWSPRO...12G/OCZ...XFX5770...2x64GSamSungSLC/Areca1110 raid0...2.8TB storage...SeasonicX650...CoolerMaster810...HP LP-3065...8.1pro x64

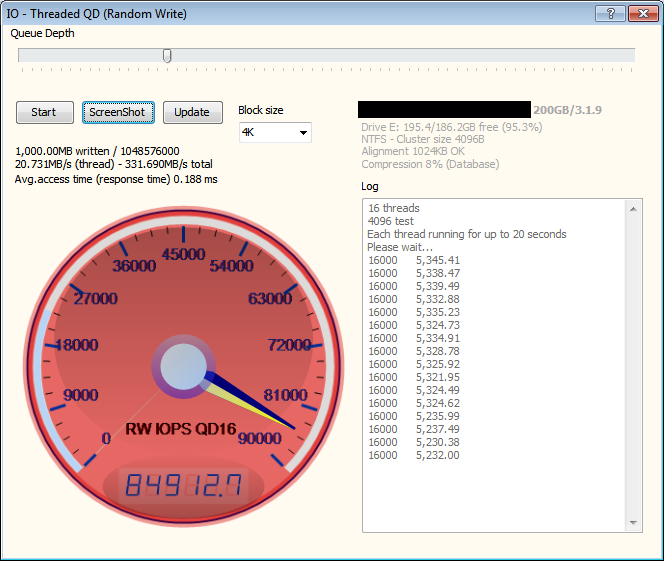

Single SF-2500 drive

Last edited by zads; 08-07-2011 at 05:15 PM.

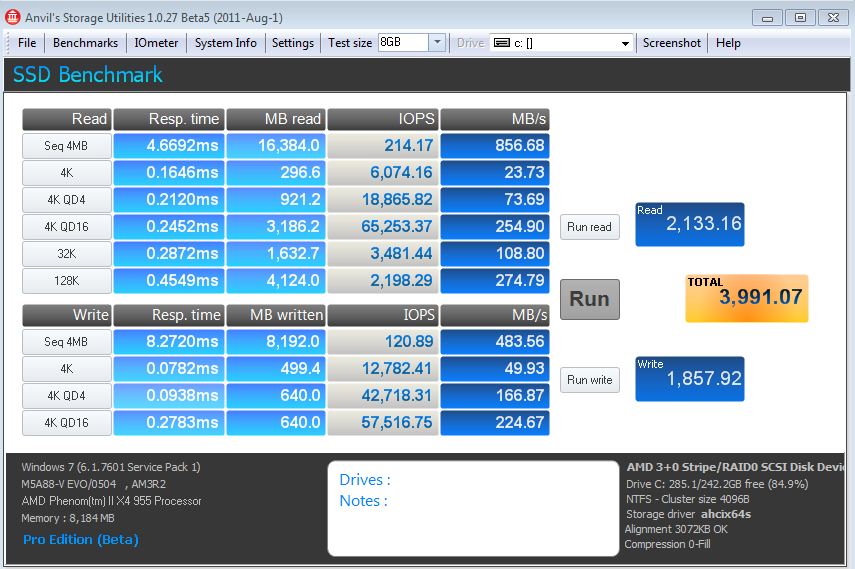

3x M4 128GB RAID 0, settled state

I ran the test twice. Both times I got a "Not Responding" error right at the beginning, but the test started back up after about 10 seconds. I'm not sure what numbers I "should" be getting, so I don't know the effect of that brief pause.

Great tool otherwise. Amazing work Anvil.

Just tried out the beta and had no issues running the benchmark with default settings. Nice benching utility Anvil.

C300 128GB running on Dell E6420 laptop:

C300-CTFDDAC128MAG_128GB_1GB-20110809-1217.png

Last edited by bigretard21; 08-09-2011 at 08:21 AM.

nice result you big retard!

sorry...had to say it once

^^

24/7 Cruncher #1

Crosshair VII Hero, Ryzen 3900X, 4.0 GHz @ 1.225v, Arctic Liquid Freezer II 420 AIO, 4x8GB GSKILL 3600MHz C15, ASUS TUF 3090 OC

Samsung 980 1TB NVMe, Samsung 870 QVO 1TB, 2x10TB WD Red RAID1, Win 10 Pro, Enthoo Luxe TG, EVGA SuperNOVA 1200W P2

24/7 Cruncher #2

ASRock X470 Taichi, Ryzen 3900X, 4.0 GHz @ 1.225v, Arctic Liquid Freezer 280 AIO, 2x16GB GSKILL NEO 3600MHz C16, EVGA 3080ti FTW3 Ultra

Samsung 970 EVO 250GB NVMe, Samsung 870 EVO 500GB, Win 10 Ent, Enthoo Pro, Seasonic FOCUS Plus 850W

24/7 Cruncher #3

GA-P67A-UD4-B3 BIOS F8 mod, 2600k (L051B138) @ 4.5 GHz, 1.260v full load, Arctic Liquid 120, (Boots Win @ 5.6 GHz per Massman binning)

Samsung Green 4x4GB @2133 C10, EVGA 2080ti FTW3 Hybrid, Samsung 870 EVO 500GB, 2x1TB WD Red RAID1, Win10 Ent, Rosewill Rise, EVGA SuperNOVA 1300W G2

24/7 Cruncher #4 ... Crucial M225 64GB SSD Donated to Endurance Testing (Died at 968 TB of writes...no that is not a typo!)

GA-EP45T-UD3LR BIOS F10 modded, Q6600 G0 VID 1.212 (L731B536), 3.6 GHz 9x400 @ 1.312v full load, Zerotherm Zen FZ120

OCZ 2x2GB DDR3-1600MHz C7, Gigabyte 7950 @1200/1250, Crucial MX100 128GB, 2x1TB WD Red RAID1, Win10 Ent, Centurion 590, XFX PRO650W

Music System

SB Server->SB Touch w/Android Tablet as a remote->Denon AVR-X3300W->JBL Studio Series Floorstanding Speakers, JBL LS Center, 2x SVS SB-2000 Subs
