Regarding the X25-V, Intel says:

http://download.intel.com/design/fla...hts/322736.pdf

So 365 days x 5 years x 20GB/day = 36,500GB = 36.5TB
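
As a quick check of that arithmetic, here is a minimal sketch. It assumes the 20 means 20GB of host writes per day and the 5 means five years, which is what the 36.5TB result implies; the function name is only for illustration.

```python
def rated_host_writes_tb(gb_per_day: float, years: float, days_per_year: int = 365) -> float:
    """Total host writes in TB (decimal, 1TB = 1000GB) for a daily write budget."""
    return gb_per_day * days_per_year * years / 1000


# Assumed reading of the post: 20GB/day for 5 years
total_tb = rated_host_writes_tb(gb_per_day=20, years=5)
print(f"{total_tb} TB")
```

Changing `gb_per_day` shows how quickly the total moves with the daily budget.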

The key word there is "minimum". What is being measured is more like maximum, or at least typical maximum.

endurance is not a predetermined moment in time guys. that's a big point here. it is when the unrecoverable bit errors pass a certain pre-defined threshold. but that threshold itself is not a fixed moment. it depends upon the quality of ECC, etc.

here is a document you MUST read. seriously, it opened my eyes big time. Once you read this you will realize that their definition of endurance is WAY below the usable threshold of the drive.

from idf 2011 a few months back

Solid-State Drives (SSD) in the Enterprise: Myths and Realities

it is session SSDS003. read the PDF please, i beg you. Ao1 linked it above, but no one read it apparently.

https://intel.wingateweb.com/bj11/sc...og.jsp?sy=8550

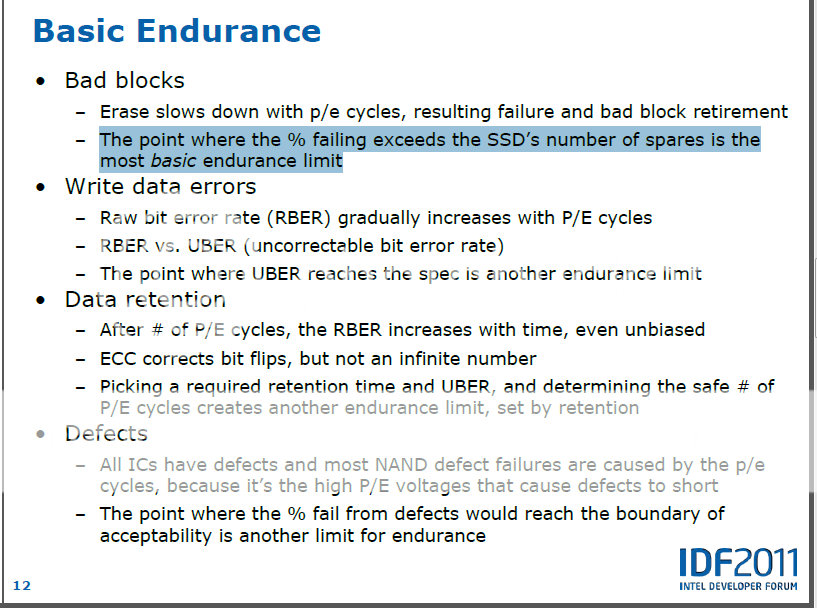

endurance is defined as:

Last edited by Computurd; 05-11-2011 at 08:41 PM.

"Lurking" Since 1977

Jesus Saves, God Backs-Up

*I come to the news section to ban people, not read complaints.* -[XC]Gomeler

Don't believe Squish, his hardware does control him!

That presentation is about endurance at the SSD level. The 5K P/E spec is at a lower level, that of the flash integrated circuits.

correct. but we are discussing endurance of the SSD here. as a whole. the nand is only as good as the controller, so for those too lazy to read i will put up a few shots here.

First: they aren't testing the drive correctly. the key parameter here is retention of data for a long period of time. not sure how well this device is retaining data at this point. so the test is a fun test, but not accurate.

Second: You must understand that the endurance numbers for Intel SSDs are extreme worst case scenarios. they bake the freaking drive for god's sake, then test retention. as i keep saying over and over, extreme worst case scenario.

Last edited by Computurd; 05-11-2011 at 07:44 PM.

im on a rant here, so follow along

here we show that increasing the LBA range does in fact hamper the performance of the drive, and reduce endurance. so that is why these drives can take a tremendous amount more than they are marketed as.

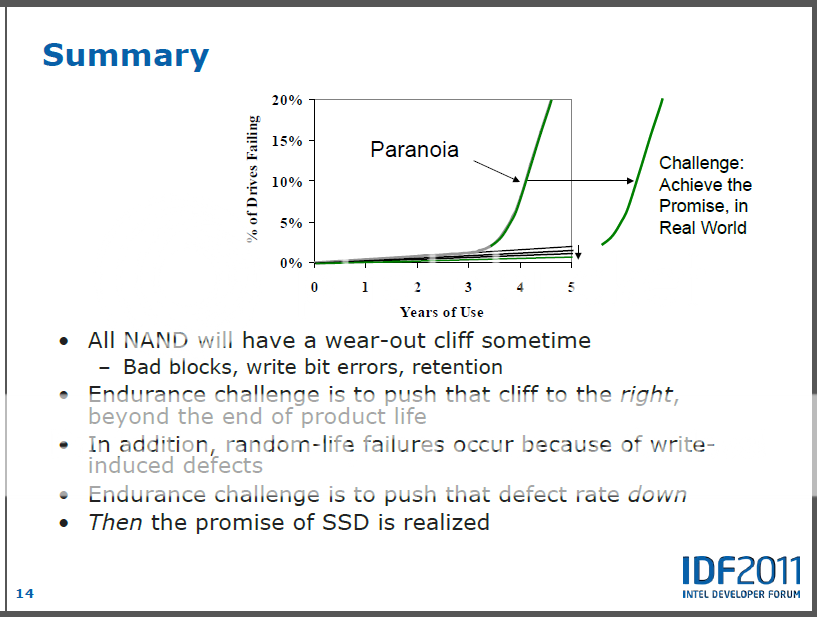

and the one below it is the bathtub curve of paranoia. fits this topic perfectly imo.

overall we are looking at 3 percent failure of the device at extreme circumstances in order to fall below the threshold of endurance. which is ridiculously high standards. the drives can survive long long long after that, and be usable. especially for us normal people LOL

There is no "correct" way to test a drive. It depends on what you are testing. I think it is reasonable to test by writing to the drive until it starts giving write errors. At that point, it would also be interesting to test whether all the data can be read back, and to let it sit for a while and test again. But just testing how much you can write is a reasonable test.

As for baking the drives, I think you misunderstand the test. They bake the drives to do accelerated lifetime testing. The basic idea is that you come up with a formula that says, say, 400 hours at 85C is equivalent to 30,000 hours at room temperature. That is how they can spec values at 5 years when the prototype is only a few months old. It does NOT mean that Intel is saying that the parts can work for 5 years at the elevated temperature.
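
One common form for that kind of formula is the Arrhenius model, sketched below. The post does not say which model Intel actually uses, so treat the model choice as an assumption; the 25C value for room temperature and the function names are also assumptions for illustration. The second function backs out the activation energy that the "400 hours at 85C equals 30,000 hours at room temperature" example would imply.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def _inverse_temp_gap(use_c: float, stress_c: float) -> float:
    """1/T_use - 1/T_stress with both temperatures converted to kelvin."""
    return 1.0 / (use_c + 273.15) - 1.0 / (stress_c + 273.15)


def acceleration_factor(ea_ev: float, use_c: float, stress_c: float) -> float:
    """Arrhenius acceleration factor: how many use-hours one stress-hour represents."""
    return math.exp(ea_ev / BOLTZMANN_EV_PER_K * _inverse_temp_gap(use_c, stress_c))


def implied_activation_energy(factor: float, use_c: float, stress_c: float) -> float:
    """Activation energy (eV) that would produce the given acceleration factor."""
    return math.log(factor) * BOLTZMANN_EV_PER_K / _inverse_temp_gap(use_c, stress_c)


# Hypothetical numbers from the post: 400 h at 85C standing in for 30,000 h at ~25C
factor = 30_000 / 400
ea = implied_activation_energy(factor, use_c=25.0, stress_c=85.0)
print(f"acceleration factor {factor:.0f}x implies Ea of about {ea:.2f} eV")
```

Under those assumed temperatures, the example works out to a 75x acceleration factor.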

"They bake the drives to do accelerated lifetime testing."

i understand the process. much like they test many many other devices.

i'm not implying that they are spec'ing the drive at those temps. they have their own temp specs rated.

the point is this: they are doing accelerated writes in a very small amount of time (worst case scenario again) and doing it under extreme conditions that 99 percent of the drives will never see.

note: also they do non-accelerated room temp testing. they cover both bases here.

"At that point, it would also be interesting to test whether all the data can be read back, and to let it sit for a while and test again."

yes this would be the most interesting, because that is the root cause of nand death... it can't hold the electron anymore. holding it for short periods of time is easy, but with gate degradation the ability to maintain long term retention should become compromised, at least initially. that would be the beginning stages i would imagine.

extended torture write testing, then baking, then non accelerated room temp testing, THEN doing a bit error test after extended rest time. that should just about do it and catch the error rate under pretty much any scenario.

It would be nice to know the overall size of the file. If it was quite small and only wrote to a small span of the drive then effectively the rest of the drive was OP, which could explain it.

Regarding that health status on CDM I have no idea how that is calculated. If the drive is functioning correctly (no errors) does that make it healthy? The wear out indicator is working, but just because the drive is close to the end of its life does that make it unhealthy?

Hard to tell but it looks like the largest that test file could have been was ~280MB

• 10 ~ 100KB: 80% (system thumbnails and the like)

• 100 ~ 500KB: 10% (JPG images and the like)

• 1 ~ 5MB: 5% (big pictures, MP3s and the like)

• 5 ~ 45MB: 5% (video clips and the like)

5 x 45MB = 225MB

1 x 5MB = 5MB

100 x 500KB = 48.82MB

10 x 100KB = 0.97MB

Total = 279.79MB
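
The sum above can be reproduced with a few lines. This is only a sketch: it assumes 1MB = 1024KB (which is what the 48.82 and 0.97 figures suggest) and reads each line the same way the sum does, as a file count times a file size.

```python
KB_PER_MB = 1024  # assumption: binary megabytes, matching the 48.82 and 0.97 figures

# (file count, size of each file in KB), taken from the four products above
file_groups = [
    (5, 45 * KB_PER_MB),   # 5 x 45MB
    (1, 5 * KB_PER_MB),    # 1 x 5MB
    (100, 500),            # 100 x 500KB
    (10, 100),             # 10 x 100KB
]


def total_size_mb(groups):
    """Sum count * size over all groups and convert from KB to MB."""
    return sum(count * size_kb for count, size_kb in groups) / KB_PER_MB


total_mb = total_size_mb(file_groups)
print(f"{total_mb:.2f} MB")
```

This comes out at roughly 279.8MB; the tiny difference from 279.79 is down to how the intermediate values were rounded.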

Last edited by Ao1; 05-12-2011 at 12:32 AM.

Agreed. All companies make mistakes. The distinction is how companies fix those mistakes. The only complaint I would make against Intel is the lack of TRIM support for G1 drives; other than that it's hard to fault them. (In fairness they never said TRIM would be provided, but it was petty minded not to.)

Also a big difference between a genuine mistake and a deliberate deception.

Hi Anvil,

Intel think they can reproduce the experiment and are willing to try. They are open to suggestions from XS on how to run the experiment.

http://communities.intel.com/message/124701#124701

interesting the link to the other test, where another person is almost at a PB! i would chalk this up to intels being very conservative in estimates, the extreme low tolerance for failure in testing, and the conditions of the testing. very interesting nonetheless! makes me feel safer about write testing already

http://botchyworld.iinaa.net/ssd_x25v.htm

I'm in. Since I will be buying a new SSD to do this, will it make more sense to buy a different brand so perhaps we can do a comparison between them to see which fails first? Unfortunately I don't think we can do Sandforce because it is my understanding that it will throttle writes down to nothing and won't let me kill it... Perhaps a small M4?

That's great, One_Hertz

I'll have to check on the m4, there is probably an issue wrt retrieving "Host Writes" on both the C300 and the m4. (WD doesn't support/report host writes either)

The SandForce and Intels are both supporting this and some report both host read and host writes.

There is probably a way to circumvent the SF throttling issue but it would require cleaning the drive at least once a week. (only takes a few seconds using the toolbox)

I cannot guarantee that the cleaning would work though.

I'll check the Crucials and let you know...

-

Hardware:

Sounds good. I am not sure we can entirely get around the SF write throttling... I could get an Intel 320 40GB for just ~100$ right now. A 64GB M4 would be ~150$.

We do not NEED to have the SMART info giving us the writes. If it is done via IOMeter, then IOMeter will provide us with the amount of writes (unless the whole system decides to crash upon SSD failure, which may happen).

It would be interesting to see an X25-V (34nm) vs a 320 (25nm).

How will you run the test? With static data? How large a test file overall? What size files? Random vs sequential? TRIM/ no TRIM?

I've checked and there are some metrics on both the C300 and the m4 that are of use

05 - Reallocated sectors count

AA - Growing Failing Block Count

AD - Wear Levelling Count

BB - Reported Uncorrectable Errors

BD - Factory Bad Block Count

C4 - Reallocation Event Count

C5 - Current Pending Sector Count

CA - Percentage Of The Rated Lifetime Used

These are reported by the CDI 4.0 Alpha. (if the naming is correct)
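
For anyone logging these with a tool that prints attribute IDs in decimal (smartctl does, for example), here is a small sketch that keeps the list above in one place and converts the hex IDs. The dictionary and function names are made up for illustration, and the attribute names are simply the ones listed above.

```python
# Hex attribute ID -> name, as listed above for the C300 and m4
CRUCIAL_SMART_ATTRIBUTES = {
    "05": "Reallocated Sectors Count",
    "AA": "Growing Failing Block Count",
    "AD": "Wear Levelling Count",
    "BB": "Reported Uncorrectable Errors",
    "BD": "Factory Bad Block Count",
    "C4": "Reallocation Event Count",
    "C5": "Current Pending Sector Count",
    "CA": "Percentage Of The Rated Lifetime Used",
}


def to_decimal_ids(attributes):
    """Return the same table keyed by decimal attribute ID."""
    return {int(hex_id, 16): name for hex_id, name in attributes.items()}


decimal_table = to_decimal_ids(CRUCIAL_SMART_ATTRIBUTES)
for attr_id in sorted(decimal_table):
    print(f"{attr_id:3d}  {decimal_table[attr_id]}")
```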

Host writes aren't reported, and it doesn't look like they can be calculated using known/official methods?

The Intel 320 would be great as it's a 25nm drive.

As you say, there are other methods of recording Host Writes but SMART would be the better option.

I'm working on a storage benchmark/utility and I could easily integrate both reporting and the actual process of generating the writes, logged to another drive

This is the readout using the latest CDI Alpha

c300_smart_info.jpg

m4_smart_info.jpg

edit

@Ao1

Not sure yet about how but thats open for discussion

Last edited by Anvil; 05-12-2011 at 09:50 AM.

I just ordered a 40GB 320.

The test file should be about 75% of the size of the SSD? That seems realistic to me. Nobody keeps their SSD empty, but nobody has theirs full either. It should be some sort of mix of various blocks sizes with emphasis on 4k... mix of random and sequential.

Ao1 - you had logged 2 weeks of activity at some point using hiomon. Where is this info again?

Without checking log files I'd have a guess at something like this:

- 4K: 80% / 80% sequential, 20% random

- 8K: 10% / 50% sequential, 50% random

- 1K: 2% / random

- 1MB: 3% / sequential

- 3MB: 2.5% / sequential

- 6MB: 2.5% / sequential

Maybe 50% static data and a total file size of 25%?
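
To sanity check a guess like that before turning it into an access spec, a rough sketch such as the one below can confirm the shares add up to 100% and show the overall sequential share of requests. The `Transfer` class and function names are hypothetical; the numbers are the guessed mix above, not measured data.

```python
from dataclasses import dataclass

KIB = 1024
MIB = 1024 * KIB


@dataclass
class Transfer:
    size_bytes: int        # request size
    share_pct: float       # share of all requests
    sequential_pct: float  # portion of those requests that are sequential


# The guessed mix from the post (not from log files)
guessed_mix = [
    Transfer(4 * KIB, 80.0, 80.0),
    Transfer(8 * KIB, 10.0, 50.0),
    Transfer(1 * KIB, 2.0, 0.0),
    Transfer(1 * MIB, 3.0, 100.0),
    Transfer(3 * MIB, 2.5, 100.0),
    Transfer(6 * MIB, 2.5, 100.0),
]


def total_share(mix):
    """Sum of request shares; should be 100 for a complete mix."""
    return sum(t.share_pct for t in mix)


def sequential_share(mix):
    """Percentage of all requests that are sequential."""
    return sum(t.share_pct * t.sequential_pct for t in mix) / 100


print(f"shares add up to {total_share(guessed_mix)}%")
print(f"sequential requests: {sequential_share(guessed_mix)}%")
```

With these numbers the mix is complete and about 77% of requests are sequential, counted per request rather than per byte.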

Sandisk wrote an article about this http://www.sandisk.com/media/65675/LDE_White_Paper.pdf

"One statistic of interest is the distribution of request size of the writes, which is as shown in Figure 2a for our sample user trace. Note that over 53% of the writes are for 8 sectors (4KB); 8.9% are for 128 sectors (64KB), 5.7% are for 16 sectors (8KB), and 4.9% are for 32 sectors (16KB). However, note that 16.7% are not multiples of 4KB."

Why would they reference a trace from a non 4K aware OS (XP) using a HDD with only 1GB of RAM?

Nice info though. I see they say 29% of all write commands are sequential.

Everyone's going to have a different workload. At least this one is documented. Just a shame it was on an antiquated set up.

Well, I've had the fancycache statistics menu running for about six hours. It has tracked roughly 3GB of total writes, which works out to 12GB a day. With the fancycache write cache, writes to disk are cut down to almost half (~6.76GB/day), which means an X25-V would last ~15 years with my usage and setup.
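
That estimate can be checked with a short sketch. It assumes the 36.5TB figure worked out earlier in the thread is the write budget, and that the six hour sample is representative of a whole day; the function names are only for illustration.

```python
def daily_writes_gb(sample_gb: float, sample_hours: float) -> float:
    """Scale a short sample of writes up to a 24 hour day."""
    return sample_gb * 24 / sample_hours


def years_to_reach(rated_tb: float, gb_per_day: float) -> float:
    """Years needed to write rated_tb (decimal TB) at a steady daily rate."""
    return rated_tb * 1000 / gb_per_day / 365


RATED_TB = 36.5  # assumption: the 5 year x 20GB/day figure from earlier in the thread

uncached = daily_writes_gb(sample_gb=3, sample_hours=6)
print(f"without write cache: {uncached:.0f}GB/day, {years_to_reach(RATED_TB, uncached):.1f} years")
print(f"with write cache: 6.76GB/day, {years_to_reach(RATED_TB, 6.76):.1f} years")
```

At 6.76GB/day this gives a little under 15 years, and a bit over 8 years at the uncached 12GB/day.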
