No. Both a digital VRM and a traditional step-down buck converter can output any voltage from 0 to 12V. For both digital and analog designs, component selection (i.e. caps, inductors, diode/MOSFET rectifier) determines max current, transient response (i.e. ripple current), etc.
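To put rough numbers on that, here is a minimal sketch using the textbook ideal buck converter relations (continuous conduction, lossless parts). The function names and the example values (12V in, 1.2V out, 0.5 µH, 300 kHz) are my own assumptions for illustration, not figures from any particular card.

```python
def buck_duty_cycle(v_in, v_out):
    """Ideal buck converter duty cycle: D = Vout / Vin."""
    if v_in <= 0 or not 0 <= v_out <= v_in:
        raise ValueError("a buck converter needs 0 <= v_out <= v_in and v_in > 0")
    return v_out / v_in


def inductor_ripple_current(v_in, v_out, inductance_h, f_sw_hz):
    """Peak-to-peak inductor ripple: dI = (Vin - Vout) * D / (L * f_sw)."""
    duty = buck_duty_cycle(v_in, v_out)
    return (v_in - v_out) * duty / (inductance_h * f_sw_hz)


# Hypothetical single phase: 12V rail down to a 1.2V core voltage.
duty = buck_duty_cycle(12.0, 1.2)
ripple = inductor_ripple_current(12.0, 1.2, 0.5e-6, 300e3)
print(f"duty cycle ~ {duty:.2f}, ripple ~ {ripple:.1f} A peak-to-peak")
```

The output voltage is set by the duty cycle alone, which is why either type of controller can cover the whole 0 to 12V range; the inductor value and switching frequency are what set the ripple.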

Caps are tall and get in the way of heatsinks, so AMD's digital VRM is better for heatsinks. Multi-phase solutions are easier to link up, and they can better manage switching, i.e. reduce voltage/clocks/current if overheating.

It's kind of like old-school jumper/BIOS overclocking vs. using SetFSB in Windows - the latter is obviously easier and more convenient.

I've been wrong before, but I don't think so. TSMC 40nm is still far from "mainstream" and quite expensive. The only reason for AMD to drop down to $300 would be if Fermi launched at $300 - infinitesimally improbable.

24/7: A64 3000+ (\_/) @2.4Ghz, 1.4V

1 GB OCZ Gold (='.'=) 240 2-2-2-5

Giga-byte NF3 (")_(") K8NSC-939

XFX 6800 16/6 NV5 @420/936, 1.33V

► ASUS P8P67 Deluxe (BIOS 1305)

► 2600K @4.5GHz 1.27v , 1 hour Prime

► Silver Arrow , push/pull

► 2x2GB Crucial 1066MHz CL7 ECC @1600MHz CL9 1.51v

► GTX560 GB OC @910/2400 0.987v

► Crucial C300 v006 64GB OS-disk + F3 1TB + 400MB RAMDisk

► CM Storm Scout + Corsair HX 1000W

+

► EVGA SR-2 , A50

► 2 x Xeon X5650 @3.86GHz(203x19) 1.20v

► Megahalem + Silver Arrow , push/pull

► 3x2GB Corsair XMS3 1600 CL7 + 3x4GB G.SKILL Trident 1600 CL7 = 18GB @1624 7-8-7-20 1.65v

► XFX GTX 295 @650/1200/1402

► Crucial C300 v006 64GB OS-disk + F3 1TB + 2GB RAMDisk

► SilverStone Fortress FT01 + Corsair AX 1200W

Does anyone think a dual-GPU Fermi is possible at all (GTX470-based, with lowered clock speeds like the 5970) without requiring a nuclear power plant and generating as much heat as the surface of the sun?

Antec 900

Corsair TX750

Gigabyte EP45 UD3P

Q9550 E0 500x8 4.0 GHZ 1.360v

ECO A.L.C Cooler with Gentle Typhoon PushPull

Kingston HyperX T1 5-5-5-18 1:1

XFX Radeon 6950 @ 880/1300 (Shader unlocked)

WD Caviar Black 2 x 640GB - Short Stroked 120GB RAID0 128KB Stripe - 540GB RAID1

No, Fermi is the first mass-market GPU architecture to have:

#1: Parallel geometry setup

#2: Generalized, coherent read/write caching

Both are huge deals because of the engineering effort required and make a lot of things easier to do. Of course it doesn't mean squat if you just care about the fps that comes out the end.

My recommendation to ATI would be to adopt/adapt the CUDA api from nvidia and get on with it. Let's be honest, nvidia has the clout to raise a stink big enough for everyone to notice. Their connections run deep.

Unless ATI is planning an API release secretly. I really hope they aren't, waste of resources.

@triniboy: I'll check it out. This gives me something to research

Last edited by damha; 03-05-2010 at 11:44 AM.

I believe ATi could adapt CUDA easily, if they want to.

But I agree with you, we need a common API for accessing a GPU (just like AMD and Intel CPUs, which can run a common set of instructions and programs).

Somebody has to provide a common platform to take this to the next step, where everybody can have a personal supercomputer, but these guys (nVidia and ATi) are too busy fighting each other. Intel would actually like to see both of them dead and defeated in this area, because a great GPGPU platform can threaten Intel's dominance in that very expensive supercomputer market.

There are two other reasons to drop the price on the 5870 to $300:

- If the 480GTX comes in close to the 5870's current price/performance, a price cut would deny it any significant market share. If the 480GTX comes in at 5-10% higher performance than the 5870, would the average upgrader still choose it if the 5870 was 25% cheaper? Probably not.

- If AMD/ATI counters with a 5875 (or whatever 5870 rev2) at the same price point of the current card (to retain "performance crown"), the "old" 5870 wouldn't sell at all at the current price.
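The first scenario can be put in numbers with a quick sketch. It assumes performance and price are both normalized to 1.0, and that "25% cheaper" means 25% below the 480GTX's price; the function name is just for illustration.

```python
def perf_per_dollar_ratio(rival_perf_gain, own_price_cut):
    """Performance per dollar of the cheaper card relative to its rival.

    rival_perf_gain: rival's performance advantage as a fraction (0.10 = 10% faster).
    own_price_cut:   how much cheaper our card is as a fraction (0.25 = 25% cheaper).
    """
    rival_value = (1.0 + rival_perf_gain) / 1.0  # rival: faster, at full price
    own_value = 1.0 / (1.0 - own_price_cut)      # ours: baseline speed, discounted
    return own_value / rival_value


for gain in (0.05, 0.10):
    ratio = perf_per_dollar_ratio(gain, 0.25)
    print(f"480GTX {gain:.0%} faster, 5870 25% cheaper: "
          f"5870 gives {ratio - 1:.0%} more performance per dollar")
```

Under those assumptions the cheaper card comes out roughly 21-27% ahead on performance per dollar, which is the gap the average upgrader would be weighing.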

I'm inclined to say that it's very likely AMD/ATI would cut prices, though I'd probably bank on the cut being closer to $50 (I would be quite happy if it was $100).

ATI definitely has price flexibility right now. Don't forget that ATI prices have risen above launch MSRP. The cards are cheaper to produce, they've had them for months, and they've had better yields from the start (6 months ago).

Thing is, if they don't feel threatened by Fermi, they probably won't :/. MSRP for the 5850 at launch was $259; it stands at $300+ now.

Yep, exactly. These are very big deals in GPU evolution (if not revolution) right now. All GPUs have a HUGE amount of GFLOPS (compared to a CPU), but how do you control/program the beast to do something more useful than just gaming?

That dedicated L1 cache plays a big role in making life much easier when you program/control the beast. It makes it possible to have a unified read/write cache, which allows program correctness and is a key feature for supporting generic C/C++ programs.

Fermi is a complete arch redesign, like G80 & R350. The focus is on cache, compute, & GPGPU programmability. Cypress is RV770 with ALUs & ROPs doubled and the scheduler & setup redesigned to effectively use the new resources.

Bring... bring the amber lamps.

Intel i7-2700k@ 4.7ghz (46x102)

Asus P8Z68 Deluxe GEN3

G.Skill 2x4gb RipjawX 2133 11-11-11-30

GTX 680 1220/7000

Corsair TX750W

Razer Lachesis w/ Razer Pro|Pad

1x160gb Seagate HDD, 2x1tb Seagate HDD

LG 22" 226WTQ & BenQ G2400WD

Windows 7 Ultimate x64 SP1

It's not a G71->G80 jump, but it's not a G92->GT200 one either

Not sure if this has been posted before. Card is supposedly the GTX470

Yeah, it's been posted

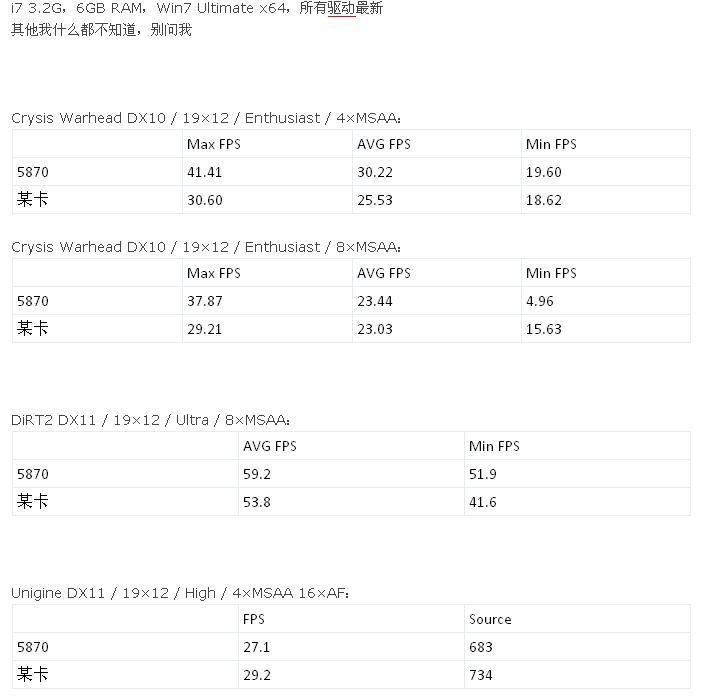

It's been posted, but I just checked AnandTech's review and the 5870 gets 38.5 fps (on average, I presume) in Warhead @ 19x12 4xAA Enthusiast... Granted, AnandTech's setup has a faster i7 (+130MHz?), but I don't think that's enough for a ~25% increase in frames. I was trying to look for a review with 8xAA but found none atm.

edit:

Of course it depends on the part of the game that was run, but I was thinking they used the built-in benchmarking tool.
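For anyone who wants to redo the comparison with their own numbers, a small sketch of the arithmetic. The 38.5 fps baseline and the ~25% gap are the figures quoted above; the 48.1 fps it prints is only what that gap implies, not a measured result, and the helper names are made up.

```python
def percent_increase(baseline_fps, other_fps):
    """How much higher other_fps is than baseline_fps, in percent."""
    return (other_fps - baseline_fps) / baseline_fps * 100.0


def implied_fps(baseline_fps, increase_percent):
    """Frame rate implied by a given percentage increase over a baseline."""
    return baseline_fps * (1.0 + increase_percent / 100.0)


baseline = 38.5  # 5870 average quoted from the AnandTech review
print(f"a ~25% increase over {baseline} fps implies about "
      f"{implied_fps(baseline, 25.0):.1f} fps")
```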

Last edited by insurgent; 03-05-2010 at 03:54 PM.
