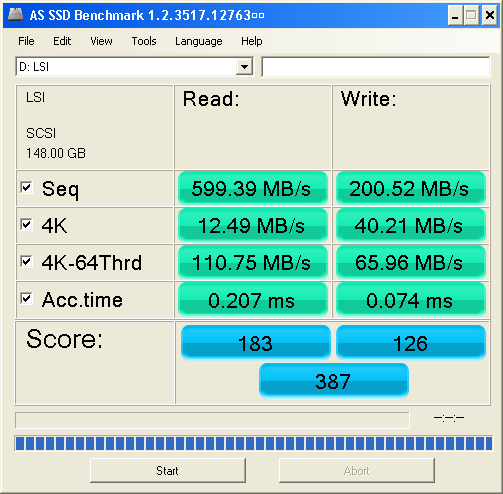

Hmm, judging from Tom's random file I/O tests, I don't find either LSI card to be bad at all.

Do you realize that a 0.1 ms access time works out to about 10,000 IOPS? And Tom's charts show more than that.
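To spell out the arithmetic, here is a rough sketch of the conversion. It assumes each request takes the full access time and that a fixed number of requests are in flight at once. The function name and the `queue_depth` parameter are mine, not anything from Tom's test setup.

```python
def iops_from_latency(latency_ms, queue_depth=1):
    """Estimate IOPS from average access time (hypothetical helper).

    Assumes every request takes `latency_ms` milliseconds and that
    `queue_depth` requests are outstanding at all times.
    """
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    if queue_depth < 1:
        raise ValueError("queue_depth must be at least 1")
    # 1000 ms per second divided by ms per request gives requests per second.
    return queue_depth * 1000.0 / latency_ms


if __name__ == "__main__":
    # One outstanding request at 0.1 ms access time.
    print(round(iops_from_latency(0.1)))
    # Same access time with four requests in flight.
    print(round(iops_from_latency(0.1, queue_depth=4)))
```

With more than one request in flight, the same access time gives proportionally more IOPS under this simple model, which may be one reason a chart can show more than 10,000.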

http://media.bestofmicro.com/A/N/219...r_database.png

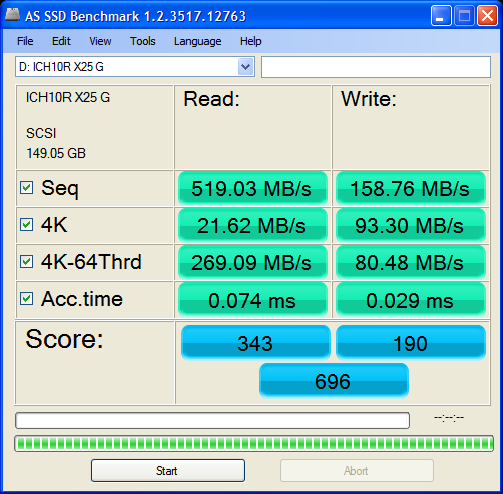

Now I don't see why you say the access time is correct rather
