i7 920@4.34 | Rampage II GENE | 6GB OCZ Reaper 1866 | 8800GT (zzz) | Corsair AX750 | Xonar Essence ST w/ 3x LME49720 | HiFiMAN EF2 Amplifier | Shure SRH840 | EK Supreme HF | Thermochill PA 120.3 | MCP355 | XSPC Reservoir | 3/8" ID Tubing

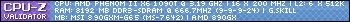

Phenom 9950BE @ 3400/2000 (CPU/NB) | Gigabyte MA790GP-DS4H | HD4850 | 4GB Corsair DHX @850 | Corsair TX650W | T.R.U.E Push-Pull

E2160 @3.06 | ASUS P5K-Pro | BFG 8800GT | 4GB G.Skill @ 1040 | 600W Tt PP

A64 3000+ @2.87 | DFI-NF4 | 7800 GTX | Patriot 1GB DDR @610 | 550W FSP

Particle's First Rule of Online Technical Discussion:

Rule 2:

Rule 2A:

), I'd get it. I don't really care about power consumption; I have a 1250W power supply for a reason. I don't really care about heat either; that's what my watercooling loops are for.
