FIVE TRILLION digits..

http://www.numberworld.org/misc_runs...nounce_en.html

Crunch with us, the XS WCG team

The XS WCG team needs your support.

A good project with good goals.

Come join us,get that warm fuzzy feeling that you've done something good for mankind.

thats XTREME

i would like to point out that his machine is still counting slower than our national debt, lol

also, how do you compress a file with numbers that seem random?

The XS thread for the y-cruncher app is here:

http://www.xtremesystems.org/forums/...d.php?t=221773

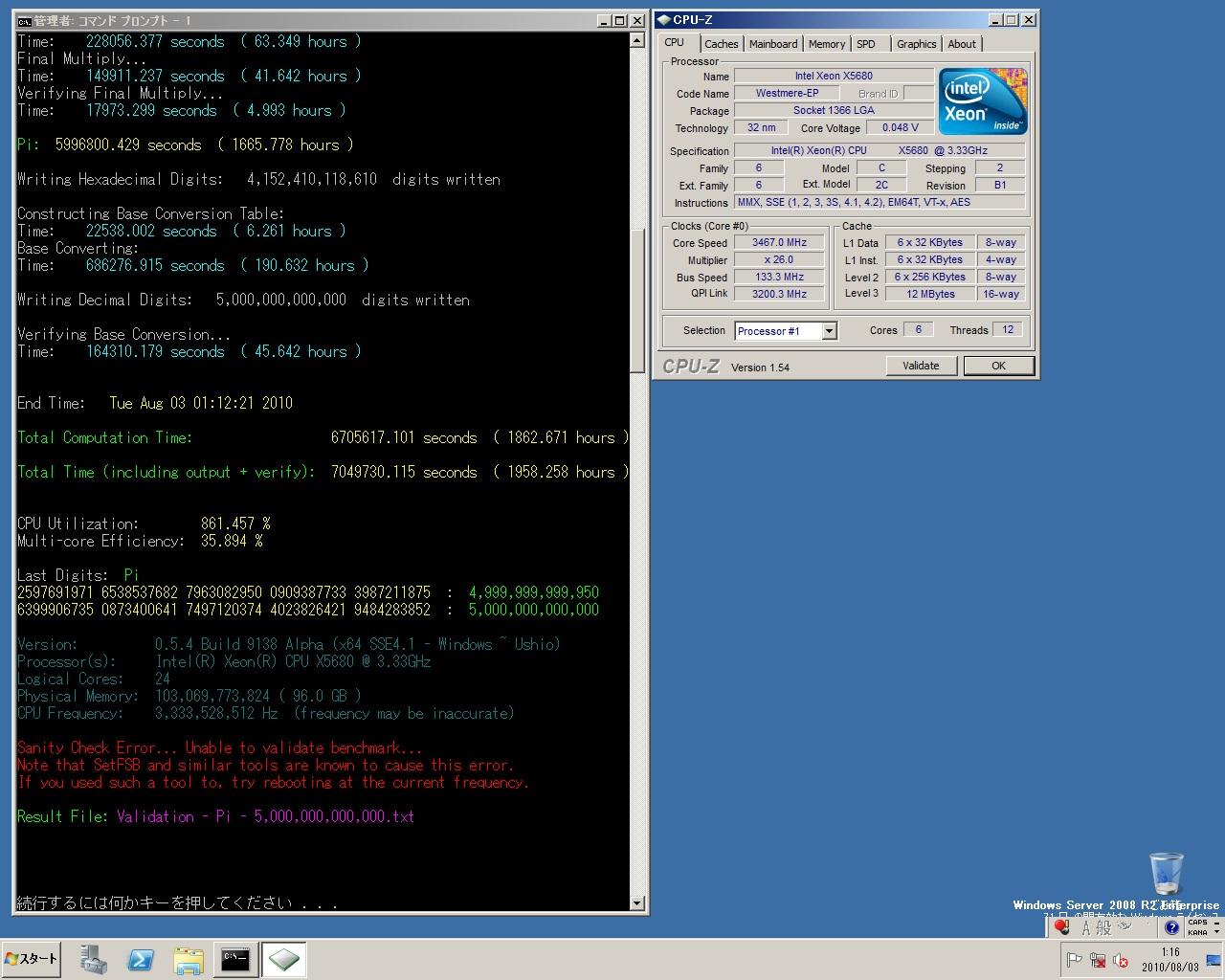

Here's a couple of screenshots from the run:

Ignore the sanity check error and the low CPU utilization % - they are artifacts from splitting the computation into multiple chunks.

Main Machine:

AMD FX8350 @ stock --- 16 GB DDR3 @ 1333 MHz --- Asus M5A99FX Pro R2.0 --- 2.0 TB Seagate

Miscellaneous Workstations for Code-Testing:

Intel Core i7 4770K @ 4.0 GHz --- 32 GB DDR3 @ 1866 MHz --- Asus Z87-Plus --- 1.5 TB (boot) --- 4 x 1 TB + 4 x 2 TB (swap)

Am I wrong, or was this run with a development version of y-cruncher (doing the calculation in multiple chunks and resuming)? And is this the ETA poke349 hinted at in the y-cruncher thread?

i wonder how this program would run on an ibm power system. in the next half year i'll get my hands on a power7 system with fully equipped building blocks for evaluation purposes. i'd love to penetrate that beast with something like that

Last edited by RaZz!; 08-02-2010 at 12:02 PM.

1. Asus P5Q-E / Intel Core 2 Quad Q9550 @~3612 MHz (8,5x425) / 2x2GB OCZ Platinum XTC (PC2-8000U, CL5) / EVGA GeForce GTX 570 / Crucial M4 128GB, WD Caviar Blue 640GB, WD Caviar SE16 320GB, WD Caviar SE 160GB / be quiet! Dark Power Pro P7 550W / Thermaltake Tsunami VA3000BWA / LG L227WT / Teufel Concept E Magnum 5.1 // SysProfile

2. Asus A8N-SLI / AMD Athlon 64 4000+ @~2640 MHz (12x220) / 1024 MB Corsair CMX TwinX 3200C2, 2.5-3-3-6 1T / Club3D GeForce 7800GT @463/1120 MHz / Crucial M4 64GB, Hitachi Deskstar 40GB / be quiet! Blackline P5 470W

Last edited by Vinas; 08-02-2010 at 12:27 PM.

Current: AMD Threadripper 1950X @ 4.2GHz / EK Supremacy/ 360 EK Rad, EK-DBAY D5 PWM, 32GB G.Skill 3000MHz DDR4, AMD Vega 64 Wave, Samsung nVME SSDs

Prior Build: Core i7 7700K @ 4.9GHz / Apogee XT/120.2 Magicool rad, 16GB G.Skill 3000MHz DDR4, AMD Saphire rx580 8GB, Samsung 850 Pro SSD

Intel 4.5GHz LinX Stable Club

Antec 900

Corsair TX750

Gigabyte EP45 UD3P

Q9550 E0 500x8 4.0 GHZ 1.360v

ECO A.L.C Cooler with Gentle Typhoon PushPull

Kingston HyperX T1 5-5-5-18 1:1

XFX Radeon 6950 @ 880/1300 (Shader unlocked)

WD Caviar Black 2 x 640GB - Short Stroked 120GB RAID0 128KB Stripe - 540GB RAID1

http://digg.com/programming/Pi_World...rillion_Digits

guess this frenchman only got to hold the record for half a year..

http://www.physorg.com/news182067503.html

i7 920 C0 @ 3.5Ghz

Asus P6T Deluxe

3x2GB G.Skill DDR3 1600 9-9-9-24

HD4870x2

Intel x25-m G2 80GB

2x WD Caviar Black 640GB

Samsung Spinpoint F1 1TB

PC P&C 750W

I'm wondering how much time we could cut off of that with a pair of the same X5680's in a SR2 board running at close to 5GHz..

Yay I beat a record! My own! j/k

If you have enough hard drives and enough patience, you can pretty much do as much as you want - 10 trillion if you want.

Memory quantity only affects the speed, not the max # of digits.

ASCII is actually a pretty "wasteful" format to store digits. So this "compression" is just getting rid of that "waste".

But otherwise, you are correct. The digits won't compress at all beyond a certain point.
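To put a number on that "waste": an ASCII digit takes 8 bits, but a decimal digit only carries log2(10) ≈ 3.32 bits of information. Below is a minimal sketch of one way to pack digits more tightly, assuming 19 decimal digits per 64-bit word (19 is the most that fits, since 10^19 < 2^64). The function names are made up for illustration, and this is not claimed to be the exact format y-cruncher writes.

```python
import math

def pack_digits(digits: str, group: int = 19) -> bytes:
    """Pack a string of decimal digits into 8-byte words, `group` digits per word."""
    out = bytearray()
    for i in range(0, len(digits), group):
        chunk = digits[i:i + group]
        # Up to 19 decimal digits always fit in an unsigned 64-bit word.
        out += int(chunk).to_bytes(8, "little")
    return bytes(out)

def unpack_digits(data: bytes, total: int, group: int = 19) -> str:
    """Inverse of pack_digits; `total` is the original digit count."""
    parts = []
    remaining = total
    for i in range(0, len(data), 8):
        value = int.from_bytes(data[i:i + 8], "little")
        width = min(group, remaining)
        # zfill restores leading zeros that int() dropped.
        parts.append(str(value).zfill(width))
        remaining -= width
    return "".join(parts)

# Bytes per digit: ASCII vs. this packing vs. the information-theoretic floor.
ASCII_BYTES_PER_DIGIT = 1.0
PACKED_BYTES_PER_DIGIT = 8 / 19            # about 0.421
FLOOR_BYTES_PER_DIGIT = math.log2(10) / 8  # about 0.415
```

So this kind of packing already lands within about 1.5% of the floor, which is why random-looking digits can't be squeezed much further by a general-purpose compressor.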

I wish... But standards don't change that easily.

If you can convince HWbot to take a look at y-cruncher, then there might be a chance... (I have added validation and internal checks to block timer-hack cheats for the purpose of benchmarking...)

But seeing as how MaxxMem (which even has a GUI and HWbot integrated validation) still doesn't award points after 7 months... lol

Yes, it's a development version. But now it's public. The ability to stop and resume, and break it up into chunks was added in v0.5.4 - which I've also released along with this record announcement.

So yes, I've been specifically waiting for this computation to finish to release v0.5.4.

EDIT: Shigeru Kondo and I had originally intended this to be a single contiguous run. But we had a hardware error occur roughly 8 days into the computation. (YES... we had a hardware error on NON-OVERCLOCKED hardware...)

The program detected the error but wasn't able to recover from it. So we had to kill the program and restart it from the last save point.

In all, the computation was broken into only 2 chunks: before and after the computation error.

Right from the start, we both anticipated some sort of hardware failure to occur at some point (especially with 16 HDs...), so I added the ability to restart a computation - and are we glad I added that feature... lolz
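For anyone curious what "restart from the last save point" looks like in general, here is a toy sketch of the checkpoint/resume pattern. It is not y-cruncher's code: the file layout, the function names, and the stand-in workload (a sum of squares) are all assumptions for illustration. The idea is just to persist the state periodically and atomically, so a crash only costs the work since the last save.

```python
import json
import os
import tempfile

def save_checkpoint(path, state):
    """Write the state to a temp file, then atomically swap it into place."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, path)  # a crash mid-write never corrupts the old checkpoint

def load_checkpoint(path):
    """Return the saved state, or a fresh one if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"next_term": 0, "partial_sum": 0}

def run(path, n_terms, save_every=1000, fail_at=None):
    """Sum k*k for k < n_terms, resuming from the checkpoint if there is one."""
    state = load_checkpoint(path)
    for k in range(state["next_term"], n_terms):
        if fail_at is not None and k == fail_at:
            raise RuntimeError("simulated hardware error")
        state["partial_sum"] += k * k
        state["next_term"] = k + 1
        if state["next_term"] % save_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state["partial_sum"]

def demo():
    """Crash partway through, then resume and finish in a second 'chunk'."""
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "checkpoint.json")
        try:
            run(path, 5000, fail_at=2500)
        except RuntimeError:
            pass  # the last save point was at term 2000
        return run(path, 5000)
```

In the demo, the second call picks up at term 2000 rather than 0, so only the 500 terms between the last save and the failure get redone.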

As of right now, it won't run well because a large scale power7 server is NUMA and the program isn't optimized for it.

Also, I would need to specially re-optimize much of the low-level code for power7. (right now, a lot of it is hard-coded SSE2 - SSE4.1 which power7 doesn't have)

I can get it to compile on almost anything if I disable all the SSE and all x86/x64-specific stuff, but that also kills the efficiency...

My workstation/dev-station has 64 GB (see siggy). It can do a 12,000,000,000 digit run all in ram. I use this machine to write and test this program.

But since the program can use the disk(s), the quantity of ram has little effect on the max # of digits you can do. It only affects the computation speed...

I'm not sure it will help at all.

At these sizes, only 3 things seem to matter: (in order of most to least important)

- Disk Bandwidth

- Memory Quantity

- Memory Bandwidth

The first one can easily be fixed by cramming in as many hard drives as you can. (which is exactly what we did: 16 x 2TB 7200RPM Seagates for 2GB/s sequential bandwidth)

But the latter two will be limited by the hardware.

The SR-2 only supports up to 48 GB of ram.

So:

2 x X5680 @ 3.33 GHz + 96 GB ram

will probably still beat

2 x X5680 @ 5 GHz + 48 GB ram

It might be possible to pull ahead if you aggressively overclock the ram and throw in even more hard drives... but getting it stable for 90 days is a different matter.

Last edited by poke349; 08-02-2010 at 05:35 PM.

how does hard drive speed limit this? it took 2 months to fill up a few drives

At this size (or any size that doesn't fit in ram), you need to do arithmetic on the disk itself. So in some sense, it uses the disk as memory.

And since disk speed is really slow (~100 MB/s per disk vs. ~30 GB/s for 2 x i7 bandwidth) it immediately becomes a limiting factor if you try to use the disk like ram.
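Some back-of-the-envelope arithmetic on those figures, as a rough sketch. The per-disk and memory bandwidths are the approximate numbers quoted above; the 1 TB working set is a made-up round number for illustration, not a measurement from the run.

```python
def seconds_per_pass(data_bytes, bandwidth_bytes_per_s):
    """Time to stream the whole data set once at a given sustained bandwidth."""
    return data_bytes / bandwidth_bytes_per_s

DISK_BW = 100e6     # ~100 MB/s per disk (figure quoted above)
RAM_BW = 30e9       # ~30 GB/s memory bandwidth (figure quoted above)
N_DISKS = 16        # number of drives used in the record run
WORKING_SET = 1e12  # hypothetical 1 TB of data, for illustration only

one_disk = seconds_per_pass(WORKING_SET, DISK_BW)           # 10000 s, ~2.8 hours
striped = seconds_per_pass(WORKING_SET, N_DISKS * DISK_BW)  # 625 s
in_ram = seconds_per_pass(WORKING_SET, RAM_BW)              # ~33 s

print(f"1 disk:   {one_disk:8.0f} s per pass")
print(f"16 disks: {striped:8.0f} s per pass")
print(f"RAM:      {in_ram:8.0f} s per pass")
```

Even with 16 drives striped together, one pass over the data is roughly 19x slower than it would be in memory, and a big multiplication needs many passes. That is where the months go.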

i thought it would work on a few million numbers at a time, save them off and continue on.

congrats! a new benchmark eh? and more stressful than prime95? i'll give it a try!

thanks poke for all the work you put into this, great stuff!

if what you need is disk bandwidth and not disk capacity, then why aren't you using ssds?

if you use several PCIe ssds you should be able to double or quadruple that bandwidth

if cost is an issue, contact ocz or other hw manufacturers, they might actually be interested...

Congrats! Wondering how one of my work rigs would stack up to this. Might tool around with it later. Any chance of a Linux binary to run instead of Windows?

This is amazing stuff, but it is more amazing that Pi is over 2000 years old and we can't calculate the exact value of Pi yet, not even after FIVE TRILLION digits. It means our knowledge and understanding of the logic behind the circle is still very primitive, indeed.

Last edited by Sam_oslo; 08-02-2010 at 08:00 PM.

► ASUS P8P67 Deluxe (BIOS 1305)

► 2600K @4.5GHz 1.27v , 1 hour Prime

► Silver Arrow , push/pull

► 2x2GB Crucial 1066MHz CL7 ECC @1600MHz CL9 1.51v

► GTX560 GB OC @910/2400 0.987v

► Crucial C300 v006 64GB OS-disk + F3 1TB + 400MB RAMDisk

► CM Storm Scout + Corsair HX 1000W

+

► EVGA SR-2 , A50

► 2 x Xeon X5650 @3.86GHz(203x19) 1.20v

► Megahalem + Silver Arrow , push/pull

► 3x2GB Corsair XMS3 1600 CL7 + 3x4GB G.SKILL Trident 1600 CL7 = 18GB @1624 7-8-7-20 1.65v

► XFX GTX 295 @650/1200/1402

► Crucial C300 v006 64GB OS-disk + F3 1TB + 2GB RAMDisk

► SilverStone Fortress FT01 + Corsair AX 1200W
