Originally Posted by supremelaw

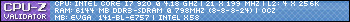

I'm thinking back to several discussions we all had when the Gigabyte i-RAM Box was released with a SATA-I interface:

Many of us were saying that "RAM should SATURATE the SATA interface".

In other words, using any RAM regardless of age (DDR, DDR2, DDR3) should automatically increase transfer speeds in proportion to the interface speed, e.g. from 150 to 300 MB/second.

Then, along those same lines, we expected that running 2 such devices in RAID 0 would nearly double throughput.

What was missing from those discussions, imho, was a realization that each end of a SATA cable is controlled by firmware, software and hardware logic which varies considerably in efficiency, but we haven't developed very good tools to isolate measurements of that particular efficiency.

Just to illustrate with a very simple example, we scaled an inexpensive 16 GB Super Talent SSD from 1 to 2 drives, the latter in RAID 0, and the "raw reads" increased from 130 to 150 MB/second -- not even close to a linear scaling result. The controller is the Highpoint RocketRAID 2340 using x8 PCI-Express lanes in an ASUS P5W64 WS Professional. Using these SSDs also eliminated the seek times and rotational latencies inherent in conventional rotating disk drives.

So, what is the cause of the "penalty" that prevented linear scaling from 130 to 260 MB/second with that simple configuration?
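To put a number on that "penalty", here is a minimal Python sketch that compares the observed RAID 0 read rate with ideal linear scaling. The 130 and 150 MB/second figures come from the measurement above; the function name `scaling_efficiency` is purely illustrative, and "ideal" is assumed to mean N drives x the single-drive rate.

```python
def scaling_efficiency(single_mb_s: float, array_mb_s: float, drives: int) -> float:
    """Fraction of ideal linear scaling actually achieved by an N-drive array.

    Ideal RAID 0 read throughput is assumed to be drives * single-drive rate.
    """
    ideal = single_mb_s * drives
    return array_mb_s / ideal


single = 130.0  # MB/second, one 16 GB Super Talent SSD (measured in the post)
pair = 150.0    # MB/second, two of them in RAID 0 (measured in the post)

eff = scaling_efficiency(single, pair, drives=2)
gain_from_second_drive = pair - single  # extra MB/second the second drive added

print(f"ideal: {single * 2:.0f} MB/s, observed: {pair:.0f} MB/s")
print(f"scaling efficiency: {eff:.1%}")
print(f"gain from second drive: {gain_from_second_drive:.0f} MB/s")
```

By this measure the second drive added 20 MB/second instead of the expected 130 MB/second, so the pair reached only about 58% of linear scaling.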

If RAM is truly "random access" -- as it should be -- the quantity of RAM present should have no significant effect on the transfer rates in either direction (read or write).

What needs to happen, in my professional opinion, is a 4-port SATA/6G ramdrive that is designed -- in advance -- with logic at both ends of the SATA cables that scales efficiently, i.e. in near-linear fashion.

I'm not asking for 100% perfection here, OK?

However, plenty of measurements of 4 x i-RAMs in RAID 0 have already been reported on the Internet (e.g. see youtube.com for video illustrations).

I think it is reasonable to accept 10-20% raw overhead from 4 such ramdisks wired to Intel's ICH10R, provided that the logic overhead inside the ramdisks is no larger than that.

Thus, Intel's latest single SATA/3G SSD reads at about 250 MB/second over a 300 MB/second interface: 250/300 = 83.3% efficiency, or ~17% overhead.
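The same efficiency/overhead split can be computed directly. This is a small sketch using the 250 and 300 MB/second figures from the text; `interface_overhead` is a hypothetical helper name, and "overhead" is taken to mean whatever fraction of the interface ceiling the device does not deliver.

```python
from __future__ import annotations


def interface_overhead(measured_mb_s: float, interface_mb_s: float) -> tuple[float, float]:
    """Return (efficiency, overhead) of a device relative to its interface ceiling."""
    efficiency = measured_mb_s / interface_mb_s
    return efficiency, 1.0 - efficiency


# Figures from the text: ~250 MB/second reads over a 300 MB/second SATA/3G link.
eff, overhead = interface_overhead(250.0, 300.0)
print(f"efficiency: {eff:.1%}, overhead: {overhead:.1%}")
```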

Allowing that overhead to reach 20%, we should be able to design and manufacture controllers at each end of the SATA cables that achieve the following scaling with SATA/3G interfaces:

300 MB/second x 4 devices x 0.80 efficiency = 960 MB/second

And, we should also expect linear scaling with the arrival of SATA/6G:

960 MB/second x 2 = 1,920 MB/second
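The target rates above follow from one multiplication: per-link bandwidth x number of devices x efficiency. The sketch below reproduces them and also shows the 90% end of the 10-20% overhead range. It assumes, as the text does, 300 MB/second per SATA/3G link and exactly double that for SATA/6G, with each device on its own link; `target_throughput` is an illustrative name only.

```python
def target_throughput(link_mb_s: float, devices: int, efficiency: float) -> float:
    """Aggregate target for an array of identical devices, each on its own SATA link."""
    return link_mb_s * devices * efficiency


SATA_3G = 300.0  # MB/second usable per link, as used in the text
SATA_6G = 600.0  # MB/second, assumed to be exactly double SATA/3G

for label, link in (("SATA/3G", SATA_3G), ("SATA/6G", SATA_6G)):
    for eff in (0.90, 0.80):  # 10% and 20% overhead, the range proposed in the text
        total = target_throughput(link, devices=4, efficiency=eff)
        print(f"{label}, 4 devices, {eff:.0%} efficiency: {total:,.0f} MB/s")
```

At 80% efficiency this reproduces the 960 and 1,920 MB/second targets; at 90% the same arithmetic gives 1,080 and 2,160 MB/second.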

Anything less than these target rates would indicate controller logic with efficiencies that are unnecessarily inferior.

Sincerely yours,

/s/ Paul Andrew Mitchell, Inventor and Systems Development Consultant

All Rights Reserved without Prejudice

Why produce a device that can take advantage of high-bandwidth memory such as DDR2, then limit it through crappy interface design?

When I was shopping for a motherboard for my new computer, this was a factor in my choices. Sure, using 1x PCIe wasn't a limitation 2+ years ago, but it will be in the future.