just wondering if you guys think that this is accurate:

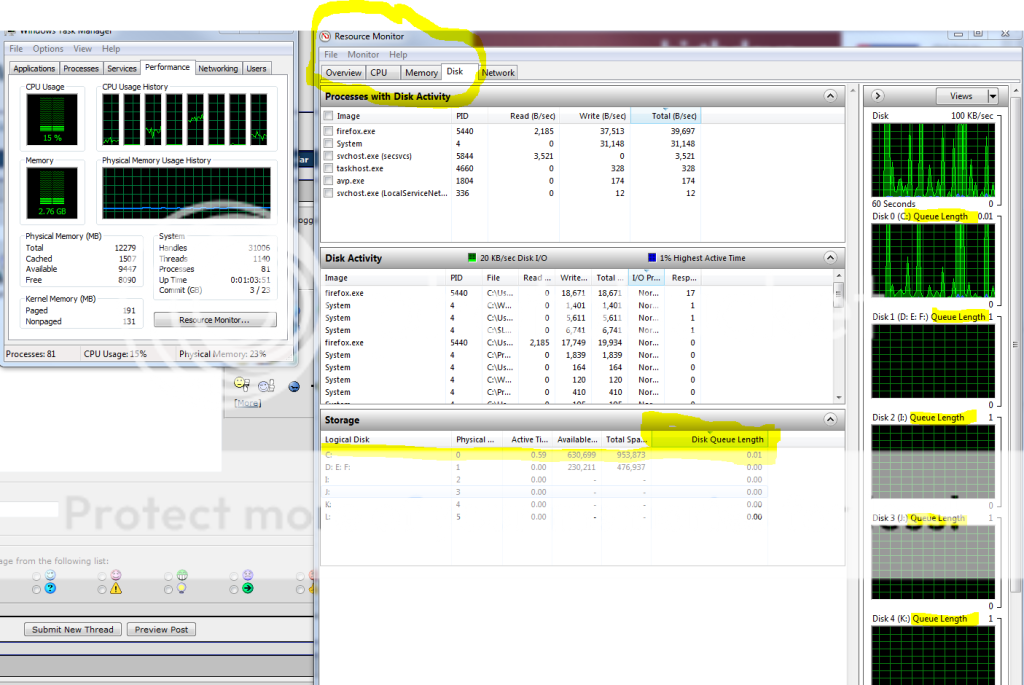

i have used this quite a bit. of course the disk queue number fluctuates, but it rarely goes over one unless i am benchmarking.

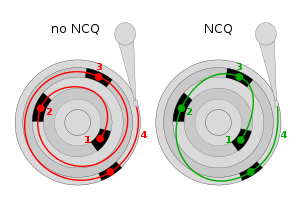

however, i think that shows that it gives accurate numbers. when loading games, it never goes above 1, or at least very rarely. this makes me wonder if the operating system is being fed data fast enough that requests never stack up into a deeper queue. the operating system or file system handles the queue depth, so it would not be a static number, or am i incorrect in that?
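one rough way to sanity-check the idea is little's law: the average number of requests outstanding equals the request rate times the average time each request takes. here is a minimal sketch of that arithmetic. the function name and every number in it are made up for illustration, they are not readings from the screenshots above.

```python
def average_queue_depth(iops: float, avg_latency_ms: float) -> float:
    """Little's law: average outstanding requests = request rate * time per request."""
    # convert latency from milliseconds to seconds so the units match IOPS
    return iops * (avg_latency_ms / 1000.0)


# hypothetical workloads, chosen only to show the shape of the result
game_load = average_queue_depth(iops=2000, avg_latency_ms=0.2)
benchmark = average_queue_depth(iops=80000, avg_latency_ms=0.4)

print(f"game load: ~{game_load:.1f} outstanding requests on average")
print(f"benchmark: ~{benchmark:.1f} outstanding requests on average")
```

if the drive finishes each request quickly compared to how often new ones arrive, the average stays below 1, which would fit what i see when loading games. a benchmark that deliberately keeps many requests in flight pushes it much higher.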

i dunno, just thoughts. maybe it isn't accurate at all. i think it is though.


i think it might be specific to whatever game is running. how is queue depth figured, though? what controls it, the program or the OS? because if the operating system is assigning queue depth based upon the needs of the OS, then i have theories...