Here's my little experiment... I have no idea how many people will have the patience to do something like this, but here goes...

This is the same program as the one here:

http://www.xtremesystems.org/forums/...d.php?t=221773

Download:

http://www.numberworld.org/y-cruncher/

but this time... it's all about disk bandwidth.

Starting from version 0.5.2, there's a new feature that will let you use the disk to perform gigantic computations that would otherwise not fit in ram.

Given the memory-bound nature of computing Pi, such computations will be extremely limited by disk bandwidth - hence a hard drive benchmark.

Warning... The use of SSDs is NOT recommended.

The sheer number of writes that this program will subject your disks to can rapidly wear out SSDs.

I don't actually know how true this is, but the I/O meter scares the hell out of me: 1 TB of writes for just 10 billion digits.
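The 1 TB figure lends itself to a quick back-of-the-envelope wear estimate. This is only a sketch. It assumes total writes scale linearly with the digit count, which is a simplification: the only data point is ~1 TB for 10 billion digits, and the real scaling may well be worse than linear. The endurance rating (`HYPOTHETICAL_TBW`) is a made-up number, not a spec for any real drive; substitute the TBW rating of your own SSD.

```python
# Back-of-the-envelope SSD wear estimate.
# ASSUMPTION: total writes scale linearly with the digit count. The only data
# point is the ~1 TB of writes observed for a 10 billion digit run.
WRITES_TB_PER_10B_DIGITS = 1.0


def estimated_writes_tb(digits: int) -> float:
    """Estimated terabytes written by a run of `digits` decimal digits."""
    return WRITES_TB_PER_10B_DIGITS * digits / 10_000_000_000


def runs_within_endurance(digits: int, endurance_tbw: float) -> float:
    """Number of runs of this size that fit inside a drive's rated write endurance."""
    return endurance_tbw / estimated_writes_tb(digits)


if __name__ == "__main__":
    HYPOTHETICAL_TBW = 100.0  # made-up endurance rating, not a real drive spec
    for digits in (10_000_000_000, 100_000_000_000):
        print(f"{digits:,} digits: ~{estimated_writes_tb(digits):.1f} TB written, "
              f"~{runs_within_endurance(digits, HYPOTHETICAL_TBW):.0f} runs before "
              f"reaching {HYPOTHETICAL_TBW:.0f} TBW")
```

With a hypothetical 100 TBW rating, that works out to about 100 runs at 10 billion digits, or about 10 runs at 100 billion digits, before the drive hits its rated endurance.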

These benchmarks will last several hours at the very least.

So run them overnight if you don't want to sit through them.

Be aware that the larger benchmarks may take days.

Since it's still a "computation", about 5 - 50% of the benchmark will still be CPU limited depending on the hardware. So a modest, but stable, overclock will help.

Most setups will be less than 20% CPU-limited. 50% is very rare; you basically need a ton of ram and a FusionIO drive to get there.
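Why a modest overclock helps only modestly can be illustrated with Amdahl's law. This is a standard model swapped in for illustration, not something y-cruncher reports. The sketch assumes the CPU-bound share of the run speeds up exactly in proportion to the clock and that the disk-bound share does not change at all.

```python
def overall_speedup(cpu_fraction: float, cpu_speedup: float) -> float:
    """Amdahl's law: only the CPU-bound share of the run benefits from a faster CPU.

    cpu_fraction: share of total run time that is CPU-limited (0.0 to 1.0)
    cpu_speedup:  how much faster the CPU-bound part gets, e.g. 1.1 for +10% clock
    """
    if not 0.0 <= cpu_fraction <= 1.0:
        raise ValueError("cpu_fraction must be between 0 and 1")
    if cpu_speedup <= 0.0:
        raise ValueError("cpu_speedup must be positive")
    # The disk-bound share is unchanged; the CPU-bound share shrinks by cpu_speedup.
    return 1.0 / ((1.0 - cpu_fraction) + cpu_fraction / cpu_speedup)


if __name__ == "__main__":
    for fraction in (0.05, 0.20, 0.50):
        gain = overall_speedup(fraction, 1.10)
        print(f"{fraction:.0%} CPU-bound, +10% clock -> {100 * (gain - 1):.1f}% faster overall")
```

Under this model, a 10% overclock buys roughly 2% on a setup that is 20% CPU-bound, and a bit under 5% at the rare 50% extreme.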

Here's how it works (since this is entirely experimental, there's no special option for it, so bear with me):

Step 1: Download and run the program.

Step 2: Select option 3. (Custom Compute a Constant)

Step 3: Select option 3. (Decimal Digits)

Step 4: Enter the # of digits you would like to compute and hit ENTER. (e.g. 10,000,000,000 digits, but without the commas)

Step 5: Select option 8. (Computation Mode)

Step 6: Select option 3. (Advanced Disk Swapping)

*You must have selected at least 100,000,000 digits in step 4 for this option to appear.

Step 7: Select option 12. (Memory Needed)

Step 8: Enter the amount of memory you wish to use. The more the better.

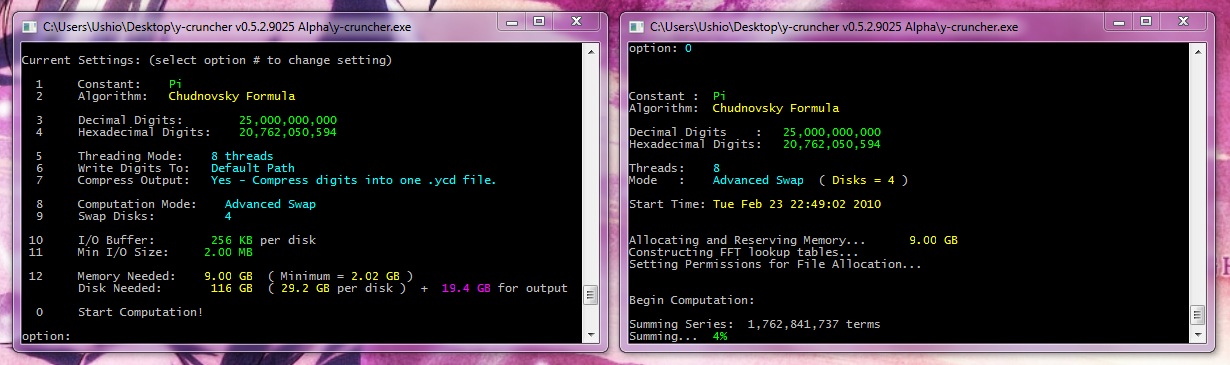

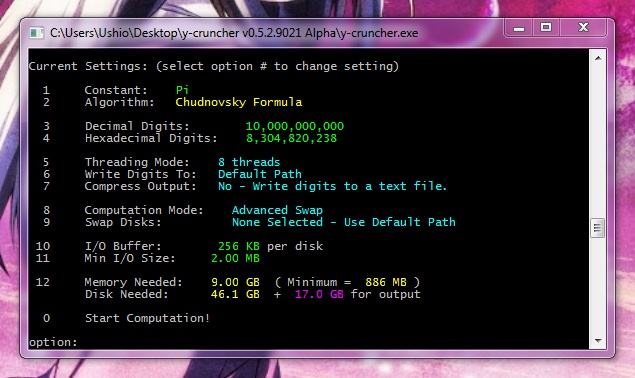

The menu should now look something like this:

Step 9: Select option 0. (Start Computation!)

The first thing you'll notice is that the beginning is VERY CPU-limited. This can last anywhere from a minute to more than an hour depending on the size of the computation and your hardware.

That's because there's nothing for the program to write to disk yet. It needs to do "enough work" before it runs out of memory and has to start writing to disk. The more ram you have, the longer this CPU-limited phase will be. (The same goes for a slow CPU.)

If your ram selection is almost as large as your computation (e.g. 9 GB of ram for 10 billion digits), then this CPU-limited phase will probably take up the first 70% of the series...

If this becomes a problem (because it's too CPU-limited to be a hard drive benchmark), then I can add a new rule specifying a minimum digit/memory ratio.

Once it gets past this phase, you will slowly start to see it use the disk, and more and more of it, to the point where it spends most of its time doing I/O.

There will still be portions of time where it will be CPU-limited, but it should be nothing like the beginning.

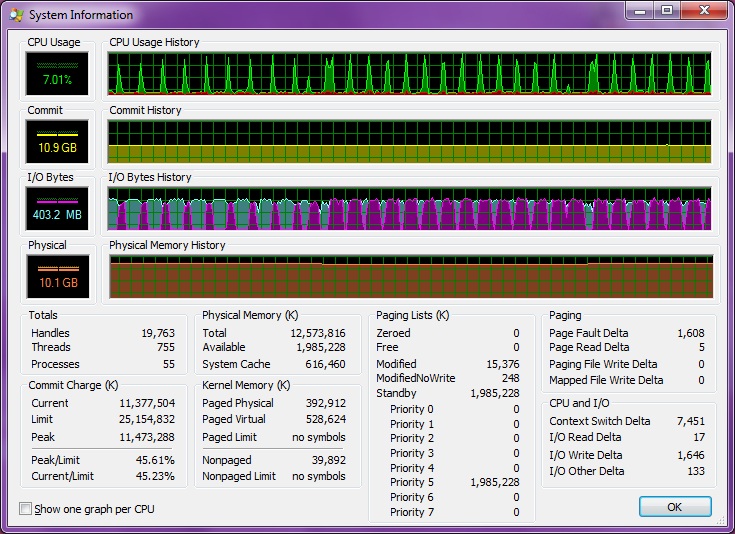

Here's a Process Explorer screenshot of a disk-limited portion of a large computation:

Even 400 MB/s of bandwidth isn't enough to keep up with the CPU. (a single Core i7 @ 3.5 GHz)

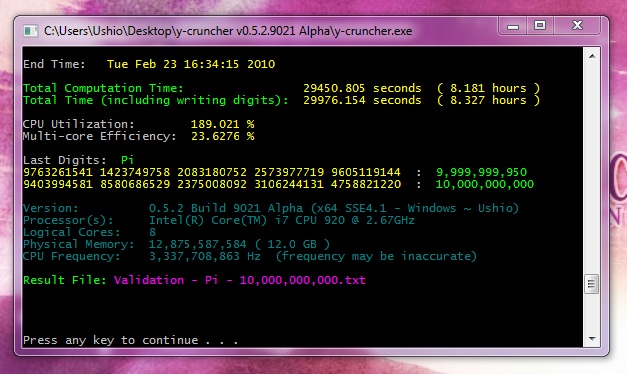

And when it's finally done...

8 hours on a Core i7 @ 3.5 GHz with a nearly full 1.5 TB Seagate (~80 MB/s)
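A quick way to see why this run is disk-limited is to compute how long the I/O alone takes at a given bandwidth. This sketch assumes 1 TB = 10^12 bytes, 1 MB = 10^6 bytes, and that the ~1 TB from the I/O meter counts writes only; reads would add more time on top.

```python
def io_hours(total_bytes: float, bandwidth_mb_s: float) -> float:
    """Hours needed to move total_bytes at a sustained bandwidth (1 MB = 10**6 bytes)."""
    if bandwidth_mb_s <= 0:
        raise ValueError("bandwidth must be positive")
    return total_bytes / (bandwidth_mb_s * 1e6) / 3600.0


if __name__ == "__main__":
    ONE_TB = 1e12  # ~1 TB of writes for a 10 billion digit run (from the I/O meter)
    for mb_s in (80, 400):
        print(f"{mb_s} MB/s: {io_hours(ONE_TB, mb_s):.2f} hours just to write 1 TB")
```

At ~80 MB/s, the writes alone come to roughly 3.5 hours of the ~8-hour 10 billion digit run on the Seagate. Reads and the CPU-bound phases presumably account for much of the rest. At 400 MB/s, the same writes would take well under an hour.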

- Use the current entries in the score table below to guesstimate your run time before you actually run it. You don't wanna start a computation that will take days if you think it'll finish overnight...

- A 100 billion digit run on a Core i7 @ 3.5 GHz with 4 x 2TB Hitachi HDs takes more than 2 days... hence what I mean by "for the EXTREMELY patient".

- You can use option 9 (Swap Disks) to specify multiple hard drives for increased bandwidth. This is similar to raid 0 - but it allows unlimited drives.

- Unless you can come up with more than 2 GB/s of sustained disk bandwidth, it will mostly be disk-limited.

- And for obvious reasons, you will not get any more speedup once your combined disk bandwidth exceeds your memory bandwidth...
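One way to turn the score table into a run-time guesstimate is to fit a power law, hours ≈ a · digits^k, to runs from a single machine and extrapolate. This is a sketch using one set of entries from the table below (the 4 x 2 TB Hitachi rows). The power-law form is an empirical assumption, not the program's actual complexity, and it only holds for that same hardware and configuration.

```python
import math

# (digits, hours) for one machine from the score table:
# Core i7 920 @ 3.34 GHz, 9 GB ram, 4 x 2 TB Hitachi, v0.5.2 x64.
REFERENCE_RUNS = [
    (10_000_000_000, 2.953),
    (25_000_000_000, 9.591),
    (50_000_000_000, 22.343),
    (100_000_000_000, 53.665),
]


def fit_power_law(runs):
    """Least-squares fit of log(hours) = log(a) + k * log(digits); returns (a, k)."""
    xs = [math.log(d) for d, _ in runs]
    ys = [math.log(h) for _, h in runs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    k = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    a = math.exp(mean_y - k * mean_x)
    return a, k


def guess_hours(digits, runs=REFERENCE_RUNS):
    """Extrapolate run time for `digits` from the fitted power law."""
    a, k = fit_power_law(runs)
    return a * digits ** k


if __name__ == "__main__":
    _, k = fit_power_law(REFERENCE_RUNS)
    print(f"fitted exponent k = {k:.2f}")
    print(f"200 billion digits: ~{guess_hours(200_000_000_000):.0f} hours on this machine")
```

For these four runs the fitted exponent comes out around 1.26, so doubling the digit count costs somewhat more than double the time. To estimate your own machine, swap in your own smaller runs as the reference points.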

Rules:

- As long as you are computing Pi using "Advanced Swap", you are free to choose your own options.

- To prevent the use of ram drives, your selected memory + disk MUST be greater than your physical memory.

- Post a screenshot of the run as well as the contents of the validation file that it generates.

You actually need the entire validation file to verify a computation, but this is just an experiment, so a screenshot is enough. I seriously doubt anyone would stoop low enough to cheat.
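The two hard constraints above (the 100,000,000-digit minimum for Advanced Disk Swapping, and memory + disk exceeding physical memory) can be checked before starting a multi-hour run. This is a hypothetical helper, not part of y-cruncher. It interprets "selected memory + disk" as the ram given to the program plus the computation's required disk space, which is an assumption about the rule's wording.

```python
MIN_SWAP_DIGITS = 100_000_000  # below this, Advanced Disk Swapping isn't offered


def check_planned_run(digits, selected_ram_gb, required_disk_gb, physical_ram_gb):
    """Return a list of rule problems for a planned run; an empty list means it looks OK.

    Interprets "selected memory + disk" as the ram given to the program plus the
    disk space the computation needs (an assumption about the rule's wording).
    """
    problems = []
    if digits < MIN_SWAP_DIGITS:
        problems.append("Advanced Disk Swapping requires at least 100,000,000 digits")
    if selected_ram_gb + required_disk_gb <= physical_ram_gb:
        problems.append("selected memory + disk must exceed physical memory")
    return problems


if __name__ == "__main__":
    # 10 billion digits with 9 GB of ram on a 12 GB machine (46.1 GB of disk).
    print(check_planned_run(10_000_000_000, 9.0, 46.1, 12.0))
```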

Tips:

- Use as many hard drives as you can. Disk bandwidth will scale with the # of hard drives you use. But they will still be bottlenecked by the slowest drive.

- Play with your raid 0 settings. If raid 0 is able to scale your bandwidth linearly, then stick with it. If not, then separate your HDs and let the program manage them separately.

- Use as much ram as you can, but leave a bit for the OS. (With 12 GB, I give the program about 9 - 10 GB. With 64 GB, I give it 60 GB.) The more ram you give it, the less often it will need to access the disk, and the more sequential the accesses will be.

- Move the pagefile off the drives you will crunch on.

- Empty and freshly formatted drives tend to be faster.

- Unlike raid 0, there is no limit to the number of drives the program will let you use. So if you have a lot of drives lying around (and they're similar in speed), feel free to go all-out.

- There is still a portion of the benchmark that is CPU-bound. (Especially the beginning.) So a modest overclock will always help.
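The tips about drive count, the slowest drive, and memory bandwidth can be combined into one rough model. This is a simplification, not how the program actually schedules I/O. It assumes I/O is split evenly across drives, so the slowest drive sets the pace and the total is (number of drives) × (slowest drive), optionally capped at memory bandwidth. The drive speeds in the example are hypothetical.

```python
def effective_bandwidth_mb_s(drive_speeds_mb_s, memory_bandwidth_mb_s=None):
    """Rough combined bandwidth when I/O is spread evenly across several drives.

    Model (an assumption): each drive gets an equal share of the I/O, so the
    slowest drive sets the pace and total = number_of_drives * slowest_drive.
    Beyond memory bandwidth, extra disks stop helping, so optionally cap there.
    """
    if not drive_speeds_mb_s:
        raise ValueError("need at least one drive")
    total = len(drive_speeds_mb_s) * min(drive_speeds_mb_s)
    if memory_bandwidth_mb_s is not None:
        total = min(total, memory_bandwidth_mb_s)
    return total


if __name__ == "__main__":
    # Hypothetical drive speeds: three fast drives and one slow one.
    print(effective_bandwidth_mb_s([100, 100, 100, 60]))  # 4 x 60
    print(effective_bandwidth_mb_s([100, 100, 100]))      # 3 x 100
```

Under this model, adding a slow drive can actually lower the total (240 MB/s with it versus 300 MB/s without it), which is why drives of similar speed are preferable.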

Versions of different speeds are color-coded accordingly:

Yellow = v0.5.2, first version with Advanced Swap Mode

Color codes for different classes of systems:

Green = Single-Processor

Purple = 2 Processors

Red = 4 Processors

Underline = Not Overclocked

I'll be syncing this table with the Advanced Swap computations in the other thread.

----------------------------------------------------------------------------------------------------------------------------------------------------------------

Benchmarks

1,000,000,000 digits:

7139868209 3196353628 2046127557 1517139511 5275045519 : 1,000,000,000

Required Disk: 4.79 GB

1.976 hours - v0.5.2 x86 SSE3 - poke349 - Intel Pentium D 920 @ 2.8 GHz - 3 GB DDR2 (Ram = 1.89 GB, HDs = 160 GB WD)

2,500,000,000 digits:

9228502005 4677489552 2459688725 5242233502 7255998083 : 2,500,000,000

Required Disk: 11.5 GB

5,000,000,000 digits:

0914971996 1298184401 9216126684 9425834935 5440797257 : 5,000,000,000

Required Disk: 23.0 GB

10,000,000,000 digits:

9403994581 8580686529 2375008092 3106244131 4758821220 : 10,000,000,000

Required Disk: 46.1 GB

2.953 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 9.00 GB, HDs = 4 x 2 TB Hitachi)

8.181 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 9.00 GB, HDs = 1.5 TB Seagate)

21.394 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 720QM @ 1.6 GHz (1.73 GHz 4-core Turbo Boost) - 6 GB DDR3 (Ram = 3.00 GB, HDs = 500 GB Seagate)

38.276 hours - v0.5.2 x86 SSE3 - poke349 - Intel Pentium D 920 @ 2.8 GHz - 3 GB DDR2 (Ram = 1.84 GB, HDs = 160 GB WD)

25,000,000,000 digits:

1309759846 5364560010 7388984278 8403481193 9913806533 : 25,000,000,000

Required Disk: 116 GB

9.591 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 9.00 GB, HDs = 4 x 2 TB Hitachi)

14.800 hours - v0.5.2 x86 SSE3 - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 1.84 GB, HDs = 4 x 2 TB Hitachi)

40.720 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 9.00 GB, HDs = 1.5 TB Seagate) (sub-optimal buffer settings)

50,000,000,000 digits:

6599559400 3447556105 3766739199 8513398712 7510930042 : 50,000,000,000

Required Disk: 233 GB

22.343 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 9.00 GB, HDs = 4 x 2 TB Hitachi)

100,000,000,000 digits:

9536515199 6948432428 3185077669 0674614692 0191295669 : 100,000,000,000

Required Disk: 467 GB

40.025 hours - v0.5.2 x64 SSE4.1 ~ Ushio - Shigeru Kondo* - Intel Core i7 975 @ 4.00 GHz - 12 GB DDR3 (Ram = 11.00 GB, HDs = 8 x 1 TB)

53.665 hours - v0.5.2 x64 SSE4.1 ~ Ushio - poke349 - Intel Core i7 920 @ 3.34 GHz (3.5 GHz Turbo Boost) - 12 GB DDR3 (Ram = 9.00 GB, HDs = 4 x 2 TB Hitachi)

I'll extend the sizes as needed. The program will go up to 10 trillion digits for now. (2.7 trillion is the current world record.)

*Shigeru Kondo is the actual name of the person. Not a screen name. He does not have an account on XtremeSystems.
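The "Required Disk" figures in the table grow almost exactly in proportion to the digit count (about 4.6 to 4.8 GB per billion digits). That makes a linear extrapolation a reasonable first guess for sizes not yet listed. This sketch assumes the proportionality continues beyond 100 billion digits. Under that assumption, the 10 trillion digit limit mentioned above would need on the order of 46,700 GB (roughly 47 TB). That is an estimate, not a figure reported by the program.

```python
# (digits, required disk in GB) as listed in the table above.
REQUIRED_DISK_GB = [
    (1_000_000_000, 4.79),
    (2_500_000_000, 11.5),
    (5_000_000_000, 23.0),
    (10_000_000_000, 46.1),
    (25_000_000_000, 116.0),
    (50_000_000_000, 233.0),
    (100_000_000_000, 467.0),
]


def estimate_required_disk_gb(digits):
    """Scale linearly from the largest listed size.

    ASSUMPTION: disk use stays roughly proportional to the digit count beyond
    the table (it does within the table: about 4.6 to 4.8 GB per billion digits).
    """
    ref_digits, ref_gb = REQUIRED_DISK_GB[-1]
    return ref_gb * digits / ref_digits


if __name__ == "__main__":
    for d, gb in REQUIRED_DISK_GB:
        print(f"{d:>19,} digits: {gb / (d / 1e9):.2f} GB per billion digits")
    print(f"10 trillion digits: ~{estimate_required_disk_gb(10_000_000_000_000):,.0f} GB")
```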
