Some people really have nothing better to do than to stir up some fanbois on the net :P

Some people really have nothing better to do than to stir up some fanbois on the net :P

That's when you're dealing with a traditional core with one FPU and one integer unit.

Also, do traditional cores have a single scheduler for the integer plus FPU?

What I'm talking about is, if you have a 256-bit AVX instruction, could AMD just make it so it just registers as using one of the two cores in the module? even though it needs to use both the 128-bit FPU pipelines (which belong to two cores)

So if the OS sees the 256-bit AVX instruction as just being processed by core 1, then it allows core 2 to process integer instructions

Last edited by Apokalipse; 05-12-2011 at 04:40 AM.

No I don't think so. Both Core 1 and 2 would be in use (technically).

(╯°□°)╯︵ ┻━┻

currently has .2748428927348 internetscreate your own rules to find the answer

@Apokalipse, Dolk:

Nice to see at least some technical discussion going on, other than analyzing the wave of faked BD screenshots ^^

To your discussion:

Well, after providing 2 instruction streams to a BD module, the OS is kind of out of the loop of executing them.

The fetch/decode/dispatch path works on 2 threads, capable of switching between them on a per-cycle-basis. The dispatch unit dispatches groups of "cops" (complex ops, AMD term) belonging to either thread (I hope, my English skills aren't killing the message here). Such a dispatch group might contain ALU, AGU and FP ops of one thread. I assume the FP ops or the according dispatch group have a tag denoting their respective thread.

From now on the integer and the FP schedulers are kind of independently working on the cops/uops they received, until control flow changes. The FP scheduler "sees" a lot of uops asking for ready inputs and producing outputs. Except for control flow changes it actually doesn't even need to know to which thread an op belongs to. The thread relation is assured by a register mapping (has to be done for OOO execution anyway). So the FPU could execute uops as their inputs become available.

If there are 256b ops, they're already decoded as 2 uops ("Double decode" type) to do calculations on both 128b wide halves of the SIMD vector. I don't think that there is an explicit locking mechanism. The FPU could basically issue both uops in the same cycle or - if there are ready uops of the other thread - issue them to one 128b unit during 2 consecutive cycles (the software optimization manual show latencies of 256b ops supporting this). This actually depends on the implemented policy: issue uops based on their age only or issue them ensuring balanced execution of both threads.

But what's important to your discussion: this happens independently from both integer cores.

Thanks for nice explanation Dresden!

Hopefully not too long till we will be able to torture this design with variety of workloads and see how it performs. Also I think OS scheduling will be quite important for overlay CPU performance due to module based nature of it, but that's the same for any HT enabled CPU.

Overlay I hope AVX performance won't be that far off 4 core SB while SSE should be higher. Now we just need some AVX enabled apps to start rolling out in bigger quantities.

RiG1: Ryzen 7 1700 @4.0GHz 1.39V, Asus X370 Prime, G.Skill RipJaws 2x8GB 3200MHz CL14 Samsung B-die, TuL Vega 56 Stock, Samsung SS805 100GB SLC SDD (OS Drive) + 512GB Evo 850 SSD (2nd OS Drive) + 3TB Seagate + 1TB Seagate, BeQuiet PowerZone 1000W

RiG2: HTPC AMD A10-7850K APU, 2x8GB Kingstone HyperX 2400C12, AsRock FM2A88M Extreme4+, 128GB SSD + 640GB Samsung 7200, LG Blu-ray Recorder, Thermaltake BACH, Hiper 4M880 880W PSU

SmartPhone Samsung Galaxy S7 EDGE

XBONE paired with 55''Samsung LED 3D TV

new article at AMDblog about states (C6)

http://blogs.amd.com/work/2011/05/16...AMD+at+Work%29

ROG Power PCs - Intel and AMD

CPUs:i9-7900X, i9-9900K, i7-6950X, i7-5960X, i7-8086K, i7-8700K, 4x i7-7700K, i3-7350K, 2x i7-6700K, i5-6600K, R7-2700X, 4x R5 2600X, R5 2400G, R3 1200, R7-1800X, R7-1700X, 3x AMD FX-9590, 1x AMD FX-9370, 4x AMD FX-8350,1x AMD FX-8320,1x AMD FX-8300, 2x AMD FX-6300,2x AMD FX-4300, 3x AMD FX-8150, 2x AMD FX-8120 125 and 95W, AMD X2 555 BE, AMD x4 965 BE C2 and C3, AMD X4 970 BE, AMD x4 975 BE, AMD x4 980 BE, AMD X6 1090T BE, AMD X6 1100T BE, A10-7870K, Athlon 845, Athlon 860K,AMD A10-7850K, AMD A10-6800K, A8-6600K, 2x AMD A10-5800K, AMD A10-5600K, AMD A8-3850, AMD A8-3870K, 2x AMD A64 3000+, AMD 64+ X2 4600+ EE, Intel i7-980X, Intel i7-2600K, Intel i7-3770K,2x i7-4770K, Intel i7-3930KAMD Cinebench R10 challenge AMD Cinebench R15 thread Intel Cinebench R15 thread

Isn't that basically the same info that was already posted back in Feb. ?

Yes, but one interesting thing stood out for me:

I wonder how's that estimated, what's the best case scenario here. Possibly a 12 core Opteron (what are they idling at?), compared to a .... any Bulldozer with all but one module sleeping?With the new “Bulldozer” core, when both cores in a module are idle for a pre-determined period of time, the power is gated off to the module. Our design engineers estimate that this will drop the power consumption by up to 95% in idle over the previous generation of processor cores

Another interesting bit about the article is JF commenting on someone and thus also confirming turboboost over all cores are mostly related to non-fpu workloads. e.g. all integer applications will benifit from (at least) 500MHz higher clock. (~16% extra performance through turboost). Most people already speculated the turbo was related to the fpu but now it is somehwat confirmed.

Wonder if that is anything like the Bobcat power draw I saw happening at idle...

The ASRock E350M-1 with the iGPU enabled would drop down to around only 0.836V when idle! Load was as high as 1.36V, but that wasn't ever constant either. This was all while running with C'n'Q OFF and Windows Power Plan set to Min-100% and Max-100% on CPU, so 0.8xxV at full speed (1600MHz) for dual core o_0

On the Sapphire IPC-E350M1 I received, the iGPU was bad in some wayThe system had corrupted graphics immediate at POST, and would lock up on even the BIOS logo. Tossing in a discrete card and hastily turning off the iGPU in the BIOS (before it crashed) rectified the issue, which BTW a BIOS update didn't fix either. Anyways, point of that is the fact the iGPU is disabled and Sapphire's method completely turns it off, compared to ASRock which seems to just... I dunno... remove it's device ID so Windows doesn't detect it? Same situation as above, the idle voltage with no iGPU is something like 0.58V!! Again, still at 1600MHz, just idling in Windows (with programs open, not just after a fresh boot).

The other thing is the voltage is not static, but fluctuates between specific voltages based on load. I had seen basically everything between the .5V to 1.35V (on the Sapphire) in 0.1V increments, with the typical range being around 1.15V to 1.25V for your every-day web browsing. Essentially what seemed to be occuring was the system automatically switching on/off power phases, like what a number of motherboards now do. Granted, what I saw might be exclusive to AMD's mobile line, but I have a feeling we'll see close to the same results with most/all the Bulldozer platforms too. Hell that might be one the main reason for 990FX!

[/rambling]

You might want to measure the voltages with a Voltometer, if possible on that board, cause I don't think going as low as you describe is possible with silicone based semiconductors, but I might be wrong.

I'd be more than happy to, but I'm not entirely sure where I'd need to measure at :\ I have no qualms about poking around, even at the small SMD caps, but if I took that approach it'd literally take me a week to get lucky enough to find it lol I'll drag out my spare PSU and HDD, boot the board and probe around the major components (MOSFETs to start).

To expand on this bit of info, I found this when looking for hints on 'Dozer pricing with Google lol It's from a 20 Questions AMD blog, from Aug 2010...

It seems as though we won't really be able to utilize a Bulldozer chip to it's full extent until Windows 8Originally Posted by John Fruehe@AMD[B

I'm no coder, but I have a feeling it's not going to be as easy a thing to do to make Windows 7 capable of that where M$ can just roll out a 50MB patch, but I'll cross my fingers just in case!!

EDIT: JUST in case there was any confusion still, here are some comments he posted from the same Blog entry:

"John Fruehe September 13, 2010

A module will have 2 cores in it. It will be seen by the hardware and software as 2 cores. The module will essentially be invisible to the system."

"John Fruehe September 8, 2010

We have already said one core per thread, period. But a single thread gets all of the front end, all of the FPU and all of the L2 cache if there is not a second thread on the module."

Last edited by Formula350; 05-17-2011 at 04:59 PM.

dunno

I think on launch 150euro; and the crosshair V on 180-200euro.

Sabertooth must cost 30-50euro less than the CH V otherwise nobody will prefer it to a CH V.

But I think Sabertooth it will be very interesting looking the specs

-sorry for my basic english

EDIT

posted here http://www.xtremesystems.org/forums/...72#post4851872

Last edited by liberato87; 05-18-2011 at 02:45 AM.

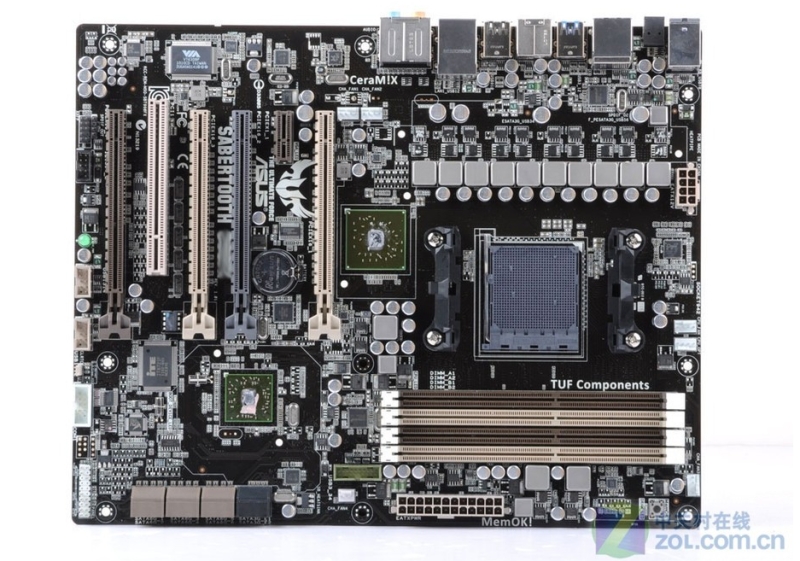

Hmm, that isn't the TUF model shown in the leaked roadmap, unless they plan on having a TUF Armored and Non-TUF model o_0 I was kinda digging that P67 model heh

EDIT: OK I guess technically it's a TUF, but I think everyone would consider a TUF Sabertooth to be synonymous with an Armored board :\

Looks like the same image posted on page 14 or 15

EDIT: Dug up a better pics

Can anyone explain the function of the second oval crystal location next to the SB? Seems like all the AMD boards have two (at least the 4 I currently have, 890GX/FX and two E350), one being unpopulated... That for use if the manufacturer wants to offer the ability to adjust the SB's clock itself?

Also somewhat disconcerting (IMO) is the SB heatsink looking plastic haha Does look to come in contact with whatever that other chip is, above the cap/crystal that sit to the right of the black SATA ports...

Last edited by Formula350; 05-18-2011 at 10:18 AM.

Jetway(Magic-pro) has one also

http://vr-zone.com/articles/jetway-s...aks/12269.html

Had Jetway put a PCIe x1 above the first PEG slot and put on right-angle SATA ports, that'd be a really nice entry level 990X board I think.

EDIT: Though one redeeming point in my eyes is that mini-PCIe slot! I'm becoming a real fan of those as it gives you the ability to run WiFi, cheaply, and not need a full sized ATX card. Which on this board would not be feasible if running Crossfire/SLI as the x1 and PCI slots would all be blocked (the second x1 wouldn't technically, but putting a card there might impede airflow).

Last edited by Formula350; 05-18-2011 at 08:10 PM.

With most of the cases I have used I like the placement of the SATA ports. They are above the Graphics card and I always have problems with access to the angled ports.

~1~

AMD Ryzen 9 3900X

GigaByte X570 AORUS LITE

Trident-Z 3200 CL14 16GB

AMD Radeon VII

~2~

AMD Ryzen ThreadRipper 2950x

Asus Prime X399-A

GSkill Flare-X 3200mhz, CAS14, 64GB

AMD RX 5700 XT

I agree with you Charged!

For someone who builds a machine and then never touches it again the angled ports usually result in a cleaner install, but for folks like us (most XS members) the angled SATA ports are a PITA.

It's not my deciding factor when picking a board, but I'm not a big fan of the angled ports...

They make swapping gear alot more difficult most of the time.

AMD FX-8350 (1237 PGN) | Asus Crosshair V Formula (bios 1703) | G.Skill 2133 CL9 @ 2230 9-11-10 | Sapphire HD 6870 | Samsung 830 128Gb SSD / 2 WD 1Tb Black SATA3 storage | Corsair TX750 PSU

Watercooled ST 120.3 & TC 120.1 / MCP35X XSPC Top / Apogee HD Block | WIN7 64 Bit HP | Corsair 800D Obsidian Case

First Computer: Commodore Vic 20 (circa 1981).

It quite early info, but still official and very confusing and:

http://www.anandtech.com/show/2881

AMD refers to the module as being two tightly coupled cores, which starts the path of confusing terminology. A few of you wondered how AMD was going to be counting cores in the Bulldozer era; I took your question to AMD via email:

Also, just to confirm, when your roadmap refers to 4 bulldozer cores that is four of these cores:

http://images.anandtech.com/reviews/.../bulldozer.jpg

Or does each one of those cores count as two? I think it's the former but I just wanted to confirm.

AMD responded:

Anand,

Think of each twin Integer core Bulldozer module as a single unit, so correct.

As said before, maybe AMD is happy to begin with a dual (two modules), tricore (three modules, one inactive) and a quadcore (4 modules) bulldozer and see the extra integer cores as "extra execution power" like Intel's Hyperthreading technology.

Interlagos dual 4 module MCM cpu will be a server Opteron part, but maybe also the hexacore (two Bulldozer dies with one inactive module) and eightcore (two fully Bulldozer dies).

Much talks for it, and much against it! One month left to go.

Ivy Bridge 3770K @ ????MHz

6c Intel Xeon X7460 24MB cache 16GB RAM 22TB HDD fileserver

Dual Intel Xeon E5620 workstation

SB 2600K @ 5016MHz 1.37v HT on AIR primestable

AMD Athlon X3 425 @ B25 4GHz+ AIR

AMD Athlon X2 6400+ @ 3811MHz AIR

AMD Athlon X2 3600+ @ 3200MHz AIR

AMD Athlon XP 1700+ @ 2714MHz AIR

Thermalright Ultra-120 Extreme

Corsair 8GB XMS3 2000MHz

ATI Radeon HD5850 @ 1000MHz+/1200MHz+

Windows 7 Enterprise x64

Corsair HX750W

Hm, I remember John saying that they will not use modules for marketing, it will be cores.

RiG1: Ryzen 7 1700 @4.0GHz 1.39V, Asus X370 Prime, G.Skill RipJaws 2x8GB 3200MHz CL14 Samsung B-die, TuL Vega 56 Stock, Samsung SS805 100GB SLC SDD (OS Drive) + 512GB Evo 850 SSD (2nd OS Drive) + 3TB Seagate + 1TB Seagate, BeQuiet PowerZone 1000W

RiG2: HTPC AMD A10-7850K APU, 2x8GB Kingstone HyperX 2400C12, AsRock FM2A88M Extreme4+, 128GB SSD + 640GB Samsung 7200, LG Blu-ray Recorder, Thermaltake BACH, Hiper 4M880 880W PSU

SmartPhone Samsung Galaxy S7 EDGE

XBONE paired with 55''Samsung LED 3D TV

@Module, cores:

It was even not a clear thing for AMD in the beginning.

1. The engineers developed BD and called a module "core" (in patents), since in their view they developed a much bigger core than 10h and added a copy of integer execution resources (which is basically similar to a simplified CPU - not x86 compatible it just works on already translated (decoded) instructions like a RISC CPU). The module could also be seen as a heavily optimized dual core processor. It basically has everything, while sharing those parts, where sharing makes sense.

2. Someone possibly thought that these small cores will perform much better than logical cores in a hypothetical SMT machine, so this needs to be marketed somehow.

What they are depends on the applied definitions of "core".

Bookmarks