Hi there,

I am about to create a RAID 0 array with two WD6400AAKS HDDs on an ASUS P5K-E WiFi/AP (ICH9R) motherboard.

The plan is to create a first slice of 300 GB and a second slice with all the remaining space.

Most of the data consists of small files, around 5-6 MB each.

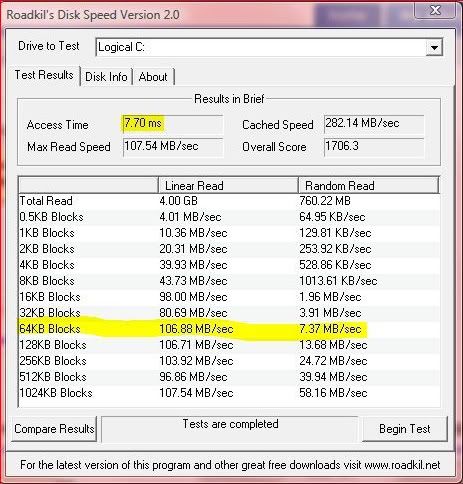

What stripe size should I choose in order to get the best all-around performance? 64 KB? I am particularly interested in the fastest possible boot times. The operating system will be Windows XP Pro.

Is it right that the smaller the stripe size, the better the boot performance?
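
To make the question concrete, here is a rough sketch of how I understand striping to split a file across the two disks. It assumes plain round-robin placement, a contiguous file that starts on a stripe boundary on the first disk, and no filesystem overhead. The function name `stripes_per_disk` is just my own illustration, not anything from the Intel RAID tools, and it says nothing about actual boot times.

```python
KB = 1024
MB = 1024 * KB

def stripes_per_disk(file_size_bytes, stripe_size_bytes, num_disks=2):
    """Count how many stripe units of one file land on each disk.

    Assumption: the file is contiguous and starts at a stripe
    boundary on disk 0; stripes are handed out round-robin.
    """
    # Ceiling division: a partially filled last stripe still counts as one.
    total = -(-file_size_bytes // stripe_size_bytes)
    base, extra = divmod(total, num_disks)
    # The first `extra` disks each receive one more stripe than the rest.
    return [base + (1 if i < extra else 0) for i in range(num_disks)]

if __name__ == "__main__":
    # Compare a typical 5 MB file against a few candidate stripe sizes.
    for stripe_kb in (16, 32, 64, 128):
        print(stripe_kb, "KB:", stripes_per_disk(5 * MB, stripe_kb * KB))
```

With files of 5-6 MB, every stripe size in that list spreads the file evenly over both disks, so as far as I can tell the choice matters mostly for files smaller than one stripe, which stay on a single disk.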
