Barrel or Multi-context processors have long existed in Supercomputers (CDC Cyber, etc) but for decades it has been ignored on the desktop because of a fascination for single thread performance. Which in a modern desktop is not desirable because the modern user typically is running several or more tasks at any given second. (Web Browser, music, operating system, gui, etc) The BeOS is probably the best example of how threading improves the user experience, since if one small task is delayed the user isn't going to be worried since the rest of the system is still humming along. In contrast with a single thread processor, that if a process gets stuck in a simple loop it can halt the entire system (until preempted)

A Barrel processor takes that simple logic to the extreme and turns a single processor into dozens of virtual processors running at a fraction of the speed with a significant benefits:

1) No forward feed circuits [Significant reduction in die space]

2) All instructions execute in exactly 1 clock cycle [Compiler design simplified and processor logic significantly simplified]

3) Significantly less Cache Sensitive [ 20 cycle L1 Cache would have the same performance as 1 cycle Cache]

4) Performance significantly easier to quantify [Threads * Clock speed/pipeline length] and double [Double clock speed will give exactly double performance since there are no pipeline stalls, bubbles, or wasted clock cycles]

5) Would promote more efficient use of Memory [since the modern practice of loop unrolling would have no benefit and significant deemphasis of the stack]

6) Superior user experience [Makes it possible for each application to have its own dedicated processor and significantly reduces the need for preemption and context switches]

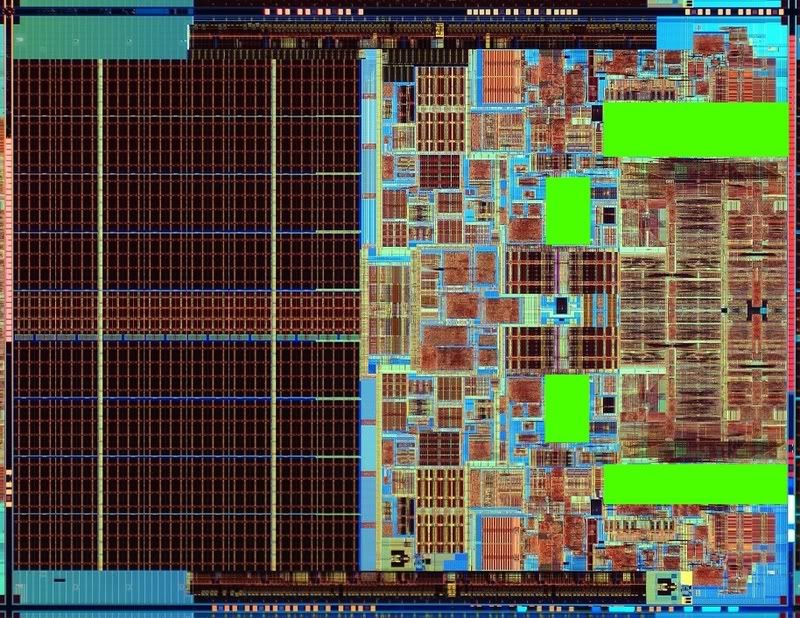

Since I figure we might as well do a dramatic example, if Conroe was modified into a barrel processor it would run 56 threads of which each integer instruction will take 1 clock cycle and Each SIMD instruction would take 1.2 Clock cycles. When stripped to bare Execution logic, it would only take up only 12% of the transistors currently being used in Conroe and would effectively just be the green Regions in this picture(and any extra logic required for connections) :

However there are draw backs:

1) Each thread would effectively run at 300Mhz

2) Adding Cache and the associated logic would only slow the processor down

3) Modern Operating systems such as Windows and Linux would waste some of the efficiencies because their preemption cycles are too short.

4) Excessive demand for Memory bandwidth [IMC would be required for efficient performance]

5) The poor design choices of previous years would hamper performance for some applications [But not games nor small applications]

Reply With Quote

Reply With Quote

Bookmarks