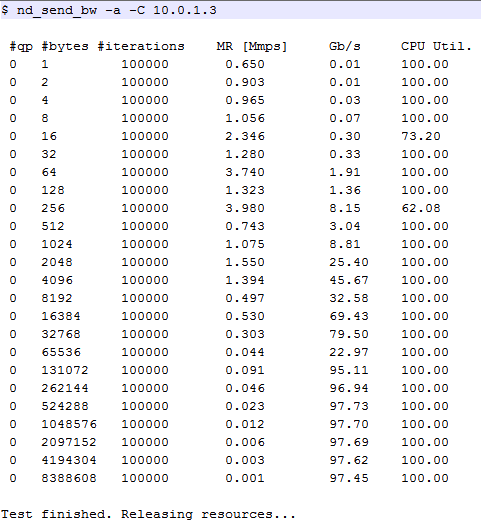

100 Gbps 4x EDR IB

There wasn't really a section in the forum for network bandwidth benchmarking, so I had to just put it here instead.

You can see the results from benchmarking my new 100 Gbps 4x EDR IB network.

Please note that the results are in gigabits per second, which corresponds to a peak of around 12 gigaBYTES per second NETWORK transfer.

(For your reference, a single 6 Gbps SATA SSD is good for at most 750 MB/s. At about 12 GB/s, this would be like having roughly 16 SATA 6 Gbps SSDs tied together in RAID0 for peak bandwidth performance, and this is running on my NETWORK right now.)
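Here is the unit arithmetic as a quick sketch, in case anyone wants to check it. It takes the nominal line rates at face value and ignores encoding and protocol overhead, so real-world numbers will come in lower. The function and variable names are just my own for illustration.

```python
# Rough bits-to-bytes sanity check using nominal line rates only.
# Assumption: no encoding or protocol overhead is accounted for.

def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8.0

link_gbps = 100.0   # nominal 4x EDR InfiniBand rate
sata_gbps = 6.0     # nominal SATA III rate

link_gbytes_per_s = gbps_to_gbytes_per_s(link_gbps)
sata_mbytes_per_s = gbps_to_gbytes_per_s(sata_gbps) * 1000
ssd_equivalent = link_gbps / sata_gbps

print(f"Link: {link_gbytes_per_s} GB/s")
print(f"One SATA SSD: {sata_mbytes_per_s} MB/s")
print(f"SATA SSDs needed to match the link: {ssd_equivalent:.1f}")
```

The last line is just the ratio of the two line rates, so it is an upper bound on what a RAID0 set could do, not a measured figure.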

I actually had difficulty benchmarking this because even a 100 GB RAM drive wasn't fast enough to reach the peak transfer speeds. (The RAM drive topped out at around 9 Gbps.)

Thanks.
