Why play with max details if that doesn't give you enough fps? Ultimately it's about fps. Turning down settings is not a crime.

Imagine this case (at max details):

Some people need 100+ fps in BF3 for competitive online play. Why should they play at 2560x1600 with maximum details and TrSSAA if their GPU(s) cannot get them those 100fps? That makes no sense. This applies to all games. I'm not saying people should reduce their settings because fps drop below a certain threshold

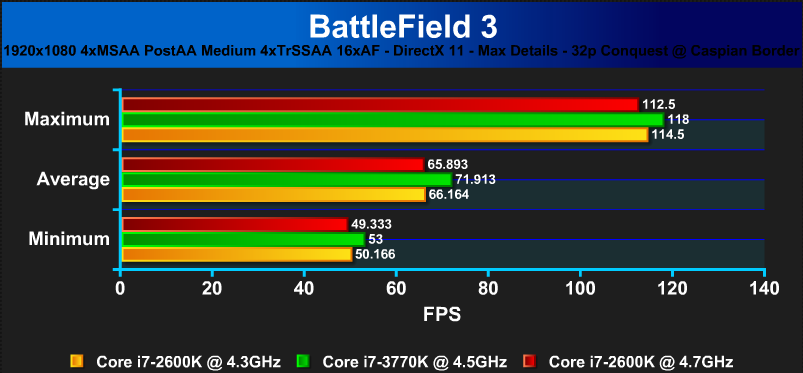

sometimes. I'm saying, a benchmark should show that. Benchmarking CPUs in GPU bottlenecks is a waste of time since it doesn't give you any new information. Your BF3 benchmark may be fine for someone who only needs around 60fps, but for others who need more, it is useless.

Since games can be GPU or CPU bottlenecked depending on where you are in the game, what you're doing and what kind of fps you need, you always need two benchmarks: one that shows what your CPU can ultimately do, and one separate benchmark for the GPU. One single benchmark will never give you all the information you need. Only by combining these two benchmarks can you really gauge the performance you can get from a certain CPU/GPU combo in all relevant cases.
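To make the "combine two benchmarks" idea concrete, here is a rough sketch in Python. It assumes a simplified model where the frame rate you actually get is capped by whichever of the two limits is lower. All names and numbers below are made up for illustration; they are not measurements from any real CPU or GPU.

```python
def expected_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Simplified assumption: the slower component caps the frame rate."""
    return min(cpu_fps, gpu_fps)


def bottleneck(cpu_fps: float, gpu_fps: float) -> str:
    """Name the component that limits the frame rate in this model."""
    if cpu_fps < gpu_fps:
        return "CPU"
    if gpu_fps < cpu_fps:
        return "GPU"
    return "balanced"


# Hypothetical CPU-limited results (measured without a GPU bottleneck).
cpu_limits = {"CPU A": 90.0, "CPU B": 130.0}

# Hypothetical GPU-limited results for two settings on the same GPU.
gpu_limits = {"max details": 60.0, "reduced details": 140.0}

for cpu_name, cpu_fps in cpu_limits.items():
    for setting, gpu_fps in gpu_limits.items():
        fps = expected_fps(cpu_fps, gpu_fps)
        limiter = bottleneck(cpu_fps, gpu_fps)
        print(f"{cpu_name} @ {setting}: ~{fps:.0f} fps ({limiter}-limited)")
```

With these made-up numbers, both CPUs look identical at max details because the GPU caps them both. The difference between them only shows up once the GPU limit is raised, which is exactly the information a GPU-bottlenecked CPU benchmark hides.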

If we take the example from above:

Alt-tabbing can lead to stability problems, that much is true. But it doesn't affect performance in most cases. It's easy to test: alt-tab out of and back into the game 2 or 3 times and see if the fps stay the same (in a static scene of course, where other players or events don't skew the results even if you do nothing). If the results are consistent, just go ahead and bench. But I agree with you that you should usually have a fixed test environment. Savegames are best for that.
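If you want to put a number on "consistent", something like the following sketch works. The 3% tolerance and the fps readings are assumptions picked purely for illustration; choose whatever margin matches the normal run-to-run variation of your static scene.

```python
def is_consistent(readings: list[float], tolerance: float = 0.03) -> bool:
    """Return True if every reading is within `tolerance` (as a fraction)
    of the mean of all readings."""
    if not readings:
        raise ValueError("need at least one fps reading")
    mean = sum(readings) / len(readings)
    return all(abs(r - mean) <= tolerance * mean for r in readings)


# Hypothetical fps readings in the same static scene:
# one before alt-tabbing, then one after each alt-tab out and back in.
stable_run = [100.0, 101.0, 99.0]
unstable_run = [100.0, 80.0, 100.0]

print(is_consistent(stable_run))
print(is_consistent(unstable_run))
```

If the check fails, the alt-tab is affecting performance on that setup and the run should not be used for a benchmark.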

The results you linked to are worthless imo. For one thing, in-game benchmarks were used, but these often show much higher fps than you actually see in the game. That is because AI, pathfinding etc. are not always computed in those benchmarks; they are more like movies than real gameplay. For instance, the GTA 4 in-game benchmark shows at least twice the fps that are common in the game. Very misleading. And secondly, on top of that, the GPU bottleneck ensures that relevant performance differences are simply "hidden". That might be irrelevant in the case of 2600K vs 3770K since the differences would be very small anyway. But the method in general is just wrong.

), Juan J. Guerrero