I still think BF3 performance is poor. Has anyone else found this?

Bencher/Gamer(1) 4930K - Asus R4E - 2x R9 290x - G.skill Pi 2200c7 or Team 2400LV 4x4GB - EK Supreme HF - SR1-420 - Qnix 2560x1440

Netbox AMD 5600K - Gigabyte mitx - Aten DVI/USB/120Hz KVM

PB 1xTitan=16453(3D11), 1xGTX680=13343(3D11), 1x GTX580=8733(3D11)38000(3D06) 1x7970=12059(3D11)40000(vantage)395k(AM3) Folding for team 24

AUSTRALIAN DRAG RACING http://www.youtube.com/watch?v=OFsbfEIy3Yw

I had high framerates but poor perceived performance in BF3 with my GeForce Titan (the fps looked like it was dropping really low at times, even though this was not the case). However, after a lot of testing I found that, at least on my system, the cause of the microstutter etc. was the Asus AI 2 Suite. I have now removed this software, and everything is really smooth.
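
One way to see how a high average framerate can still look bad is to compare the average against the slowest frames. The sketch below uses made-up frame times (not measurements from this system) and a hypothetical helper name, `frame_stats`. It only illustrates that two captures with the same average fps can feel very different.

```python
from statistics import mean

def frame_stats(frame_times_ms):
    """Return (average fps, 99th-percentile frame time in ms)."""
    ordered = sorted(frame_times_ms)
    avg_fps = 1000.0 / mean(ordered)
    # Nearest-rank percentile, done in integers to avoid float rounding.
    rank = -(-99 * len(ordered) // 100)  # ceil(0.99 * n)
    p99_ms = ordered[max(rank, 1) - 1]
    return avg_fps, p99_ms

# Hypothetical captures, 100 frames each (illustrative values only).
smooth = [10.0] * 100                # every frame takes 10 ms
stutter = [8.0] * 95 + [48.0] * 5    # mostly fast, with a few long hitches

for name, capture in (("smooth", smooth), ("stutter", stutter)):
    avg_fps, p99_ms = frame_stats(capture)
    print(f"{name}: avg {avg_fps:.0f} fps, 99th percentile frame time {p99_ms:.0f} ms")
```

Both captures average the same fps, but the second one has frames that take several times longer than the rest, which is what shows up on screen as stutter.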

Asus Z87 Deluxe, 4770K,Noctua NH-D14, Crucial 16 GB DDR3-1600, Geforce Titan, ASUS DRW-24B3ST, Crucial M500 960GB, Crucial M4 256GB, 3 X Seagate 4TB, Lamptron FC5 V2 Fancontroller, Noctua Casefans, Antec P183 Black, Asus Essence STX, Corsair AX860i, Corsair SP2500 speakers, Logitech Illuminated Keyboard, Win7 Home Pro 64 bit + Win 8.1 Home 64 bit Dual boot, ASUS VG278H

Really? How did you diagnose this? Maybe I should try a clean install with few extras and see how it goes. I am sure it's no better than my GTX 670, and my 680 Lightning was better (for me anyway, regardless of what the reviews say).

Well, when I was testing the new GPU in my system I saw that my framerates in BF3 were high, but it didn't look that way on screen. So I ran OCCT, which showed some weird things: within a fraction of a second Core 0 jumped from about 40 C to about 80 C (the other cores stayed at 40-45 C), and the benchmark stopped. I thought either the CPU or the motherboard was faulty, or something was interfering with the readings.

The only thing I could think of on my system that could do this was the Asus AI 2 Suite. Even though I thought I was not really running anything with it (I had deselected all the options in that program), I saw it was still running in the background. So I threw it off my system, and hey presto! No more weird readings in OCCT, and BF3 is running great!

I have had the cards for 2 weeks now. I can't share a review, but I can share some experiences.

The Coolermaster HAF case is a good fit

10,752 shader cores

The hot zone

First, I don't do gaming, so please consult one of the many review sites for further information about the cards in a gaming setup.

Memory

I used the cards in 2 different systems: a single-socket LGA-2011 motherboard and a dual-socket LGA-2011 Xeon motherboard. The main difference is the available bandwidth over the PCI Express interconnect between the cards and main memory. Because each LGA-2011 socket provides only 40 PCIe lanes, a 4-card setup has to run each card on a PCIe x8 link, which limits the bandwidth to main memory to approx. 5.6 GB/sec per card when PCIe 3.0 is enabled (it is off by default).

The aggregate transfer rate of the cards to main memory is above 20 GB/sec. Given that 1600 MHz main memory in an LGA-2011 system achieves 40 GB/sec, it would be easy to assume that 50% of the available memory bandwidth is taken by the I/O operations of the cards and the other 50% is still available to the CPU. There is one caveat though: cache consistency. By the very design of current motherboards, a PCIe transaction flushes the respective line in the cache to avoid any inconsistency between main memory and cache. The CPU then has to load that data from main memory rather than from cache, which is significantly slower.
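
As a rough check of the numbers above, here is the arithmetic as a small sketch. The function name `io_share` is just for illustration, and the calculation deliberately ignores the cache flush effect, so the real cost to the CPU is likely higher than this share suggests.

```python
def io_share(cards, per_card_gbs, memory_gbs):
    """Return (aggregate card traffic in GB/s, fraction of memory bandwidth it uses)."""
    aggregate = cards * per_card_gbs
    return aggregate, aggregate / memory_gbs

# 4 cards at approx. 5.6 GB/s each (x8 links) against approx. 40 GB/s of main memory bandwidth.
aggregate, share = io_share(cards=4, per_card_gbs=5.6, memory_gbs=40.0)
print(f"aggregate: {aggregate:.1f} GB/s, share of memory bandwidth: {share:.0%}")
```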

To cut a long story short: if the 4 cards are not only crunching data held on the cards but also need to regularly transfer data to the main system, most systems will hit performance limits on the CPU/memory side and will not be able to feed the cards fast enough.

The situation is much more "stable" on the dual Xeon side. Out of the 80 PCIe 3.0 lanes the 2 sockets provide, some motherboards make up to 72 lanes available in the 6 or 7 PCIe slots. In a balanced system, 2 x 2 PCIe slots with an x16 link each are available to unleash more bandwidth between the cards and main memory. In PCIe 3.0 x16 mode, data transfer rates per card are in the range of 11.2 GB/sec read or write.
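
For comparison with the measured rates, the theoretical PCIe 3.0 numbers can be sketched as follows. The per-lane rate (8 GT/s) and the 128b/130b encoding come from the PCIe 3.0 spec, not from my measurements; the helper names are made up for this example, and `widest_link` assumes all lanes can be split evenly across the cards, which depends on the motherboard.

```python
PCIE3_GT_PER_LANE = 8.0          # giga-transfers per second per lane (PCIe 3.0)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding

def theoretical_gbs(lanes):
    """Peak one-direction rate of a PCIe 3.0 link in GB/s, before protocol overhead."""
    return lanes * PCIE3_GT_PER_LANE * ENCODING_EFFICIENCY / 8  # bits -> bytes

def widest_link(lanes_available, cards):
    """Widest standard link width that every card can get at the same time."""
    for width in (16, 8, 4, 2, 1):
        if width * cards <= lanes_available:
            return width
    return 0

# Lane counts and measured rates are the ones quoted in this post.
for label, lanes, measured in (("single socket", 40, 5.6), ("dual socket", 72, 11.2)):
    width = widest_link(lanes, cards=4)
    peak = theoretical_gbs(width)
    print(f"{label}: x{width}, peak {peak:.2f} GB/s, measured {measured} GB/s ({measured / peak:.0%})")
```

In both setups the measured rate comes out at roughly the same fraction of the theoretical peak, which suggests the difference between the two systems is simply the link width.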

Energy

Running 4 cards at maximum TDP stretched my Silverstone 1200W PSU beyond its capabilities. I had to replace it with a 1500W unit, which provides enough stability and headroom for quick power surges (caused by sudden load changes). Highest power consumption observed: 1180 Watt on the single-socket system (i7-3930K) and 1460 Watt on the dual E5-2687W Xeon system.

Idle power draw on the single socket system with 4 cards: 120 Watt

Used as pure compute cards, power consumption goes down by 20-40 Watt (Texture units and ROP units aren't used), adding headroom to the TDP and temp budgets.
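
To put the observed peaks in context, here is a rough budget from rated TDPs. The TDP values (250 W per Titan, 130 W for the i7-3930K, 150 W per E5-2687W) are the vendors' published figures and are an assumption added for this sketch, not something I measured; the function name is also just illustrative.

```python
TITAN_TDP_W = 250  # rated board power of a GTX Titan (public spec, assumption)
CPU_TDP_W = {"i7-3930K": 130, "E5-2687W": 150}  # rated CPU TDPs (public spec, assumption)

def rated_load_w(cards, cpus):
    """Sum of rated TDPs for the cards and CPUs only."""
    return cards * TITAN_TDP_W + sum(CPU_TDP_W[c] for c in cpus)

systems = {
    "single socket": (["i7-3930K"], 1180),          # observed peak from this post
    "dual Xeon": (["E5-2687W", "E5-2687W"], 1460),  # observed peak from this post
}
for name, (cpus, observed_w) in systems.items():
    rated = rated_load_w(4, cpus)
    print(f"{name}: rated {rated} W, observed {observed_w} W, remainder {observed_w - rated} W")
```

The remainder covers everything else (board, RAM, drives, fans, conversion losses), so the observed peaks are plausible for cards running at or near their full TDP.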

Temperature

For noise insensitive people, basically a non-issue. By default the fans of the cards max out at 60% of their max rpm. This speed keeps the whole system quiet enough for an office without disturbing anybody, but under load the cards exceed 80 degrees with stock settings. Cranking up the fan speed to 85% (4400 rpm, the max setting in EVGA Precision 4.0) brings the temperature down by 20-25 degrees. A good thing. The bad thing: noise levels are very high. Nothing anybody would like to experience for hours. In my case, the solution was easy: move the rig to the basement. It doesn't make any difference where the numbers are calculated.

For the first few days I was careful, as I wasn't sure what load would be sustainable for hours and days from a power, temperature and lifecycle point of view.

I kept the cards at stock frequency and cranked up the fans to 70% to keep the temps under 70-75 degrees. The cards are very effective at not heating up the case: behind the case it is very hot, but RAM and system board temperatures barely increased.

Over a period of 5 days I kept the cards running with higher settings:

Increased shader frequency by 260 MHz to 1097 MHz

Memory + 160 MHz to 6320 MHz

Fan to 85%

TDP to 106%

Voltage: default 1.162 V

Max temp set to 80 degrees; the cards were between 65 and 75 degrees, depending on load.

Rock solid experience. Not a single glitch.

You might ask: Why Titans?

For me, there were 2 reasons to wait for and choose GK110 cards:

It is the only GeForce card with CUDA compute capability 3.5 (GTX 680 and 690 are on 3.0). This is one step further towards simpler programming and easier performance gains, because it reduces the need for excessive roundtrips between the GPU and the CPU.

1.5 TFlop/s double precision performance (> 6 TFlop/s per system with embarrassingly parallel workloads)
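
The double precision figure can be reproduced from the chip layout. This is a sketch that assumes the Titan's GK110 configuration of 14 active SMX units with 64 double precision units each, counts a fused multiply-add as 2 operations, and assumes the card actually sustains the given clock in double precision mode. The function name is made up for the example.

```python
SMX_ENABLED = 14         # SMX units active on a GTX Titan (assumption, public spec)
FP64_UNITS_PER_SMX = 64  # double precision units per SMX on GK110 (assumption, public spec)
FLOPS_PER_FMA = 2        # a fused multiply-add counts as two operations

def peak_dp_tflops(clock_mhz, cards=1):
    """Theoretical peak double precision throughput in TFlop/s."""
    per_clock = SMX_ENABLED * FP64_UNITS_PER_SMX * FLOPS_PER_FMA
    return cards * per_clock * clock_mhz * 1e6 / 1e12

stock_mhz = 1097 - 260  # stock clock implied by the overclock figures above
print(f"1 card  @ {stock_mhz} MHz: {peak_dp_tflops(stock_mhz):.2f} TFlop/s")
print(f"4 cards @ {stock_mhz} MHz: {peak_dp_tflops(stock_mhz, cards=4):.2f} TFlop/s")
print(f"4 cards @ 1097 MHz: {peak_dp_tflops(1097, cards=4):.2f} TFlop/s")
```

These are peak numbers; real workloads only get close when they are compute bound and keep all units busy.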

What's missing:

Currently, NVidia is only supporting OpenCL 1.1, but not OpenCL 1.2

A lock to fix the cards to the case. They tend to disappear eventually and end up temporarily in my son's gaming system ....

Cheers,

Andy

Last edited by Andreas; 03-29-2013 at 11:32 AM.

I have not yet seen a review of the Titan card comparing its Bitcoin performance against the AMD cards. Is it still weak like the other Nvidia GPUs?

https://en.bitcoin.it/wiki/Why_a_GPU...ter_than_a_CPU

Please scroll down for an explanation

Forgot to mention:

NVidia added the missing logic and shift instruction in version 3.1 of the instruction set (PTX ISA 3.1), available in SM 3.5. It will take some time until the respective applications ship new CUDA kernels that take advantage of this extension.
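
To illustrate why this matters for hashing: SHA-256 uses 32-bit rotates heavily, and without a dedicated instruction each rotate costs two shifts plus an OR. The sketch below models both variants in plain Python. It assumes that the instruction in question is a funnel shift, and the function names are made up for the example; it is not GPU code.

```python
MASK32 = 0xFFFFFFFF

def rotr32_two_shifts(x, n):
    """Rotate right built from two shifts and an OR (three operations)."""
    x &= MASK32
    n %= 32
    return ((x >> n) | (x << (32 - n))) & MASK32

def funnel_shift_right(hi, lo, n):
    """Model of a funnel shift: shift the 64-bit value hi:lo right by n, keep the low 32 bits."""
    n %= 32
    return ((((hi & MASK32) << 32) | (lo & MASK32)) >> n) & MASK32

def rotr32_funnel(x, n):
    """Rotate right as a single funnel shift with both inputs set to x."""
    return funnel_shift_right(x, x, n)

# The rotate amounts 2, 13 and 22 are the ones used in one of the SHA-256 round functions.
value = 0x6A09E667
for amount in (2, 13, 22):
    print(f"rotr {amount:2d}: {rotr32_two_shifts(value, amount):08x} {rotr32_funnel(value, amount):08x}")
```

Both variants give the same result; the difference is that the second maps to a single operation where the hardware provides one.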

Andy

Last edited by Andreas; 03-29-2013 at 12:26 PM.

I don't know the cost of the dedicated hardware, but I can tell you that when I recently sold my 5970s on that big auction site, a ton of bitcoin miners were bidding.

slowpoke:

mm ascension

gigabyte x58a-ud7

980x@4.4ghz (29x152) 1.392 vcore 24/7

corsair dominator gt 6gb 1824mhz 7-7-7-19

2xEVGA GTX TITAN

os: Crucial C300 256GB 3R0 on Intel ICH10R

storage: samsung 2tb f3

cooling:

loop1: mcp350>pa120.4>ek supreme hf

loop2: mcp355>2xpa120.3>>ek nb/sb

22x scythe s-flex "F"

The Bitcoin ship has sailed.

Using NVIDIA or AMD cards to mine coins is not going to be very profitable.

You are now competing with THIS: https://products.butterflylabs.com/h...oin-miner.html

The TITAN is useful for hardcore extreme gamers, and people who use GPGPU, especially that second group. TITAN would be great for science work like brain mapping, weather simulation, nuclear implosion modeling etc. etc.

If you are buying TITAN to mine bitcoins you are making poor choices.

However, that is not the point. All these reviews are wrong: the new drivers NVIDIA released on the 25th of March drastically improve TITAN performance, and the old reviews do not reflect the gaming power of the chip.

Two TITANs in SLI will probably last you through the entire PS4/XBOX Next gaming generation. I can play anything maxed at almost 4K resolution in DX11 Ultimate modes (5760x1200).

Plain 1080P would be a joke!

Intel Core i7-4930K 6 cores 22nm @ 4.2Ghz

EVGA NVIDIA 780Ti KINGPIN EDITION @1.4 Ghz

ACER 2560x1440 LED Monitor (soon to be 4K)

32GB Corsair DDR3 @ 2.6Ghz 9-12-12-30-2N

ASUS X-79-DELUXE USB 3.0 and SATA 6.0Gbps

DVD-RW 52x / BD-RW 8x

2x 480GB SSD w/TRIM at SATA III

1x 1TB WD Black

2x 300GB 10K RPM Velociraptor

1x 4TB WD Green (data)

1200Watt PC Power and Cooling PSU

Lian Li Super Tower Solid Black Aluminum Case, Insulated by Hand

Hand Made H20 Cooling, 3x 92mm Blade Master Fans on RAD

CORSAIR Metal Mechanical KB - Razer Naga Epic

KEF X300A Wireless Speakers (just added! - http://www.kef.com/html/us/showroom/...0aw/index.html)

MULTIBOOT - UBUNTU Linux, Linux MINT, Windows 7 64bit ULTIMATE (Windows 8 stay far away!)

24x7 ROCK SOLID 12 THREAD CPU/MEM TORTURE TESTED PRIME 95

Eh... $29,899.00

AMD is better at bitcoin mining than nVidia anyway, btw...

X2 555 @ B55 @ 4050 1.4v, NB @ 2700 1.35v Fuzion V1

Gigabyte 890gpa-ud3h v2.1

HD6950 2GB swiftech MCW60 @ 1000mhz, 1.168v 1515mhz memory

Corsair Vengeance 2x4GB 1866 cas 9 @ 1800 8.9.8.27.41 1T 110ns 1.605v

C300 64GB, 2X Seagate barracuda green LP 2TB, Essence STX, Zalman ZM750-HP

DDC 3.2/petras, PA120.3 ek-res400, Stackers STC-01,

Dell U2412m, G110, G9x, Razer Scarab

I linked to a $30k monster to show what you are up against running a GPU trying to software mine bitcoins.

If you understand how they work at all, you know that with machines like that (and there are also $500 ASICs), and with ASIC hardware bitcoin miners proliferating in general, you will never make back even a fraction of the cost of the electricity a GPU uses to mine coins now, no matter what AMD GPU is used. ASICs rule, and the difficulty is going to skyrocket.

That being said, TITAN was way under-rated in ALL reviews. Just trying to get the word out there that the new drivers are adding a 10%-50% FPS increase in most games. If you want to know TITAN's true value you need to add at least 10 FPS to just about every score. It puts it into a class that is truly untouchable by AMD or the GTX 690.

SLI TITAN will rule handsomely well into 2013. I will not be surprised if it stands up to the next gen 780 series, giving TITAN owners no reason to upgrade! Also, I don't understand why these were not stock clocked at 1 GHz.

I keep seeing these references to how good the titan is with GPGPU but nobody ever really posts numbers......

All along the watchtower the watchmen watch the eternal return.

Furmark isn't really much of a GPGPU benchmark.

edit

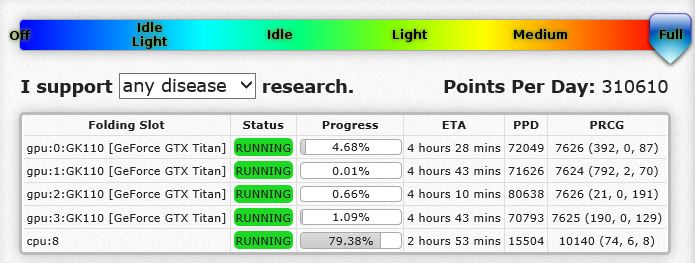

Looks like 66-70k for F@H, 3x a GTX580/680 (~20-22k). Good increase there at least.

Last edited by STEvil; 04-01-2013 at 08:39 PM.

http://www.hwinfo.com/forum/Thread-H...-Beta-released

Added nVidia GK110 models: GeForce GTX Titan LE

"There have been several rumors for a while now that NVIDIA would be building an LE version of the GeForce GTX Titan. We can now expand that even further, as it seems there will be two new models release. An even faster Ultra edition and thus the cheaper LE edition. German website 3dcenter claims that the GeForce GTX TITAN LE has 2496 Shader cores, 208 TMUs, 40 ROPs, and a 320-bit wide memory interface (5120 MB memory.) The tertiary GK110 product would then be the GeForce GTX TITAN Ultra.

The Ultra would be a small upgrade for the 'regular' Titan, opening up one disabled SMX cluster bringing 2880 Shader cores with 240 TMUs towards the Ultra.

There remains a lot of speculation of course, but this could mean the Titan LE at 599 USD. The Original Titan at 799 USD, and the GeForce Titan Ultra at 999 USD."

http://www.guru3d.com/news_story/nvi..._titan_le.html

Very keen!

-PB

-Project Sakura-

Intel i7 860 @ 4.0Ghz, Asus Maximus III Formula, 8GB G-Skill Ripjaws X F3 (@ 1600Mhz), 2x GTX 295 Quad SLI

2x 120GB OCZ Vertex 2 RAID 0, OCZ ZX 1000W, NZXT Phantom (Pink), Dell SX2210T Touch Screen, Windows 8.1 Pro

Koolance RP-401X2 1.1 (w/ Swiftech MCP35X), XSPC EX420, XSPC X-Flow 240, DT Sniper, EK-FC 295s (w/ RAM Blocks), Enzotech M3F Mosfet+NB/SB

I'm skeptical of the standard Titan pricing rofl!

Seems like this is the stop gap until 7XX series later this year.

If they're going to make 3-4 cards out of the name, why not just call it 7XX to begin with lol >.<

-PB

lol, I'd laugh at Titan owners if Nvidia drops the value! Nvidia, if you want to release a new version and lower the Titan's price, wouldn't it bother you if someone told you:

But I hope Nvidia won't touch the price, out of respect for consumers who have a Titan. I just bought two TITAN cards, and now there is an Ultra version coming soon. Fu!!!!!!! you NVIDIA!

CPU : Athlon X2 7850,Clock:3000 at 1.20 | Mobo : Biostar TA790GX A2+ Rev 5.1 | PSU : Green GP535A | VGA : Sapphire 5770 Clock:910,Memory:1300 | Memory : Patriot 2x2 GB DDR2 800 CL 5-5-5-15 | LCD : AOC 931Sw

I doubt that people who buy the absolutely hottest hardware available think it won't depreciate like a rock falling in a perfect vacuum. ^^

But yes, of course Hans is wet, he is standing under a waterfall - James May

Hardware: Gigabyte GA-Z87M-D3H, Intel i5 4670k @ 4GHz, Crucial DDR3 BallistiX, Asus GTX 770 DirectCU II, Corsair HX 650W, Samsung 830 256GB, Silverstone Precision -|- Cooling: Noctua NH-C12P SE14

On a second note:

You might be interested in this post:

http://www.xtremesystems.org/forums/...t=#post5183120

Meanwhile max PPD rates have been going up.

Originally Posted by motown_steve

Every genocide that was committed during the 20th century has been preceded by the disarmament of the target population. Once the government outlaws your guns your life becomes a luxury afforded to you by the state. You become a tool to benefit the state. Should you cease to benefit the state or even worse become an annoyance or even a hindrance to the state then your life becomes more trouble than it is worth.

Once the government outlaws your guns your life is forfeit. You're already dead, it's just a question of when they are going to get around to you.
