Hi guys,

Saw that you guys do xtreme builds, and I think my build's a little xtreme too, so it'd probably qualify.

Basically I have this setup which seems to be grossly underperforming in terms of read/write speed, even though I've chucked in quite a bit of decent stuff.

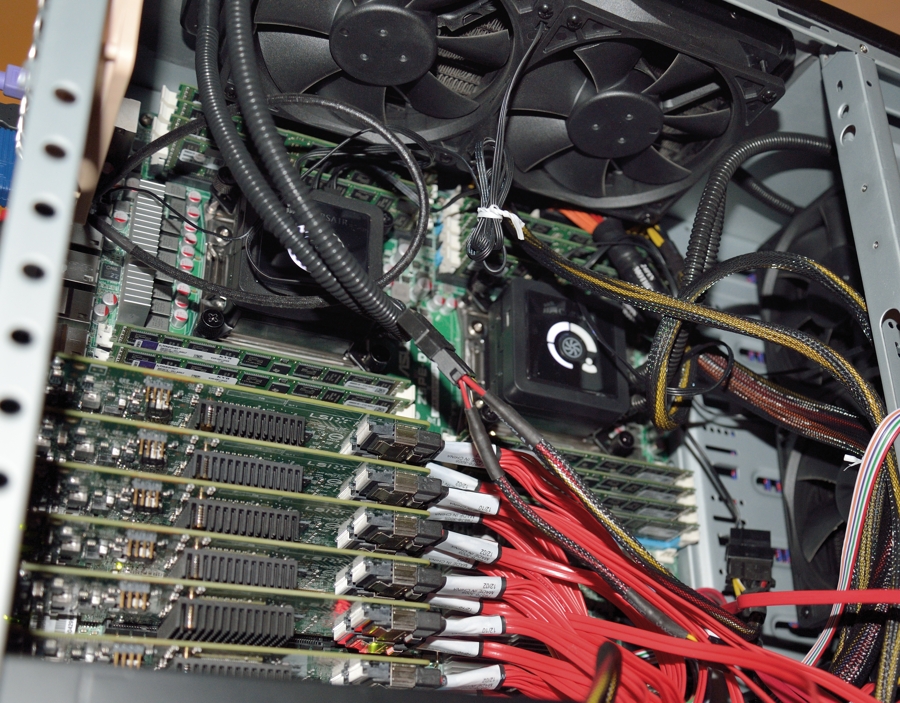

I'm using 8 x Samsung 830 256GB drives (I've got 10 more new ones waiting to go into the chassis, though) and an Areca 1882-ix-16 (which supports 16 internal + 4 external ports) running in RAID0.

The chassis is a barebones Supermicro SYS-2027R-N3RFT+ (http://www.supermicro.com/products/s...27R-N3RFT_.cfm) and I've got 8 x 16GB Hynix DDR3 1066 ECC REG PC3-8500R (128GB in total) and 2 x Intel Xeon E5-2620 CPUs.

System is running VMware ESXi as host OS but I do not believe that it's the bottleneck - a simple dd test done within ESXi's Linux Console shows this:

vmfs/volumes/500768db-efaf45e0-45a1-0025907a08fb # time sh -c "dd if=/dev/zero of=ddfile bs=8k count=2000000 && sync"

2000000+0 records in

2000000+0 records out

real 0m 19.66s

user 0m 0.00s

sys 0m 0.00s

That's around 833 MB/s (roughly 16.4 GB written in 19.66 s, taking bs=8k as 8192 bytes), which is horribly slow considering 8 x Samsung 830s in RAID0 should average around 3GB+/s?
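For anyone who wants to check the arithmetic on that dd run, here's a rough sketch in Python. It assumes dd treats `8k` as 8192 bytes and that the 19.66 s wall time (which includes the `sync`) is the right divisor. The function name `dd_throughput` is just something I made up for illustration.

```python
def dd_throughput(block_size_bytes, count, seconds):
    """Return (MB/s, MiB/s) for a dd run, given block size, count and wall time."""
    total_bytes = block_size_bytes * count
    bytes_per_sec = total_bytes / seconds
    return bytes_per_sec / 1e6, bytes_per_sec / 2**20


# Figures from the dd run above; 8k is assumed to mean 8 * 1024 bytes.
mb_s, mib_s = dd_throughput(8 * 1024, 2_000_000, 19.66)

# Equivalent share per drive if the load were spread evenly over the 8 SSDs.
per_drive_mb_s = mb_s / 8

print(f"{mb_s:.1f} MB/s, {mib_s:.1f} MiB/s, {per_drive_mb_s:.1f} MB/s per drive")
```

Split evenly across the 8 drives, that's only about a hundred MB/s each, which is the part that looks wrong to me.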

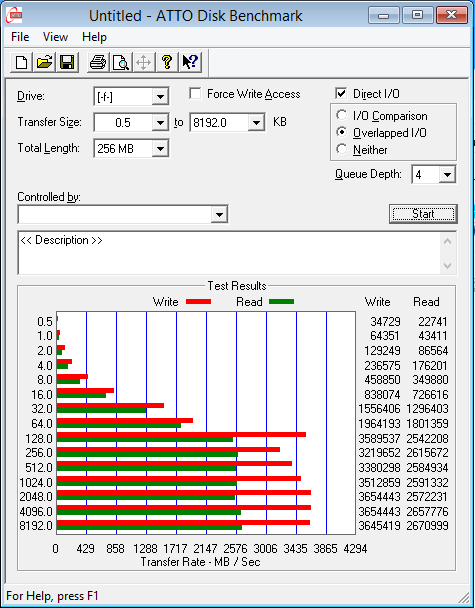

Here are more tests from within a Windows 2008 R2 VM running inside VMware ESXi:

What could possibly have gone wrong to incur such low speeds?
