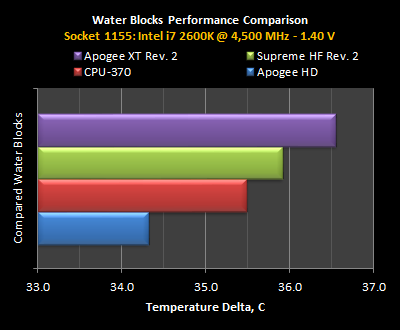

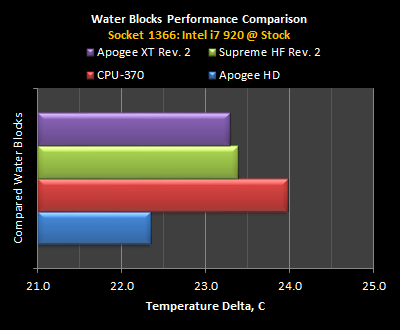

The Apogee™ HD is Swiftech's new flagship CPU waterblock.

Close to 18 months in the making, it was designed to surpass its predecessor the Apogee™ XT in all critical areas:

- Improved thermal performance with emphasis on the latest and upcoming Intel® and AMD® processors: the HD is Socket LGA2011 (Intel®) and "Bulldozer" (AMD®) ready.

- Reduced flow restriction compared to the Apogee XT Rev2.

- Innovative features, with the introduction of multi-port connectivity: two more outlet ports have been added for dramatic flow rate improvements in multiple-waterblock configurations when used with the new MCRx20 Drive Rev3 radiators, which now also include two additional inlet ports (3 total).

- Improved thermal performance out of the box with the inclusion of high performance PK1 thermal compound.

- Cosmetic appeal: the Apogee HD is now available in two colors, Classic black or Fashionable white to match high-end case offerings from NZXT™, Silverstone®, Thermaltake and many others.

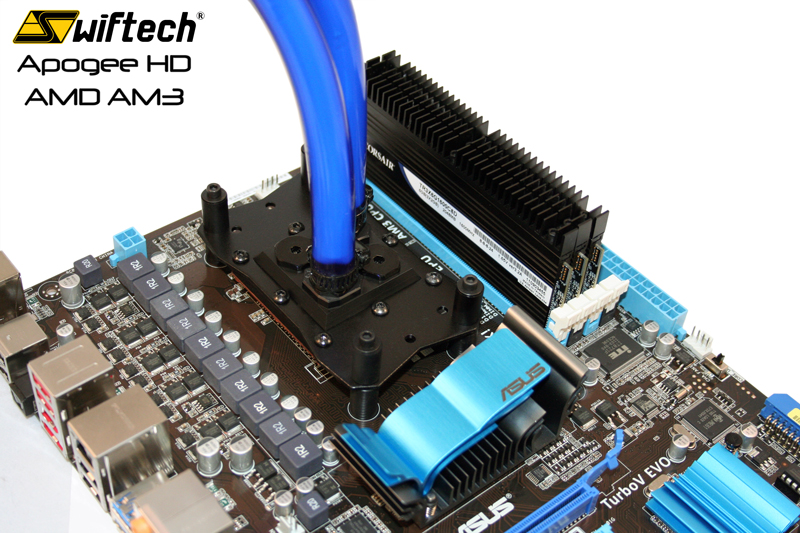

- Elegant multi-mount hold-down plate for AMD Processors

- Reduced cost of ownership: $74.95 MSRP

Compatibility:

Intel

The stock hold-down plate is compatible with all Intel Desktop Processors: 775, 1155, 1156, 1366, 2011

Motherboard backplates:

- 1155/56 & 1366 included

- 775 sent free of charge upon request

- Mounting screws and springs for socket 2011 will be made available soon

AMD

A mounting kit for AMD® processors, sockets 754, 939, 940, AM2, AM3, 770, F, FM1 and legacy Intel® Server socket 771 is available free of charge upon request (shipping costs not included).

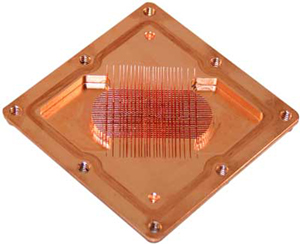

Internals:

The housing is precision-machined from black or white polyacetal copolymer (POM).

The base-plate is precision-machined from C110 copper. Thermal design of the cooling engine is characterized by Swiftech's fin/pin matrix composed of 225 µm (0.009") micro structures; the matrix has been further refined with variable width cross channels to improve flow rate without affecting thermal efficiency, and fabrication quality has also been enhanced to reduce micro machining imperfections.

The mating surface to the CPU is mirror polished, in full compliance with Swiftech's highest quality standards.

Flow Parallelization: "How to create a mixed serial+parallel configuration in complex loops for dramatically improved flow performance."

I am reprinting below the entire text listed on our site under this chapter:

Among the most obvious benefits of harnessing the power of water-cooling is the ability to daisy-chain multiple devices for the CPU, Graphics, Chipset, and even memory.

Up until now, the most common way to do this has been to connect the waterblocks in series. In this type of configuration, however, the pressure drops generated by the individual devices add up, which substantially reduces the overall flow rate in the loop; and as the flow rate diminishes, so does the thermal performance of the system. Many extreme users have resorted to adding a second pump to their system to mitigate this effect.

There is another strategy to connect multiple water blocks: the parallel configuration. It is very advantageous because in this type of setup, when two devices are parallelized, the flow through each device is divided in half, but the pressure drop is divided by a factor of four (pressure drop grows roughly with the square of the flow rate), thus alleviating the need for a second pump. However, it necessitates splitting the main line using Y connectors, and it is seldom used because connectivity is awkward and cumbersome.
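The "factor of four" can be checked with a small sketch. It assumes that each waterblock follows a simple quadratic law, pressure drop = k × flow², which is a common approximation for turbulent flow and not a figure taken from Swiftech's data. The coefficient `k` and the flow value are hypothetical and unitless.

```python
def block_drop(k, flow):
    """Pressure drop across one block, assuming drop = k * flow**2."""
    return k * flow ** 2


def series_drop(k, flow, n):
    """n identical blocks in series: every block sees the full flow,
    and the individual drops add up."""
    return n * block_drop(k, flow)


def parallel_drop(k, flow, n):
    """n identical blocks in parallel: the flow splits evenly,
    and the drop across the group equals the drop across one branch."""
    return block_drop(k, flow / n)


k = 1.0      # hypothetical resistance coefficient
flow = 2.0   # hypothetical main-line flow rate

single = block_drop(k, flow)          # 4.0
parallel = parallel_drop(k, flow, 2)  # 1.0
series = series_drop(k, flow, 2)      # 8.0

print(f"one block at full flow : {single}")
print(f"two blocks in parallel : {parallel}")
print(f"two blocks in series   : {series}")
```

Under this assumption, two parallelized blocks produce a quarter of the pressure drop of a single block at full flow, and one eighth of the drop of the same two blocks connected in series.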

Enter the Multi-port Apogee™ HD waterblock, and MCR Drive Rev3 Radiators. With two additional outlet ports for the Apogee™ HD and two additional inlet ports for the MCR Drive Rev3 radiator, it is now possible to conveniently set up a high flow multi-block loop without using splitters. We will show below that while it always remains preferable to keep the CPU waterblock in series with the main line whenever possible, all other electronic devices in the loop are perfect candidates for parallelization. The resulting configuration is a mixed serial + parallel setup, i.e. the best of both worlds!

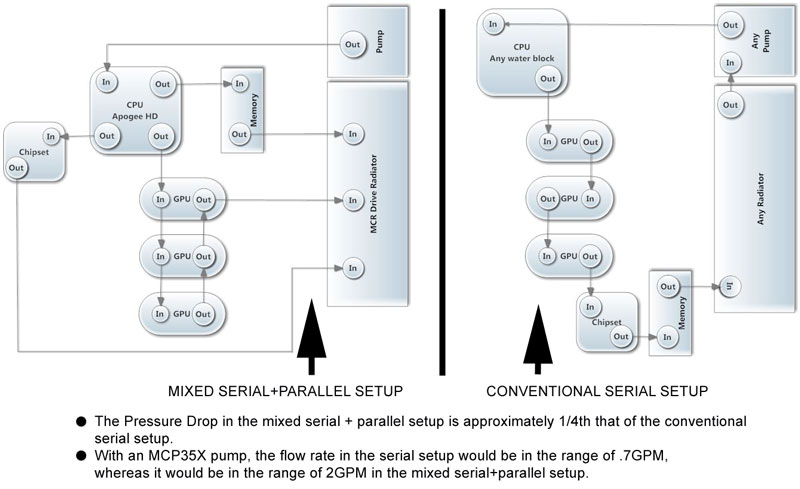

The following flow-charts illustrate two extreme setups (CPU + triple SLI + chipset + memory) and quantify the order of magnitude of the flow performance that can be gained from using a mixed serial + parallel configuration:

As mentioned earlier however, the consequence of parallelizing cooling devices is that the flow rate inside of said devices is also divided, therefore slower. So we now need to introduce another concept to further qualify the rationale behind parallelization: the heat flux generated by the different electronic devices, i.e. the rate of heat energy that they transfer through a given surface.

CPUs

• Modern CPUs generate a lot of heat (up to and sometimes higher than 200 W), which is transferred through a very small die surface (the die is the actual silicon, and it is usually protected by a metallic plate called a heat spreader or IHS). Among other things, what it means in practical terms is that higher flow rates will have relatively more impact on the CPU operating temperature than on any other device. For this reason, and in most configurations, the Apogee™ HD CPU waterblock should preferably be connected in series with the main line, so it can benefit from the highest possible flow rate.

ALL other devices except radiators

• GPUs, whether they have an IHS or not, also generate a lot of heat (sometimes even more than CPUs). However, the physical size of the dies is substantially larger than that of any desktop CPU. The resulting lower heat flux makes GPUs much less sensitive to flow rate. In fact, when both are liquid cooled, we can readily observe that the GPU operating temperature is always much lower than that of the CPU. For this reason, it is 1/ always preferable to parallelize multiple graphics cards with each other, and 2/ when one or more GPU blocks are used in conjunction with one or more other devices like chipset and/or memory, it is always beneficial to parallelize the GPU(s) with said devices using the Apogee™ HD multi-port option.

• Chipsets, Memory, Hard Drives and pretty much everything else one would want to liquid cool in a PC can also be placed in the same use-category as GPUs, either because they have a low or moderate heat flux, or because the total amount of heat emitted by the devices can be handled without sophisticated cooling techniques. What it boils down to is that they are even less flow-sensitive, and we submit that parallelization of these blocks should in fact become a standard.
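The heat flux argument above comes down to dividing power by the surface it passes through. The short sketch below shows the arithmetic only; the power and die-area figures are hypothetical placeholders chosen for illustration, not measurements of any actual CPU or GPU.

```python
def heat_flux_w_per_cm2(power_w, die_area_mm2):
    """Heat flux = power divided by the area it is transferred through.
    The area is given in mm^2 and converted to cm^2 (100 mm^2 = 1 cm^2)."""
    return power_w / (die_area_mm2 / 100.0)


# Hypothetical example values, for illustration only.
cpu_flux = heat_flux_w_per_cm2(power_w=200.0, die_area_mm2=200.0)
gpu_flux = heat_flux_w_per_cm2(power_w=250.0, die_area_mm2=500.0)

print(f"CPU heat flux: {cpu_flux} W/cm^2")  # 100.0 W/cm^2
print(f"GPU heat flux: {gpu_flux} W/cm^2")  # 50.0 W/cm^2
```

With these made-up numbers the GPU emits more total heat than the CPU, yet its heat flux is half as high because the heat is spread over a larger die, which is the reasoning given for treating GPUs as less flow-sensitive.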

Radiators

The higher the flow rate inside of a radiator, the quicker it will dissipate heat. For this reason, radiators will always remain on the primary line, just like the CPU block, in order to benefit from the highest possible flow rate.

In conclusion, we can see that the multi-port Apogee™ HD when coupled with the MCR Drive Rev3 radiators makes a compelling case for optimizing complex loops: it maximizes the flow rate where it matters most (on the CPU, and radiator) while offering a splitter-free parallelization of up to three other components (GPUs, chipset, etc.).
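As a rough illustration of this conclusion, the sketch below compares an all-series loop with a mixed serial + parallel loop. It is not Swiftech's model: it assumes that every component follows a quadratic law (pressure drop = k × flow²), that the pump curve is a straight line, and it uses hypothetical unitless coefficients throughout.

```python
import math


def parallel_k(ks):
    """Equivalent coefficient of branches in parallel, assuming each
    branch follows drop = k * flow**2 and all share the same drop."""
    return sum(1.0 / math.sqrt(k) for k in ks) ** -2


def operating_flow(k_loop, p_max, q_max):
    """Flow at which an assumed linear pump curve,
    P = p_max * (1 - Q / q_max), meets the loop curve P = k_loop * Q**2.
    This is the positive root of k_loop*Q**2 + (p_max/q_max)*Q - p_max = 0."""
    b = p_max / q_max
    return (-b + math.sqrt(b * b + 4.0 * k_loop * p_max)) / (2.0 * k_loop)


# Hypothetical resistance coefficients and pump figures.
K_CPU, K_RADIATOR, K_GPU, K_CHIPSET = 1.0, 0.5, 1.5, 1.0
P_MAX, Q_MAX = 10.0, 4.0

# Up to three other components can hang off the block's three outlets.
others = [K_GPU, K_GPU, K_CHIPSET]

# All series: every coefficient adds up.
k_series = K_CPU + K_RADIATOR + sum(others)
# Mixed: CPU and radiator stay on the main line, the rest is parallelized.
k_mixed = K_CPU + K_RADIATOR + parallel_k(others)

q_series = operating_flow(k_series, P_MAX, Q_MAX)
q_mixed = operating_flow(k_mixed, P_MAX, Q_MAX)

print(f"all-series main-line flow: {q_series:.2f}")
print(f"mixed main-line flow     : {q_mixed:.2f}")
```

In this toy model the CPU block and radiator see a higher main-line flow in the mixed layout, while each parallelized branch carries only a share of it. Actual gains depend on the real pump and block characteristics.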

Alternate configuration:

The Apogee™ HD allows an alternate configuration: by using the main outlet as an entry port instead of the inlet, you can then parallelize the CPU with up to two more components: a second CPU, a GPU, a Chipset, etc. While it remains true as explained earlier that CPUs benefit from higher flow rate than other components, the few degrees in performance gains might not be consequential to some users. In these situations then, using the “alternate” configuration could for example be beneficial as follows:

• When cooling two CPUs, it might be desirable to parallelize them in order to maintain the exact same temperature for each CPU.

• For one of the quickest upgrades ever: one could get started with a CPU-only loop, and use the alternate configuration initially. Then when installing additional water-blocks (graphics for example), all that would be needed is to drain the liquid out, replace the plug(s) with fitting(s), and connect the tubes to the new device(s). There is no need to remove the Apogee HD, no need to remove and recut tubes to length: the existing loop doesn’t need to be modified.

(tho i never watercool the mb and ram)
