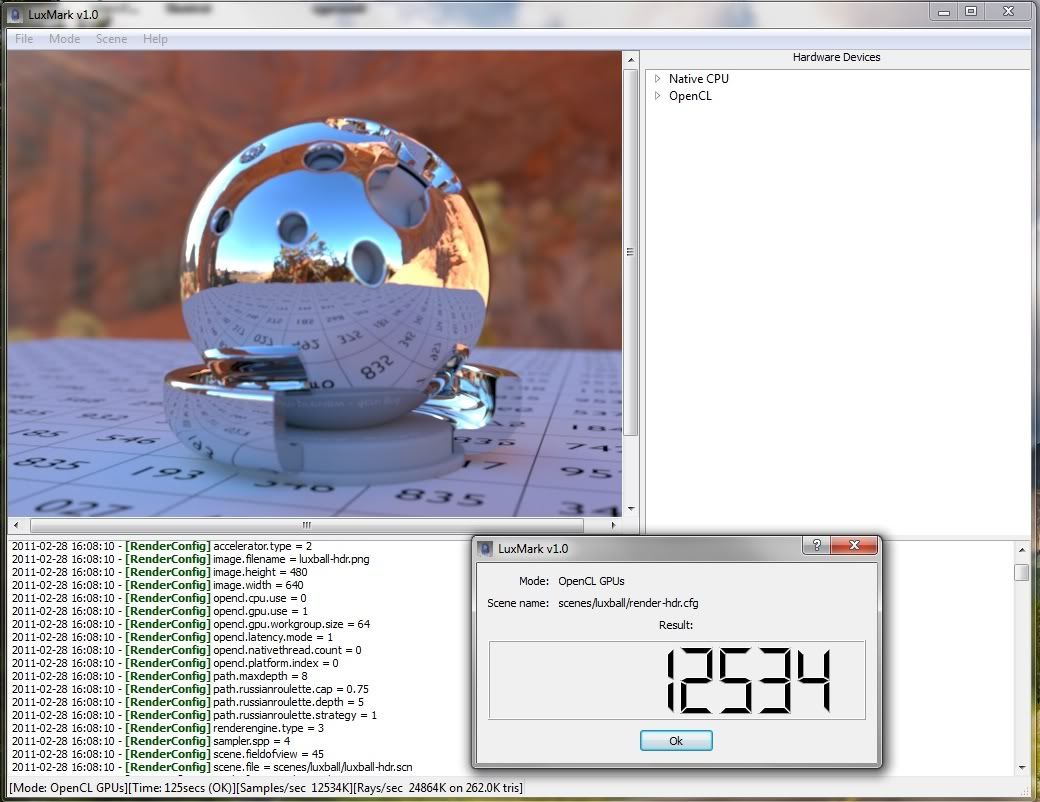

LuxMark is an OpenCL benchmark based on LuxRender, a physically based, unbiased rendering engine. Built on state-of-the-art algorithms, LuxRender simulates the flow of light according to physical equations, producing realistic images of photographic quality (see the Gallery).

Info and Download

The default (start-up) benchmark mode is the GPU path.

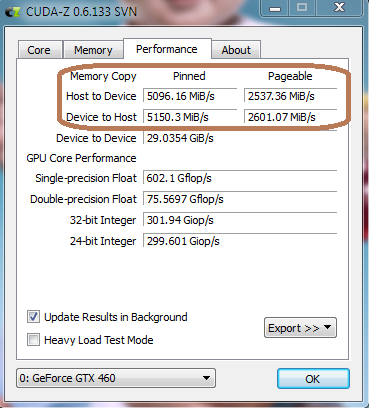

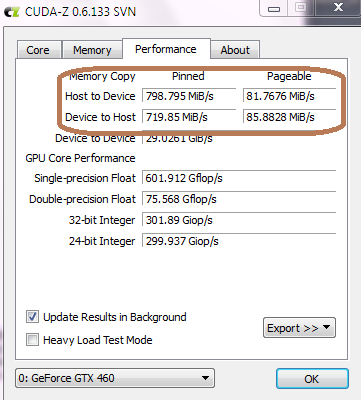

Here is my GTX570 (825/2100MHz):

Happy benchmarking!


The score goes to show how well you've implemented multi-GPU support.
