Thread Summary

The objective of this thread was to understand how an SSD performs under typical desktop applications. HDDs are perceived as being the bottleneck in the system, so it is logical to assume that a faster storage system would have the potential to significantly improve overall system performance.

Enter the SSD.

Is storage still the bottleneck? What types of demand are put on the SSD, and which performance characteristics of the SSD matter? Finally, how much of those key performance characteristics is being utilised?

The tools

hIOmon provides a sophisticated platform that can:

1. Observe I/O operations in action at three different critical points within the OS I/O stack: the file system level (essentially the application/process level), the physical volume level, and the physical device level. This can help provide for a more complete, overall picture of what actually occurs during I/O operation processing.

2. Selectively observe I/O operations at one or more of these three levels.

This can help identify those particular I/O operations that are, for instance, germane to only a single level, e.g., I/O operations that are satisfied directly by the use of the system file cache and thus are effectively limited to the file system level only, I/O operations that are issued by applications/programs directly to a device at the physical device level and without direct interaction with the file system level, etc.

3. Optionally observe I/O operations concurrently at two or more levels and, moreover, correlate I/O operations as they traverse the different levels.

To help anyone interested in doing their own monitoring, a fully functional trial can be downloaded here, and a quick set-up guide can be found in post #187.

The set up (Unless otherwise stated).

Asus Rampage Extreme. ICH9. QX6850. 8GB RAM. Asus Xonar DX2. 4870x2. 160GB X25-M x2 and 1 160GB Seagate Barracuda 7200.

Summary of activities monitored

The operating system was Win 7/64. An image was made to compare performance between different storage configurations within the same system to try and provide comparable results. Typical applications included games, office, web browsing, WMP, Power DVD, Traktor and Photoshop.

OS I/O Stack

An example of I/O activity at each of the three levels that hIOmon can monitor can be found in Post # 329 and Post # 330

A summary of key device level and logical disk I/O activity monitored during game play with Black Ops SP in post #329 is provided below:

Device Level Reads

Data transferred = ~406MB (Incurred over 30 minutes of game play).

Total I/O's = 16,216

Max IOPs = 22.7.

FastIOPS 97.4 % (less than one millisecond).

Min xfer size = 1,024. Average xfer size = 26,317. Max xfer size = 262,144.

Min response time = 0.0416ms. Avg response time = 0.2179ms. Max response time = 10.4449ms

Maximum data transfer rate for a single I/O operation = 268.59MB/s. (If the operation completes in less than one millisecond, the MB/s figure is technically "inflated").
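To show why a sub-millisecond operation gives an "inflated" rate, here is a minimal sketch. It assumes the per-operation rate is simply transfer size divided by response time, with 1 MB taken as 1,000,000 bytes (the thread does not say which convention hIOmon uses). The 0.976ms response time is a hypothetical value picked for illustration, not a figure from the thread.

```python
def single_io_rate_mb_s(xfer_bytes: int, response_ms: float) -> float:
    """Rate of one I/O operation in MB/s, assuming 1 MB = 1,000,000 bytes."""
    seconds = response_ms / 1000.0
    return xfer_bytes / seconds / 1_000_000


# A 262,144-byte read (the max xfer size above) finishing in a
# hypothetical 0.976 ms.
rate = single_io_rate_mb_s(262_144, 0.976)
print(f"{rate:.2f} MB/s")
```

Only 262,144 bytes actually moved, so a per-second figure of roughly 268MB/s is an extrapolation from an interval shorter than one millisecond.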

File/ Logical Disk Level Reads

Data transferred = 2.35GB

Total I/O's = 193,016

Max IOPs = 152.9

FastIOPS 95.3 % (less than one millisecond).

Min xfer size = 0. Average xfer size = 13,662. Max xfer size = 262,144.

Min response time = 0.00416ms. Avg response time = 0.0584ms. Max response time = 10.5207ms

Maximum data transfer rate for a single I/O operation = 2,801.833MB/s.

Device Level I/O Transfers.

I/O xfers at the device level post #336

Metrics from 3 different scenarios were captured.

Black Ops Single Player - Without AV

Black Ops Multi Player - Without AV

OS with just IE, Word, Excel & Live Mail - with AV

OS with just IE, Word, Excel & Live Mail

Read xfers

31,299 read I/O operations; total 674,738,176 bytes read

. Largest single I/O xfer size = 1.14MiB (1 occurrence)

. Smallest single I/O xfer size = 512 bytes (303 occurrences).

Write xfers

8,645 write I/O operations; total 98,129,920 bytes written

. Largest single I/O xfer size = 2MiB (2 occurrences)

. Smallest single I/O xfer size = 512 bytes (352 occurrences).

Highest read IOP Count xfer size = 32kB* (10,471 occurrences).

Highest write IOP Count xfer size = 4kB (6,134 occurrences).

*The high occurrences of 32kB xfers are possibly due to AV activity.

Black Ops Single Player

Read xfers

92,623 read I/O operations; total 1,772,378,112 bytes read

. Largest single I/O xfer size = 2.75 MiB (1 occurrence)

. Smallest single I/O xfer size = 512 bytes (305 occurrences).

Write xfers

6,697 write I/O operations; total 113,272,832 bytes written

. Largest single I/O xfer size = 2.75MiB (2 occurrences)

. Smallest single I/O xfer size = 512 bytes (245 occurrences).

Highest read IOP Count xfer size = 4kB (39,023 occurrences).

Highest write IOP Count xfer size = 4kB (5,018 occurrences).

Black Ops Multi Player

Read xfers

2,648 read I/O operations; total 318,201,856 bytes read

. Largest single I/O xfer size = 0.88 MiB (1 occurrence)

. Smallest single I/O xfer size = 512 bytes (12 occurrences).

Write xfers

6,299 write I/O operations; total 55,623,168 bytes written

. Largest single I/O xfer size = 0.88 MiB (1 occurrence)

. Smallest single I/O xfer size = 512 bytes (167 occurrences).

Highest read IOP Count xfer size = 72kB (1,010 occurrences).

Highest write IOP Count xfer size = 4kB (4,027 occurrences).
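The totals above also give an average transfer size for each scenario (total bytes divided by I/O operations). A minimal sketch using only the figures listed above; the scenario labels and the function name are my own shorthand, and "Desktop apps" stands for the IE, Word, Excel & Live Mail scenario.

```python
# (I/O operations, total bytes) copied from the device-level figures above.
scenarios = {
    "Desktop apps reads": (31_299, 674_738_176),
    "Desktop apps writes": (8_645, 98_129_920),
    "Black Ops SP reads": (92_623, 1_772_378_112),
    "Black Ops SP writes": (6_697, 113_272_832),
    "Black Ops MP reads": (2_648, 318_201_856),
    "Black Ops MP writes": (6_299, 55_623_168),
}


def average_xfer_bytes(ops: int, total_bytes: int) -> float:
    """Mean bytes moved per I/O operation."""
    return total_bytes / ops


for name, (ops, total) in scenarios.items():
    avg = average_xfer_bytes(ops, total)
    print(f"{name}: {avg:,.0f} bytes ({avg / 1024:.1f} KiB)")
```

The average is pulled well above the most common transfer size by a small number of large transfers, so it is best read alongside the "highest IOP count" sizes rather than instead of them.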

Boot up.

The bootup process involves three stages: (Please see post # 255 for more details)

1. Hardware enumeration to enable the OS loader to take control

2. Main Boot Path Time - Essential services and drivers to load desktop

3. Post boot time - Drivers, processes and applications that aren't critical for user interaction and can be loaded with low-priority I/O that always gives preference to user-initiated actions that execute using Normal I/O priority.

By default the hIOmon software is configured to automatically begin monitoring when the services are started during stage three. There is also an option, however, whereby the hIOmon software can be configured so that it instead begins monitoring very early within the "Main Boot Path Time" (stage 2). Please see post #32 for details

The amount of data loading during the boot process can vary significantly depending on the type of applications that have been installed. The time it takes to fully load can also vary significantly depending on user-initiated actions, automatic updates and AV activity.

During boot all the data has to initially be read from the physical disk.

Typical read transfer sizes during boot can be found in post #190. The vast majority of read transfers are 6,000 bytes and below. Only 16 read transfers were above 1MB, and the largest read transfer was 11MB.

The boot process was monitored on three different storage systems. HDD, SSD & SSD Raid 0. (Please see post # 105).

Key storage load metrics (Approximated)

95% random reads and writes.

20,000 I/O operations overall.

Overall average IOPs = 190

Overall average transfer size 20,000 bytes (19KB)

Total read data transferred 420MB

Total write data transferred 24MB

Key storage performance metrics

HDD Percentage of fast read/ write IOPs performed = 1.2%

SSD Percentage of fast read/ write IOPs performed = 98.3%

SSD Raid 0 Percentage of fast read/ write IOPs performed = 98.9%

HDD busy time = 1min 58s

SSD busy time = 8.54s

SSD Raid 0 busy time = 6.59s

HDD average response time = 53.40ms

SSD average response time = 0.49ms

SSD Raid 0 average response time = 0.33ms

Whilst the performance difference between HDD & SSD is significant, the difference between a single SSD and SSD Raid 0 is marginal.
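The size of each gap can be put as a simple ratio. A minimal sketch using the approximate boot figures above; the dictionary layout and the `ratio` helper are my own, and the HDD busy time of 1min 58s is entered as 118 seconds.

```python
# Boot metrics copied from the figures above (approximate values).
boot = {
    "HDD": {"busy_s": 118.0, "avg_response_ms": 53.40},  # 1min 58s
    "SSD": {"busy_s": 8.54, "avg_response_ms": 0.49},
    "SSD Raid 0": {"busy_s": 6.59, "avg_response_ms": 0.33},
}


def ratio(metric: str, slower: str, faster: str) -> float:
    """How many times larger the metric is on the slower system."""
    return boot[slower][metric] / boot[faster][metric]


for metric in ("busy_s", "avg_response_ms"):
    print(f"{metric}: HDD vs SSD = {ratio(metric, 'HDD', 'SSD'):.1f}x, "
          f"SSD vs SSD Raid 0 = {ratio(metric, 'SSD', 'SSD Raid 0'):.2f}x")
```

On these numbers the HDD to SSD step is more than a tenfold change in busy time, while the SSD to SSD Raid 0 step is well under twofold.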

Anvil's post # 118 provides a comparison with no apps installed.

i7 920 UD7, 2R0 C300 64GB

Key storage load metrics

88.7% random reads and writes.

12,251 I/O operations overall.

Overall average transfer size 17,546 bytes

Total read data transferred 190MB

Total write data transferred 14MB

Key storage performance metrics

Percentage of fast read/ write IOPs performed 96.4%

Busy time 2.62s

Average response time 0.32ms

AMD 1090T - Samsung F3 HDD

Key storage load metrics

78.9% random reads and writes.

7,179 I/O operations overall.

Overall average transfer size 17,267 bytes

Total read data transferred 98MB

Total write data transferred 20MB

Key storage performance metrics

Percentage of fast read/ write IOPs performed 61.3%

Busy time 12.57s

Average response time 4.47ms

Whilst the total read data transferred differed significantly between the different storage systems that were monitored the random read percentages were all comparable. The overall average transfer sizes were also comparable.

Anvil's Samsung F3 was a lot faster than the HDD I monitored (Seagate Barracuda). New installs on HDD always seem quite fast at first, but they soon slow down as more data gets loaded over time and the drive becomes fragmented.

The impact of file cache.

After boot up, frequently used data can be held in memory-resident file cache to speed up access that would otherwise be dependent on a read from the physical device. Access to files that are resident in memory is significantly faster than having to retrieve the files from the physical disk. This process helps mask the poor random small file access performance associated with HDD.

The impact of file cache can be found in post #223 and post #224. In post #218 it is evident that none of the small file transfers occurred on the physical disk.

An observable impact of cache with a large file transfer can be found in post #222. Better clarification of the observations can be found in post #223 & post #234.

For information on the impact of file cache with typical use a comparison of the top 10 read and write data transfers between those monitored on the physical device and those monitored overall can be found in post # 241 (reads) and post # 242 (writes).

System top read data transfer size = 24,877 occurrences of 40 bytes

Physical device top read data transfer size = 1,079 occurrences of 4,096 bytes

System top write data transfer size = 7,228 occurrences of 152 bytes

Physical device top write data transfer size = 450 occurrences of 512 bytes

For both reads and writes that occur on the physical device 0.5KB and 4KB file transfers dominate.

The percentage difference between overall writes and reads can be found in post # 244

Overall system reads = 93%

Physical device reads = 88%

The difference between total data transferred between overall data transfer and data transferred on the physical device can be found in post # 243.

Gaming Performance

Call Of Duty Black Ops - Single Player

Please see post #159 for graphs showing the data summarised below.

Maximum sequential read speed = 97.92MB/s

Maximum random read speed = 26.33MB/s

Maximum random access IOP count = 13,750

Maximum Sequential Access IOP Count = 3,275

Fast Read IOP count = 98%

Average read queue depth = 1

Maximum read queue depth = 5

Call of Duty Black Ops Multi Player

Please see post #165 for graphs showing the data summarised below.

Maximum sequential read speed = 121.11MB/s

Maximum random read speed = 10.63MB/s

Maximum random access IOP count = 11,140

Maximum Sequential Access IOP Count = 10,379

Fast Read IOP count = 98%

Average read queue depth = 1

Maximum read queue depth = 4

For a comparison of key performance metrics between SSD & HDD whilst playing Black Ops please see post #48.

In this post the impact of cached files is observed by reloading the same map in multiplayer mode. The first load incurred ~60% of the total reads. The map had to be reloaded a further 9 times to get the 40% balance of reads.

Windows was able to satisfy a high percentage of I/O's specifically related to the Black Ops folder in cache, regardless of the storage medium

SSD without FancyCache = 86%

HDD without FancyCache = 85%

HDD was much slower at loading the first level, but after that it was hard to tell the difference from SSD due to the amount of I/O being served from cache.

Page File

The purpose of a page file is described in Wiki as:

"In computer operating systems, paging is one of the memory-management schemes by which a computer can store and retrieve data from secondary storage for use in main memory. In the paging memory-management scheme, the operating system retrieves data from secondary storage in same-size blocks called pages. The main advantage of paging is that it allows the physical address space of a process to be noncontiguous. Before the time paging was used, systems had to fit whole programs into storage contiguously, which caused various storage and fragmentation problems.

Paging is an important part of virtual memory implementation in most contemporary general-purpose operating systems, allowing them to use disk storage for data that does not fit into physical random-access memory (RAM)".

According to a MSDN blog

...."most pagefile operations are small random reads or larger sequential writes, both of which are types of operations that SSDs handle well.

In looking at telemetry data from thousands of traces and focusing on pagefile reads and writes, we find that

Pagefile.sys reads outnumber pagefile.sys writes by about 40 to 1,

Pagefile.sys read sizes are typically quite small, with 67% less than or equal to 4 KB, and 88% less than 16 KB.

Pagefile.sys writes are relatively large, with 62% greater than or equal to 128 KB and 45% being exactly 1 MB in size.

In fact, given typical pagefile reference patterns and the favorable performance characteristics SSDs have on those patterns, there are few files better than the pagefile to place on an SSD."

In post #160 page file activity was monitored. The system had 8GB of RAM.

Key transfer metrics

Total read data transferred = 90.20MB

Total write data transferred = 280.81MB

Max read data transfer = 37MB

Max write data transfer = 118MB

Photoshop generated large writes, which did not appear to be read back. If the large writes generated by Photoshop are excluded the page file activity was representative of the telemetry data collected by MS.

For a comparison between boot up with the page file enabled and disabled please see post #92. With the page file disabled response times improved along with the percentage of fast IOPs counts.

Sequential speeds & multitasking

Sequential reads of typical apps can be found in post # 237

Play DVD = 12.67 MB/s

Rip DVD = 2.76 MB/s

Quick MS Essential AV scan = 30.71 MB/s

Zip a file = 6.34 MB/s

Black Ops Single Player = 96.30 MB/s

Create HD video = 15.68 MB/s

Copy 600MB AVI file = 252.70 MB/s

Copy 1.3GB folder with various sized jpegs = 251.19 MB/s

An attempt to run multiple tasks to achieve the max sequential read speed of the SSD resulted in the CPU maxing out. (Please see post # 5)

For a comparison between multi tasking sequential read speeds obtained between SSD and HDD please see post #47

HDD Black Ops = 67.37MB/s

SSD Black Ops = 81.70MB/s

HDD Photoshop = 14.09MB/s

SSD Photoshop = 28.31MB/s

HDD MS Essentials = 11.36MB/s

SSD MS Essentials = 29.16MB/s

HDD PowerDVD = 11.90 MB/s

SSD PowerDVD = 16.26MB/s

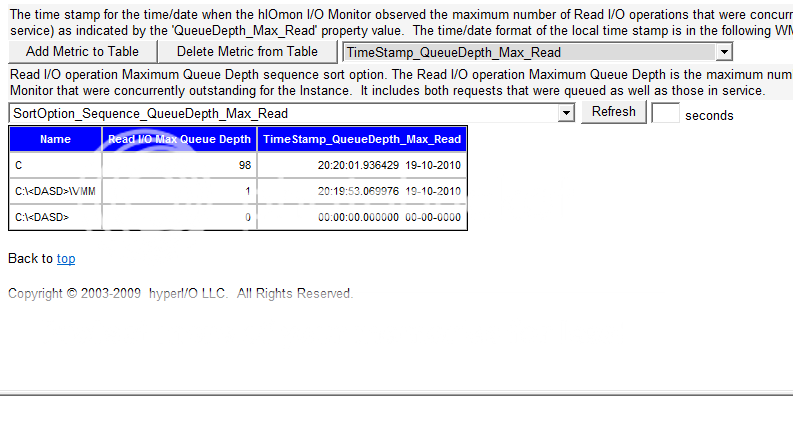

Queue Depths

Queue depths under different loads are summarised in post # 8

Avg Read QD heavy multi tasking - 1.057

Max Read QD heavy multi tasking - 4

Avg Read QD light multi tasking - 1.024

Max Read QD light multi tasking - 35

Black Ops single player read = 1.087

Black Ops single player write = 2.015

A summary of 2 days' worth of data can be found in post # 24

Max average QD = 1.418

Max read QD = 18

Max average write QD = 2.431

Max write QD = 70

Intriguingly read QD's were generally lower on HDD when compared to SSD. Conversely write QDs were generally higher on HDD. Please see post # 47

Significantly higher queue depths were only observable when benchmarks were monitored.

IOPs Work in progress

IOPs can be approximated using the following formula:

Queue depth*(1/latency in seconds) = IOPs

MB/s can be approximated using the following formula:

BytesPerSec = IOPS * Transfer size in bytes

IOP capability is therefore linked to three key variables:

- Transfer size

- Queue depth

- Latency

Average queue read depths, as observed above, are typically just over one. Most of the transfer sizes are 4,096 bytes or less. If these parameters are taken as a given the impact of latency becomes critical to IOP performance.

Based on the average latency of 0.01ms

Queue depth 1 x (1/0.00001) = 100,000 IOPS

Based on the average latency of 0.1ms

Queue depth 1 x (1/0.0001) = 10,000 IOPS

Based on the average latency of 0.2ms

Queue depth 1 x (1/0.0002) = 5,000 IOPS

Based on the average latency of 0.3ms

Queue depth 1 x (1/0.0003) = 3,333 IOPS

Based on the average latency of 0.49ms

Queue depth 1 x (1/0.00049) = 2,040 IOPS
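The worked figures above can be reproduced with a few lines of Python. This is a minimal sketch of the two approximation formulas only; the function names are my own, latency is passed in milliseconds and converted to seconds before dividing, and the 4,096-byte transfer size is taken from the typical sizes noted above.

```python
def approx_iops(queue_depth: float, latency_ms: float) -> float:
    """IOPS ~= queue depth * (1 / latency in seconds)."""
    return queue_depth / (latency_ms / 1000.0)


def approx_bytes_per_sec(iops: float, xfer_bytes: int) -> float:
    """BytesPerSec = IOPS * transfer size in bytes."""
    return iops * xfer_bytes


# Queue depth 1 and 4,096-byte transfers at the latencies used above.
for latency_ms in (0.01, 0.1, 0.2, 0.3, 0.49):
    iops = approx_iops(1, latency_ms)
    mb_s = approx_bytes_per_sec(iops, 4096) / 1_000_000
    print(f"{latency_ms}ms -> {iops:,.0f} IOPS, {mb_s:.1f} MB/s at 4,096 bytes")
```

Note that 0.49ms works out to 2,040.8 IOPS, so rounding gives 2,041 where the figure above is truncated to 2,040.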

Overall average IOPs rates observed (Please see post #94)

Boot up = 190 IOPs

Multi tasking = 70 IOPs

PCMark HDD Suite = 62 IOPs

VMWare = 130 IOPs

ATTO = 3,552 IOPS

It seems that IOP capability is significantly underutilised, which is a shame as it is perhaps the most significant performance advancement in SSD development.

According to AS SSD Benchmark:

X25-M 4k read random speed at QD 1 = 21MB/s

X25-M 4K random read IOPs at QD1 = 5,322 IOPs

X25-M read access time = 0.077ms

X25-M 4k write random speed at QD 1 = 45.17MB/s

X25-M 4k write IOPS at QD1 = 11,563 IOPs

X25-M write access time = 0.097ms

X25-M sequential reads = 251MB/s

X25-M Sequential writes - 103MB/s

Raptor 4K read random speed at QD 1 = 0.76MB/s

Raptor 4K random read IOPs at QD1 = 195 IOPs

Raptor read access time = 8.408ms

Raptor 4K random write speed at QD 1 = 1.48MB/s

Raptor 4K random write IOPS at QD1 = 378 IOPs

Raptor write access time = 2.677ms

Raptor sequential reads = 77.54MB/s

Raptor Sequential writes - 56.19MB/s

OCZ Core 4K read random speed at QD 1 = 7.07 MB/s

OCZ Core 4K random read IOPs at QD1 = 2,293 IOPs (Estimated)

OCZ Core read access time = 0.436ms

OCZ Core 4K random write speed at QD 1 = 1.88 MB/s

OCZ Core 4K random write IOPS at QD1 = 400 IOPs (Estimated)

OCZ Core write access time = 2.496ms

OCZ Core sequential reads = 208.44 MB/s

OCZ Core sequential writes - 120.44 MB/s

If Queue depth*(1/latency in seconds) = IOPs

OCZ Core - Queue depth 1 x (1/0.002496) = Write 400 IOPs

Raptor - Queue depth 1 x (1/0.002677) = Write 373 IOPs

X25-M - Queue depth 1 x (1/0.000097) = Write 10,309 IOPs
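As a rough cross-check, the estimates above can be set against the QD1 4K write IOPs listed from AS SSD Benchmark. A minimal sketch; the function name and data layout are my own, and the OCZ Core "reported" value is itself marked as estimated above, so its agreement is not independent.

```python
# (write access time in ms, 4K write IOPs at QD1) from the benchmark list above.
drives = {
    "OCZ Core": (2.496, 400),   # 400 is marked "Estimated" in the list above
    "Raptor": (2.677, 378),
    "X25-M": (0.097, 11_563),
}


def estimated_iops(access_ms: float, queue_depth: int = 1) -> float:
    """Queue depth * (1 / latency in seconds), with latency given in ms."""
    return queue_depth * 1000.0 / access_ms


for name, (access_ms, reported) in drives.items():
    est = estimated_iops(access_ms)
    diff_pct = (est - reported) / reported * 100
    print(f"{name}: estimated {est:,.0f}, reported {reported:,} ({diff_pct:+.1f}%)")
```

The estimate lands within a few percent for the Raptor and roughly ten percent below the reported figure for the X25-M, which is consistent with the formula being an approximation rather than an exact relation.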

400 4K write IOPs is more than what was observed for typical use yet the OCZ Core would appear to stutter under light load.

Native Command Queuing (NCQ)

Intel made a post to describe the benefit of NCQ.

"AHCI is a hardware mechanism that allows software to communicate with SATA drives. To make that transaction smoother, SATA devices were initially designed to handle legacy ATA commands so they could look and act like PATA devices. That is why many motherboards have legacy or IDE modes for SATA devices in that case users are not required to provide additional drivers during OS installation. However, Windows 7 ships with AHCI drivers built in, so soon this mode will no longer be necessary.

But this begs the question: what features does AHCI mode enable? The answer isn't simple, but one of the bigger advantages is NCQ, or native command queuing.

NCQ is a technology that allows hard drives to internally optimize the order of the commands they receive in order to increase their performance. In an SSD everything is different. There is no need to optimize the command queue, but the result of enabling NCQ is the same there is a performance increase. In brief, NCQ in an Intel SSD enables concurrency in the drive so that up to 32 commands can be executed in parallel.

Also take into consideration that the speed of the processor and the RAM also the amount of it will affect the performance of the Solid State Drive."

With NCQ enabled, benchmarks show a significant increase in 4k reads/writes @QD 32.

The impact of NCQ on large file transfers is monitored in post #317. Here a performance increase can also be seen.

TRIM

Information on TRIM metrics that hIOmon can observe can be found here

An example of the TRIM related IO activity can be found in post # 148. An explanation of what is being observed can be found in post # 149.

In post # 185 Anvil was able to observe TRIM related IO activity and establish that TRIM was working in a software Raid 0 configuration.

In post # 131 Anvil was able to observe a big increase in the maximum response time due to a TRIM operation. A further explanation on this observation can be found in post # 134.

A separate thread on verifying TRIM functionality can be found here.

I have tried to provide accurate and relevant data in this summary. Please let me know if you see any mistakes or omissions.

A special thanks to overthere for all his help and assistance and for a great piece of software. Thanks also to Anvil for providing comparative data and all other posters that have helped along the way.

What is being monitored on the TRIM command? Is it recording when the OS sends the command signal or is it when the SSD actually deals with the command?
