hey guys, we were all n00bs at one point or another, and i believe there are a lot of little things here and there that new crunchers, as well as long-time crunchers, either overlook or don't know about yet. here are a few things to keep in mind:

- GPUgrid can only crunch with nvidia cards; ATI cards are being tested right now

- windows XP completes WUs faster than win7 or vista, hence higher PPD (points per day). the slower times on vista/win7 come down to MS and nvidia's drivers, so there's nothing we can really do about it

- finishing a work unit (WU) in less than 2 days gives a 25% point bonus, and finishing in less than 1 day gives a 50% bonus

- core and memory clocks have a negligible effect on computation time, temps, and power. the number of shaders (stream processors) and their clock have a much bigger impact on computation time

- the CPU speed will also affect your completion time

- generally, it's better to spread your GPUs across different computers than to try to fit as many as you can into one computer, because they fight for resources (will provide a link to my own system for reference)

- when using multiple GPUs in one computer, you should disable SLI

- 2GB of system memory is about the most you'll need, and 4GB is overkill (i ran 6 GPUs and 7 World Community Grid (WCG) work units on 2GB, and it was fine)

- PCI-e bandwidth shouldn't matter (can someone help me verify this?)

- a cooler-running card can save up to 60 watts of power at load (will link to my own testing). find a good balance between cooling and fan noise

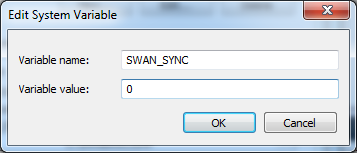

- setting SWAN_SYNC=0 in the environment variables gives fermi cards a good 10-20% performance boost. it dedicates a full CPU thread to the GPU. on any other cards, it hurts performance

- GPUs at crunching load generally draw about 60% of their rated TDP

- the 200 series cards are the best optimized right now. a gt240 is the best in terms of PPD/watt and PPD/cost. a gtx295 is currently the highest-performing card, with a gtx480 just a hair behind. the gtx260 and gtx275 are a good middle ground for PPD, cost, and power consumption.

- gts250s are horrible crunchers for the number of shaders and the clocks they run at. not sure what it is with these cards, but i have not seen a single one with good results

- you can use different cards from the 8/9, 200, and 400 series on the same computer, as long as you use the latest nvidia drivers

- for dedicated crunchers, turn off your screen saver and disable the "turn off monitor after..." setting, because leaving these features on might cause certain GPUs to downclock to 2D mode. i'm not exactly sure why; just turn off your monitor manually

- for headless crunchers, don't use the MS Remote Desktop that comes with windows; it will instantly error out your WU. use UltraVNC or RealVNC for remote access instead

- example of a very high-PPD setup: overclocked gtx480, overclocked CPU, win XP, SWAN_SYNC=0, hyperthreading disabled, no WCG
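
if you want to sanity-check the bonus and power numbers above, here's a rough python sketch. it's just the rules of thumb from this list turned into arithmetic, not anything official from the project. it assumes the bonus clock runs from when the WU is sent to when it's reported, and the 60%-of-TDP figure is the estimate from above, not a measurement. the base credit and TDP values in the example are made up for illustration.

```python
# rough calculators for the GPUGRID rules of thumb in the list above.
# assumptions: the bonus timer runs from when the WU is sent to when it is
# reported, and 60% of rated TDP is a rule of thumb, not a measurement.

SECONDS_PER_DAY = 24 * 60 * 60


def bonus_multiplier(return_seconds: float) -> float:
    """Credit multiplier: +50% under 1 day, +25% under 2 days, else none."""
    if return_seconds < SECONDS_PER_DAY:
        return 1.50
    if return_seconds < 2 * SECONDS_PER_DAY:
        return 1.25
    return 1.00


def awarded_credit(base_credit: float, return_seconds: float) -> float:
    """Base credit scaled by the quick-return bonus."""
    return base_credit * bonus_multiplier(return_seconds)


def estimated_load_watts(rated_tdp_watts: float, load_fraction: float = 0.60) -> float:
    """Estimated draw while crunching, as a fraction of the card's rated TDP."""
    return rated_tdp_watts * load_fraction


if __name__ == "__main__":
    # hypothetical WU worth 10,000 base credits, returned after 20 hours
    print(awarded_credit(10_000, 20 * 60 * 60))  # 15000.0
    # same hypothetical WU returned after 36 hours
    print(awarded_credit(10_000, 36 * 60 * 60))  # 12500.0
    # hypothetical card rated at 250 W TDP
    print(estimated_load_watts(250))  # 150.0
```

swap in the real base credit of your WUs and your card's TDP from its spec sheet. keep in mind a reading from a wall meter includes the rest of the system, not just the GPU.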

please post in here to help me add to this list, or if you have questions about any of the tips i listed.

I would guess that a host with dedicated CPU resources for each GPU would scale fairly well compared to a host with a single GPU and a single free core, so I would assume 4 x 480s with no CPU projects should land within 1% - 3% of a single card with a dedicated thread. Now 8 GPUs on a 4c/8t CPU should take an extra 5% - 10% hit, since each GPU would be sharing a core with another hyperthread. I don't think PCIe bandwidth could affect performance by more than 1%; even in games, slower PCIe bandwidth causes minimal performance hits. I dunno, but that's how I see the GPU scaling working out. Anyone care to try it out? 7 x 480s in a machine? (and your own dedicated nuclear power plant)