I work for a government contractor. We supply them with equipment that uses an SSD. This equipment runs 24 hours a day, 7 days a week, processing information. It runs Windows XP Pro, so the OS is not SSD “aware”.

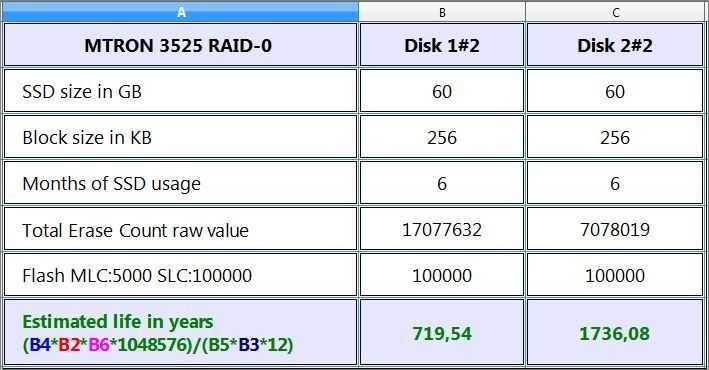

It is my understanding that the average lifespan of each cell is approximately 100K write cycles.

My question is this:

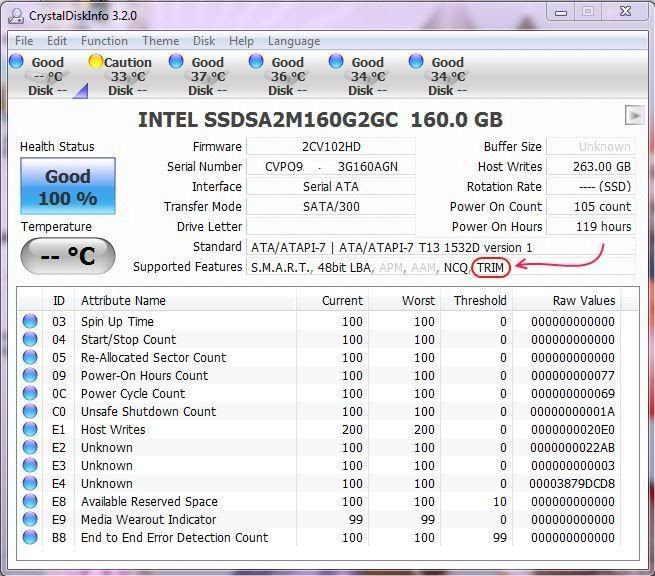

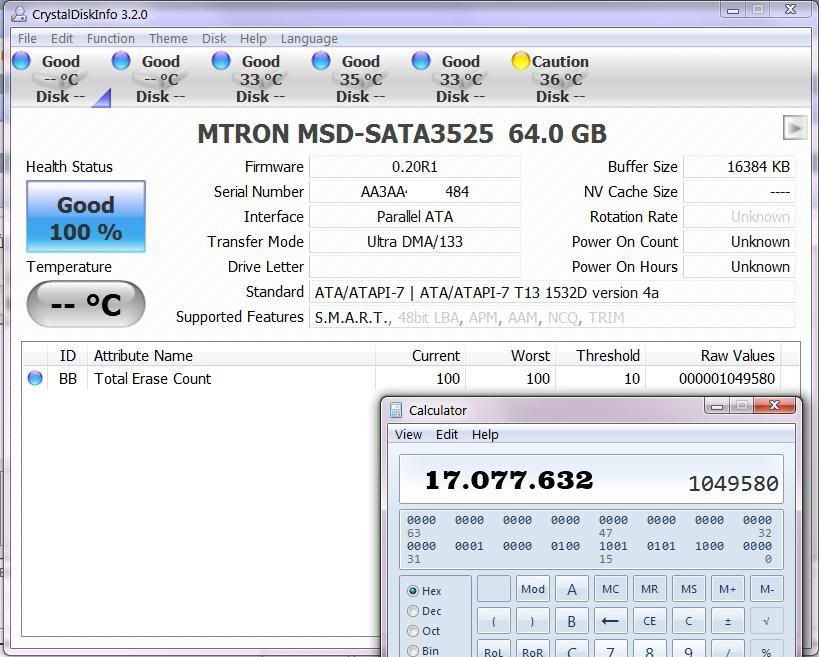

Is there a way to see this drive failing BEFORE it actually fails?

An example of this: we use a 32GB SSD, and when I open the drive's properties it shows up as 29.8GB in Windows. As the drive starts failing because individual cells go bad, will this number change to reflect the current USABLE maximum space?

This would be a very easy way to tell that our SSDs are starting to fail.
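As a side note on the 29.8GB figure: it is consistent with a plain unit conversion rather than with lost cells. The short sketch below assumes the drive holds exactly 32 × 10^9 bytes (the actual byte count of a given drive may differ slightly) and that Windows divides by 2^30 when it labels a size in "GB". The variable names are only for illustration.

```python
# Convert a drive's advertised capacity (decimal gigabytes) into the
# binary gigabytes that Windows displays under the label "GB".

ADVERTISED_GB = 32  # capacity printed on the drive, taken from the post

# Assumption: the manufacturer counts 1 GB as 10^9 bytes.
advertised_bytes = ADVERTISED_GB * 10**9

# Windows counts 1 "GB" as 2^30 bytes when showing drive properties.
reported = advertised_bytes / 2**30

print(f"{reported:.1f} GB as shown by Windows")
```

Under these assumptions the result rounds to 29.8, so a brand-new 32GB drive would already show that number before any cell has worn out.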

What do you think? Is there a way to know before a drive fails?

Thanks in advance for your help


to them.


Some cells might even fail after only a few writes.

